paulsbitsandbytes.com

This unlocks *inference-time hyper-scaling*

For the same runtime or memory load, we can boost LLM accuracy by pushing reasoning even further!

Read more 👇

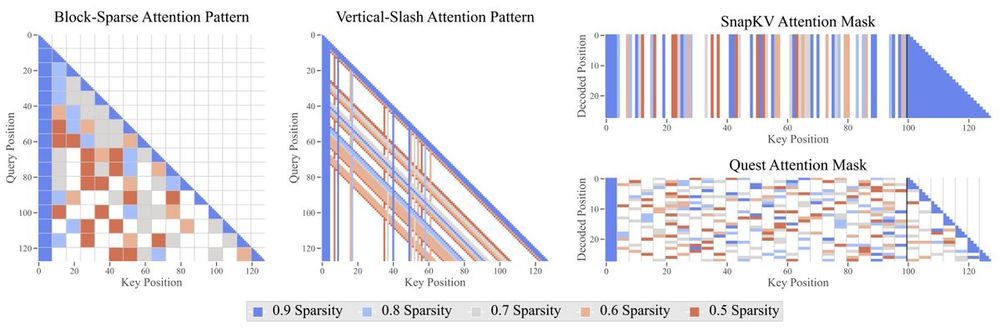

We performed the most comprehensive study on training-free sparse attention to date.

Here is what we found:

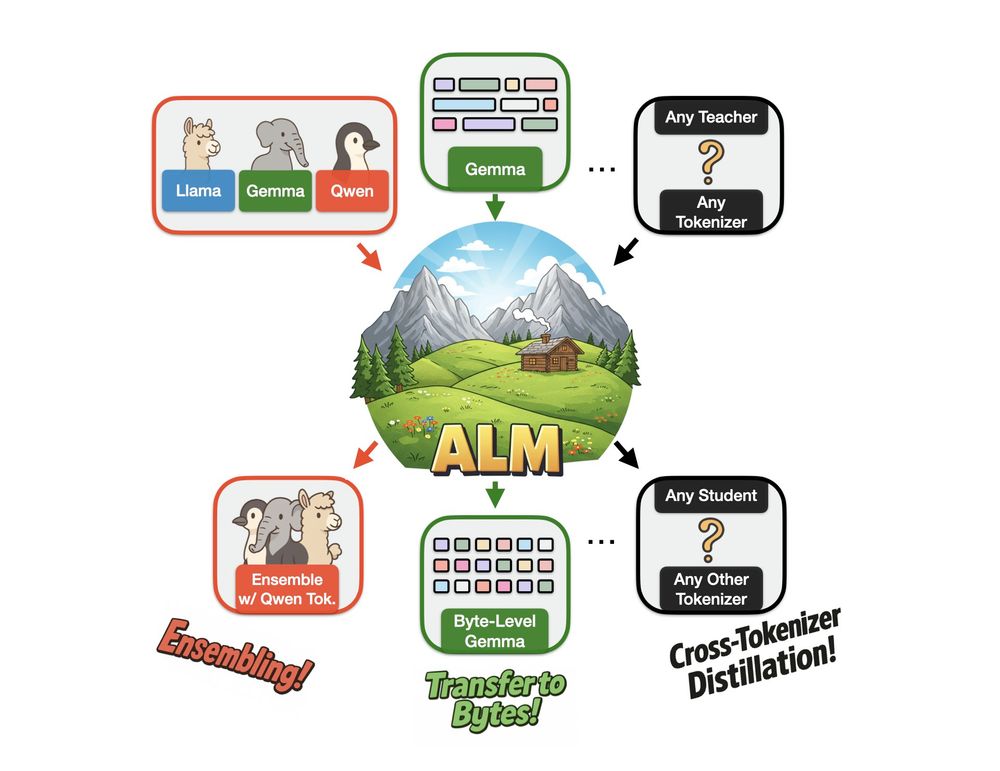

With ALM, you can create ensembles of models from different families, convert existing subword-level models to byte-level, and a bunch more 🧵
