Kaggle Notebook Master and collector of competition silver medals. https://www.kaggle.com/lextoumbourou

Talks about software dev, ML, generative art, note-taking, dog photos. Mostly cross-posting from Mastodon.

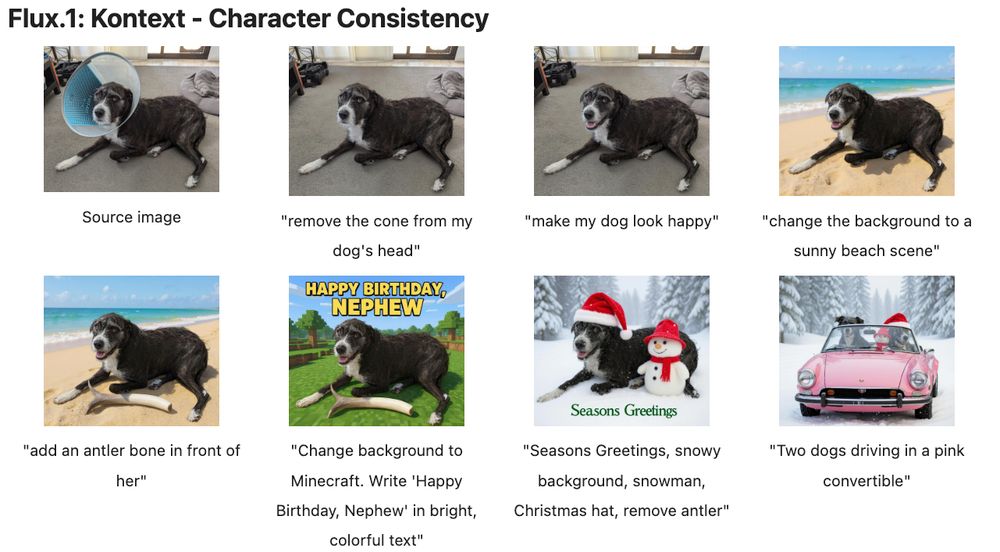

https://notesbylex.com/absurdly-good-doggo-consistency-wit…

Turns out we can just use the LLM's internal sense of confidence as the reward signal to train a reasoning model, no reward model / ground-truth examples / self-play needed.

Amazing.

https://notesbylex.com/learning…
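
A rough sketch of what "confidence as a reward" could look like, assuming we score a generation by the negative mean entropy of its next-token distributions. That scoring choice and the names `token_entropy` and `confidence_reward` are my own illustration, not necessarily the measure used in the linked note.

```python
import math


def token_entropy(probs):
    """Shannon entropy (in nats) of one next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def confidence_reward(token_distributions):
    """Negative mean entropy over generated tokens (assumed confidence proxy).

    Higher values mean the model put more probability mass on fewer tokens.
    """
    if not token_distributions:
        return 0.0
    entropies = [token_entropy(dist) for dist in token_distributions]
    return -sum(entropies) / len(entropies)


# Toy distributions over a 4-token vocabulary (made-up numbers).
confident = [[0.97, 0.01, 0.01, 0.01], [0.90, 0.05, 0.03, 0.02]]
unsure = [[0.25, 0.25, 0.25, 0.25], [0.40, 0.30, 0.20, 0.10]]

print(confidence_reward(confident) > confidence_reward(unsure))  # True
```

The reward needs nothing but the model's own output distributions, which is the appeal: no labels and no separate reward model.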

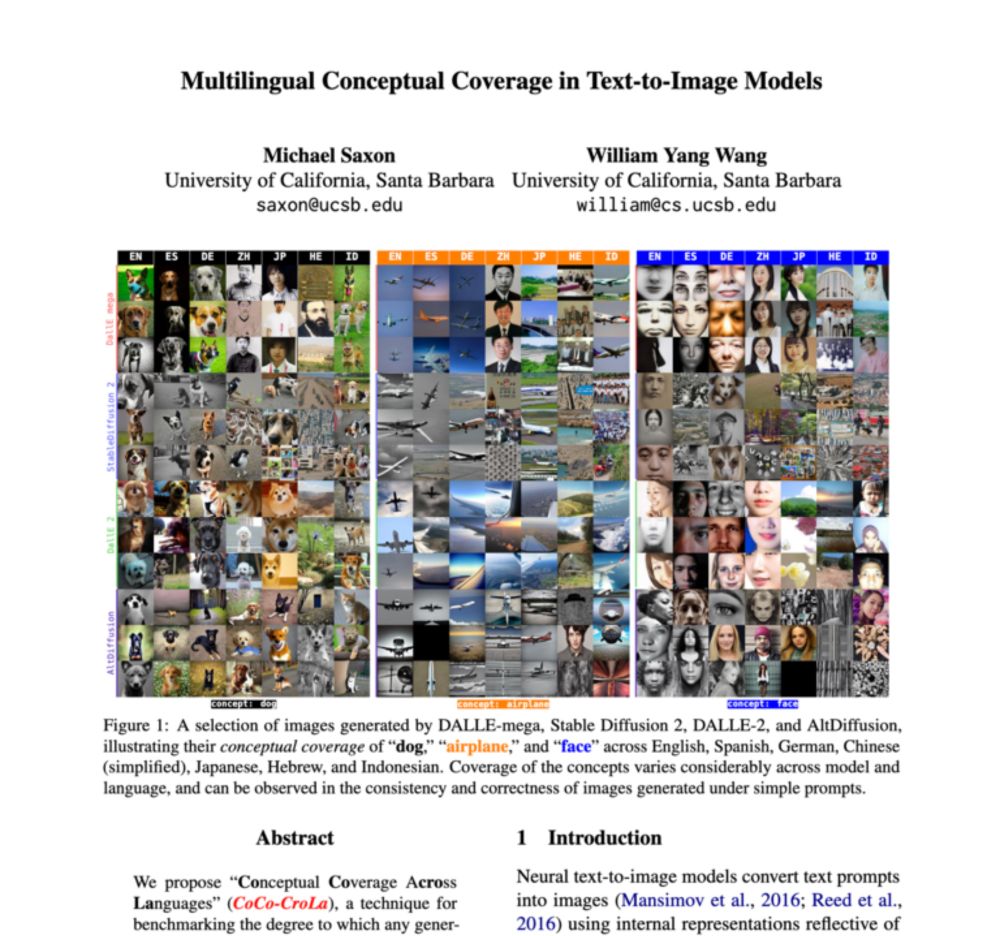

gist.github.com/michaelsaxon...

We are happy to "quietly" release our latest GRPO-trained Tulu 3.1 model, which is considerably better in MATH and GSM8K!

www.reddit.com/r/business/c...

arxiv.org/abs/2501.19393

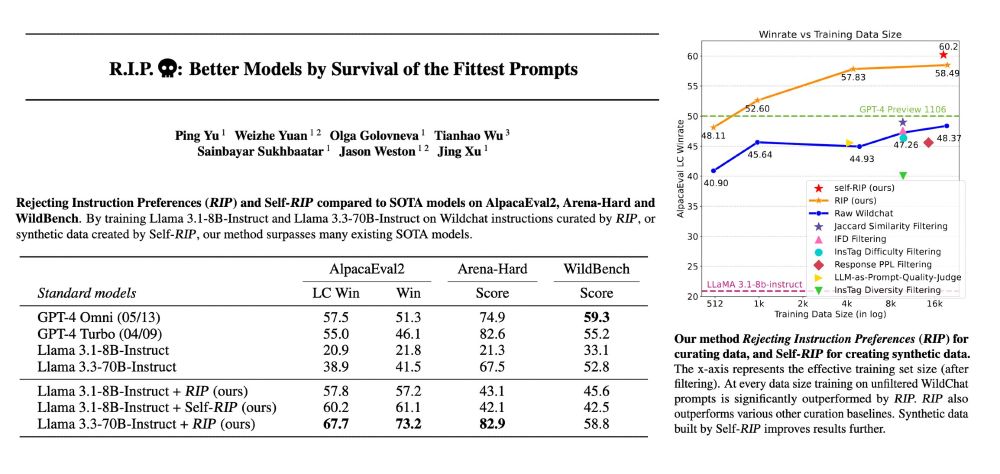

Rejecting Instruction Preferences (RIP) can filter prompts from existing training sets or make high-quality synthetic datasets. They see large performance gains across various benchmarks compared to unfiltered data.

arxiv.org/abs/2501.18578

"The recipe:

We follow DeepSeek R1-Zero alg -- Given a base LM, prompts and ground-truth reward, we run RL.

We apply it to CountDown: a game where players combine numbers with basic arithmetic to reach a target number."

github.com/Jiayi-Pan/Ti...
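
For a feel of what a ground-truth reward for CountDown might look like, here is a minimal sketch. It assumes the model's answer arrives as a plain arithmetic expression, that each supplied number may be used at most once, and that the reward is a simple 0/1. The function name `countdown_reward` and those rules are my assumptions, not code from the linked repo.

```python
import ast
import operator
from collections import Counter

# Only the four basic arithmetic operations are allowed.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _evaluate(node, used):
    """Evaluate a parsed expression, recording every integer literal it uses."""
    if (
        isinstance(node, ast.Constant)
        and isinstance(node.value, int)
        and not isinstance(node.value, bool)
    ):
        used.append(node.value)
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        left = _evaluate(node.left, used)
        right = _evaluate(node.right, used)
        return _OPS[type(node.op)](left, right)
    raise ValueError("unsupported expression")


def countdown_reward(expression, numbers, target):
    """Return 1.0 if the expression hits the target using only the given numbers."""
    used = []
    try:
        tree = ast.parse(expression, mode="eval")
        value = _evaluate(tree.body, used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return 0.0
    # Counter subtraction keeps only numbers used more often than supplied.
    if Counter(used) - Counter(numbers):
        return 0.0
    return 1.0 if abs(value - target) < 1e-9 else 0.0


print(countdown_reward("(25 - 5) * 4", [25, 5, 4, 3], 80))  # 1.0
print(countdown_reward("20 * 4", [25, 5, 4, 3], 80))        # 0.0 (20 was not supplied)
```

Because the answer can be checked mechanically, no learned reward model is needed, which is what makes the game a convenient RL testbed.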

1. generate 10-20 examples from criteria in different styles with r1/o1/CoT, etc

2. have a model rate each example on quality + adherence.

3. filter/edit top examples by hand

Repeat for each category of output.
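
The loop above, sketched in code. `generate` and `rate` are hypothetical callables standing in for whatever model calls you use; `build_examples`, `per_style` and `keep_top` are also names I made up for the illustration. Step 3 (hand filtering and editing) stays manual and happens on the returned shortlist.

```python
def build_examples(criteria, styles, generate, rate, per_style=5, keep_top=5):
    """Generate candidates per style, score them, and return the best few.

    generate(criteria, style) -> str     (hypothetical model call)
    rate(example, criteria)   -> float   (hypothetical judge-model call)
    """
    # Step 1: generate several candidates for each style.
    candidates = [
        generate(criteria, style)
        for style in styles
        for _ in range(per_style)
    ]
    # Step 2: rank by the judge's quality + adherence score, best first.
    ranked = sorted(candidates, key=lambda ex: rate(ex, criteria), reverse=True)
    # Step 3 happens by hand on this shortlist.
    return ranked[:keep_top]


# Stub callables so the sketch runs without any model behind it.
def fake_generate(criteria, style):
    return f"[{style}] example for: {criteria}"


def fake_rate(example, criteria):
    return float(len(example))


shortlist = build_examples(
    "polite refund email", ["cot", "very-terse"], fake_generate, fake_rate,
    per_style=2, keep_top=2,
)
print(shortlist)
```

Running it once per output category matches the "repeat for each category" step.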

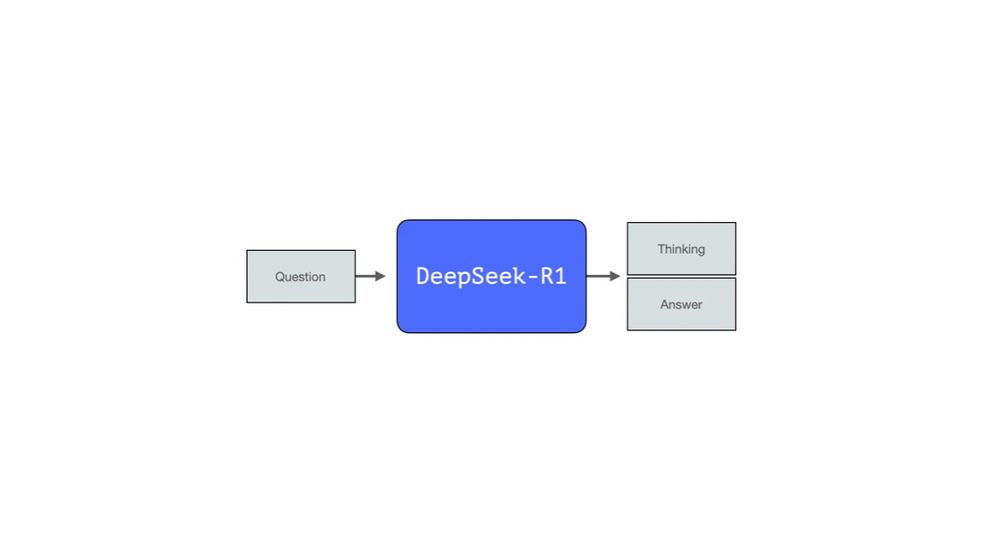

Spent the weekend reading the paper and sorting through the intuitions. Here's a visual guide and the main intuitions to understand the model and the process that created it.

newsletter.languagemodels.co/p/the-illust...

github.com/ml-explore/m...

via awnihannun on Twitter.

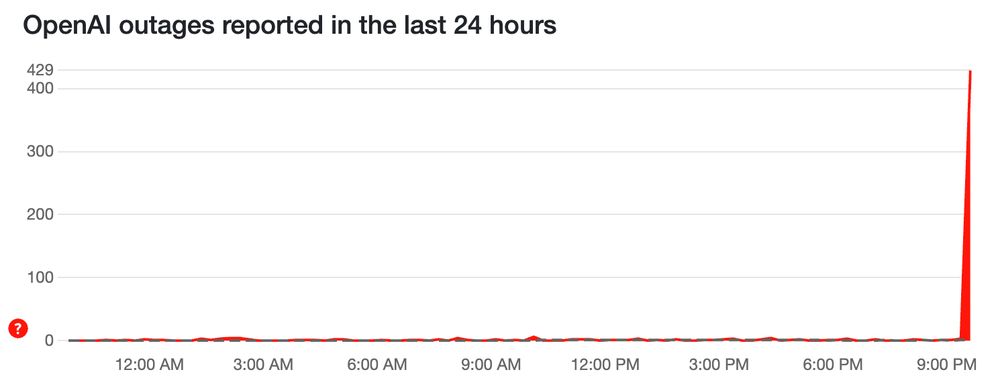

$500 billion! For comparison, the 1960s Apollo project, when adjusted for inflation, cost around $250B.

openai.com/index/announ...

DeepSeek V3:

* Semi-Private: 7.3%

* Public Eval: 14%

DeepSeek Reasoner:

* Semi-Private: 15.8%

* Public Eval: 20.5%

Performance is on par, albeit slightly lower, than o1-preview"

x.com/arcprize/sta...

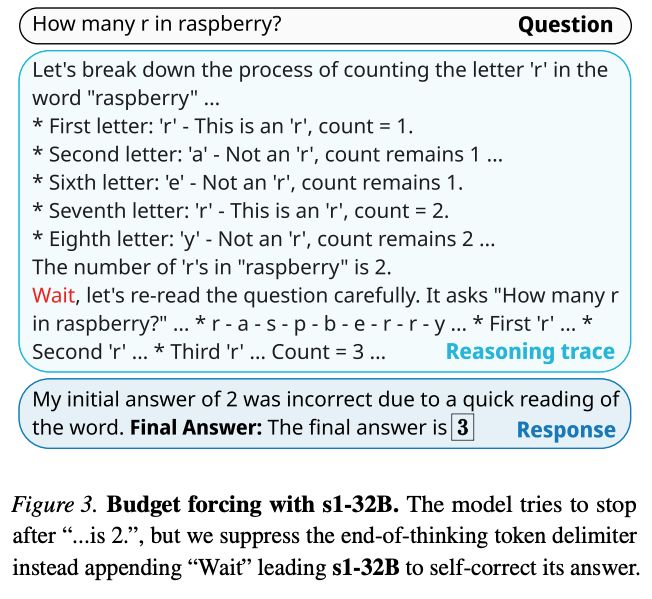

"So positions 3, 8, and 9 are Rs? No, that can't be right because the word is spelled as S-T-R-A-W-B-E-R-R-Y, which has two Rs at the end...

Wait, maybe I'm overcomplicating it...."

gist.github.com/IAmStoxe/1a1...
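
For reference, the check the model is talking itself out of takes a couple of lines (the helper name `letter_positions` is just for illustration):

```python
def letter_positions(word, letter):
    """Return the 1-indexed positions at which `letter` occurs in `word`."""
    return [i for i, ch in enumerate(word.lower(), start=1) if ch == letter.lower()]


positions = letter_positions("strawberry", "r")
print(positions)       # [3, 8, 9]
print(len(positions))  # 3
```

So its first guess of positions 3, 8 and 9 was right before the second-guessing started.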

1. Now support Wikilinks with a display name: `[[my-page|A Cool Page]]`

2. Also added support for aliases.

See the release notes: github.com/lextoumbouro...
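
If the display-name syntax is new to you, this is roughly how such a link splits into a target and a label. It is a standalone regex sketch, not the project's actual parser; `parse_wikilinks` and the fallback of using the target as the label are my assumptions.

```python
import re

# [[target]] or [[target|display name]]
WIKILINK = re.compile(r"\[\[([^\[\]|]+)(?:\|([^\[\]]+))?\]\]")


def parse_wikilinks(text):
    """Return (target, display) pairs; display falls back to the target."""
    links = []
    for match in WIKILINK.finditer(text):
        target = match.group(1).strip()
        display = (match.group(2) or target).strip()
        links.append((target, display))
    return links


print(parse_wikilinks("See [[my-page|A Cool Page]] and [[other-page]]."))
# [('my-page', 'A Cool Page'), ('other-page', 'other-page')]
```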

Unsloth finetunes LLMs 2x faster, with 70% less VRAM, 12x longer context - with no accuracy loss

Documentation: docs.unsloth.ai

We also fixed 4 bugs in Phi-4: unsloth.ai/blog/phi4

Phi-4 Colab: colab.research.google.com/github/unslo...
