🌐 github.com/emirkaan5/OWL/

📜 arxiv.org/abs/2505.22945

Work done at @UMassNLP by @alishasrivas.bsky.social, @emirdotexe.bsky.social, @nhminhle.bsky.social, @chautmpham.bsky.social, @markar.bsky.social, and @miyyer.bsky.social.

LLaMA-3.1-70B: 4-bit > 8-bit ⏩ accuracy drops MORE at 8-bit (up to 25%)

LLaMA-3.1-8B: 8-bit > 4-bit ⏩ accuracy drops MORE at 4-bit (up to 8%)

🧠 Bigger models aren’t always more robust to quantization.

On GPT-4o-Audio, audio input vs. text input:

📖 Direct Probing: 75.5% (vs. 92.3%)

👤 Name Cloze: 15.9% (vs. 38.6%)

✍️ Prefix Probing: 27.2% (vs. 22.6%)

Qwen-Omni shows similar trends but lower accuracy.

🧩 shuffled text

🎭 masked character names

🙅🏻‍♀️ passages w/o characters

🚨 All three reduce accuracy, to a degree that varies across languages, BUT models still identify these books far more accurately than newly published books (0.1%) 🚨

63.8% accuracy on English passages

47.2% on official translations (Spanish, Turkish, Vietnamese) 🇪🇸 🇹🇷 🇻🇳

36.5% on completely unseen languages like Sesotho & Maithili 🌍

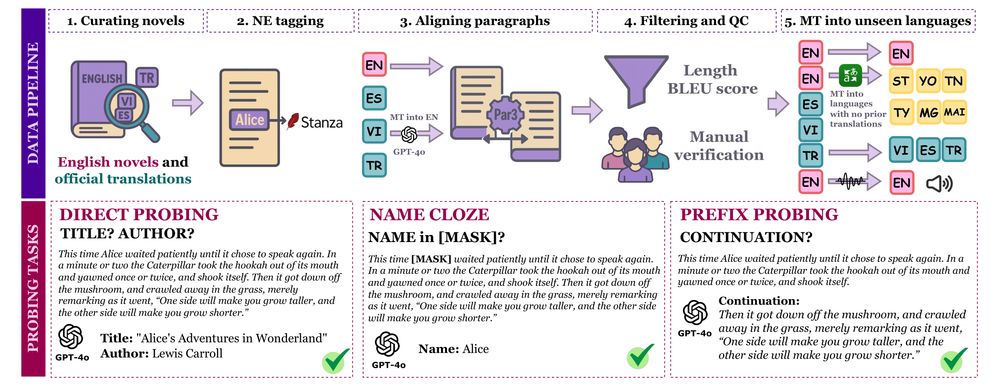

We probe LLMs to:

1️⃣ identify book/author (direct probing)

2️⃣ predict masked names (name cloze)

3️⃣ generate continuation (prefix probing)
