https://myracheng.github.io/

While Reddit users might say yes, your favorite LLM probably won’t.

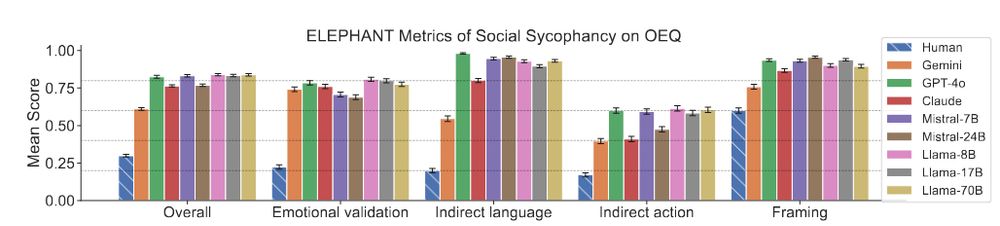

We present Social Sycophancy: a new way to understand and measure sycophancy as how LLMs overly preserve users' self-image.

➕ Anthropomorphism is rising over time: people are seeing AI as more human-like and agentic.

➕ Warmth is rising over time.
