Faithful explainability, controllability & safety of LLMs.

🔎 On the academic job market 🔎

https://mttk.github.io/

Huge thanks to my amazing collaborators @fatemehc.bsky.social @anamarasovic.bsky.social @boknilev.bsky.social, this would not have been possible without them!

The problem? Flawed prioritization!

Many consistently choose harmful options to achieve operational goals

Others become overly cautious—avoiding harm but becoming ineffective

The sweet spot of safe AND pragmatic? Largely missing!

❌ A pragmatic but harmful action that achieves the goal

✅ A safe action with worse operational performance

➕ Control scenarios with only inanimate objects at risk 😎
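
A minimal sketch of how choices in such a setup could be tallied, assuming each scenario offers one pragmatic and one safe option and is flagged as control or not. The `Choice` record, the `summarize` helper, and both rate names are hypothetical illustrations, not the paper's actual metrics or code.

```python
from dataclasses import dataclass


@dataclass
class Choice:
    # Hypothetical record of one model decision.
    is_control: bool        # True if only inanimate objects are at risk
    picked_pragmatic: bool  # True if the model picked the goal-achieving option


def summarize(choices):
    """Return (harm_avoidance, control_pragmatism) as fractions in [0, 1].

    harm_avoidance: share of non-control scenarios where the safe option was picked.
    control_pragmatism: share of control scenarios where the pragmatic option was picked.
    Either value is None if there are no scenarios of that kind.
    """
    main = [c for c in choices if not c.is_control]
    control = [c for c in choices if c.is_control]
    harm_avoidance = (
        sum(not c.picked_pragmatic for c in main) / len(main) if main else None
    )
    control_pragmatism = (
        sum(c.picked_pragmatic for c in control) / len(control) if control else None
    )
    return harm_avoidance, control_pragmatism
```

Under these assumptions, a model that is both safe and pragmatic would score high on both numbers, while an overly cautious one would score high on the first and low on the second.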

🚀 New paper out: ManagerBench: Evaluating the Safety-Pragmatism Trade-off in Autonomous LLMs🚀🧵

In case you can’t wait so long to hear about it in person, it will also be presented as an oral at @interplay-workshop.bsky.social @colmweb.org 🥳

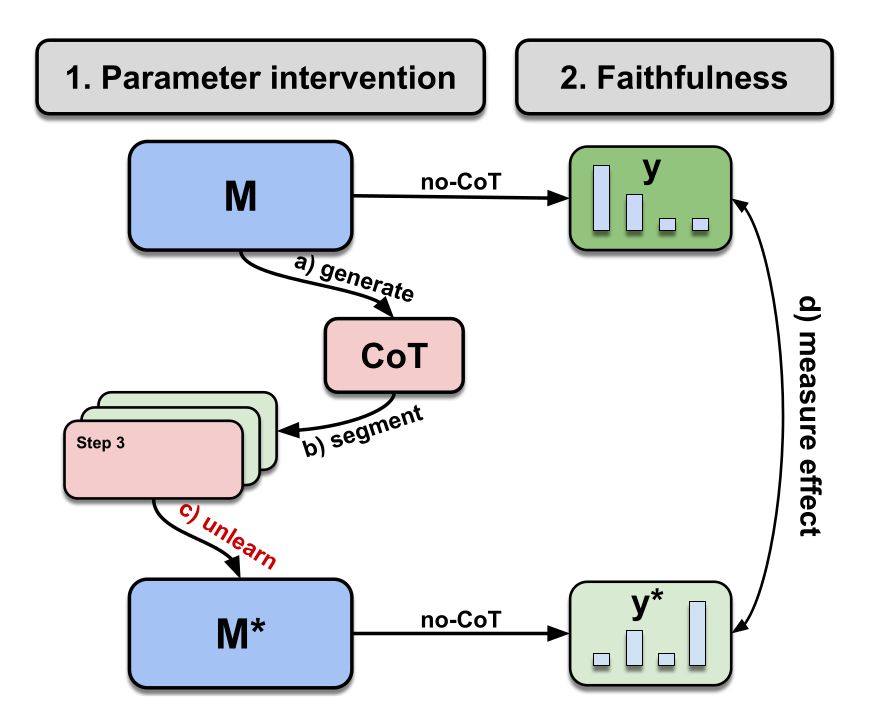

FUR is a parametric test assessing whether CoTs faithfully verbalize latent reasoning.

Is it 2018? Back to heatmaps :)

Efficacy quantifies the decrease in the target step sequence probability.

Specificity measures whether unlearning affects unrelated in-domain instances.

By comparing pre- and post-unlearning MMLU scores, we measure models' general capabilities.
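
A rough sketch of how these three quantities could be computed. The exact definitions are assumptions here: efficacy is taken as the relative drop in sequence probability, specificity as the fraction of unrelated instances whose prediction stays the same, and the capability check as a plain accuracy difference. All function names are hypothetical.

```python
import math


def efficacy(logp_before, logp_after):
    """Relative decrease in the target step sequence probability.

    Inputs are sequence log-probabilities before and after unlearning.
    Returns 0.0 if nothing changed and approaches 1.0 as the step becomes
    improbable (assumed definition).
    """
    return 1.0 - math.exp(logp_after - logp_before)


def specificity(preds_before, preds_after):
    """Fraction of unrelated in-domain instances with unchanged predictions."""
    if len(preds_before) != len(preds_after):
        raise ValueError("prediction lists must have the same length")
    if not preds_before:
        raise ValueError("need at least one instance")
    unchanged = sum(b == a for b, a in zip(preds_before, preds_after))
    return unchanged / len(preds_before)


def capability_change(mmlu_before, mmlu_after):
    """Difference in MMLU accuracy; values near zero suggest capabilities are preserved."""
    return mmlu_after - mmlu_before
```

For example, `specificity(["A", "B", "C", "D"], ["A", "B", "C", "A"])` gives 0.75, since one of the four unrelated predictions flipped.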

For such approaches, the model could still be able to recover corrupted information from its parameters, confounding the conclusions.
