mitscha.github.io

huggingface.co/collections/...

HF model collection for OpenCLIP and timm:

huggingface.co/collections/...

And of course big_vision checkpoints:

github.com/google-resea...

arxiv.org/abs/2502.14786

HF blog post from @arig23498.bsky.social et al. with a gentle intro to the training recipe and a demo:

huggingface.co/blog/siglip2

Thread with results overview from Xiaohua (only on X, sorry - these are all in the paper):

x.com/XiaohuaZhai/...

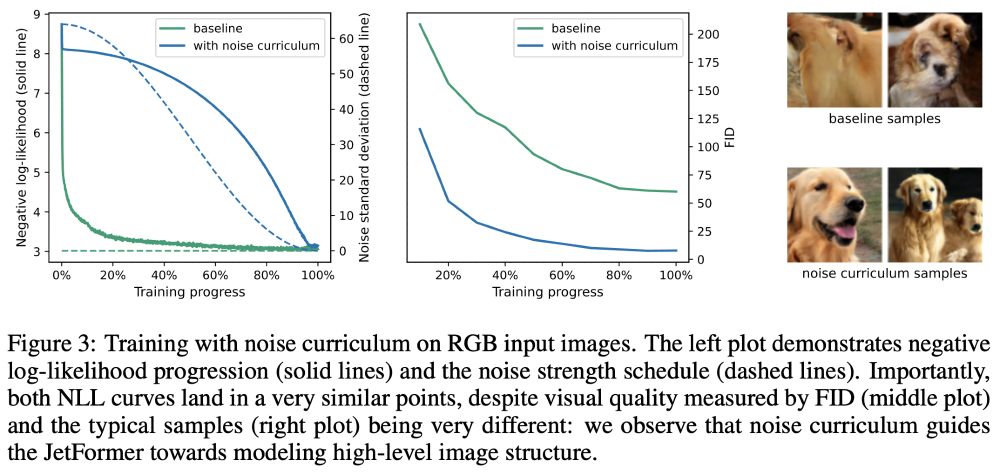

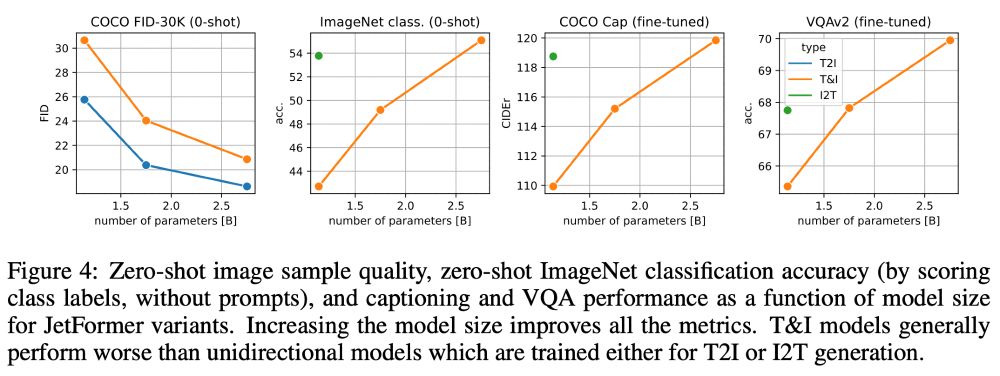

So far we explored a simple setup (image/text pairs, no post-training), and hope JetFormer inspires more (visual) tokenizer-free models!

7/

- They can induce information loss (e.g. small text)

- Removing specialized components was a key driver of recent progress (bitter lesson)

- Raw likelihoods are comparable across models (for hill climbing, scaling laws)

6/

5/

4/

We train JetFormer to maximize the likelihood of the multimodal data, without auxiliary losses (perceptual or similar).

3/

arxiv.org/abs/2312.02116

2/
