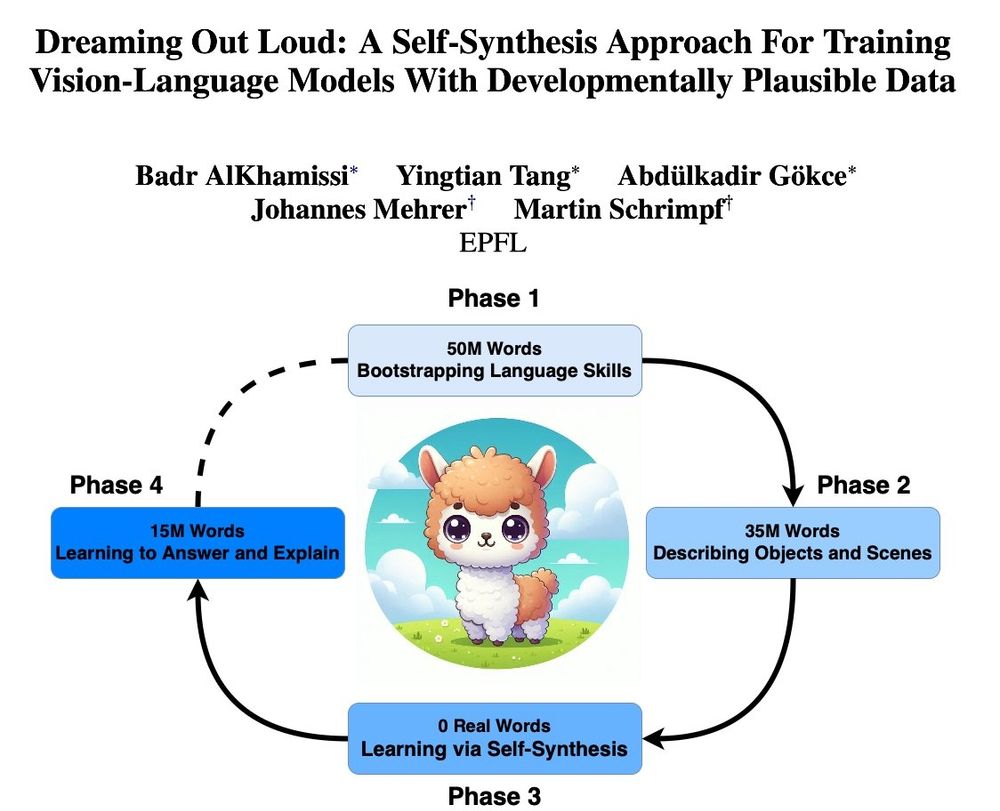

Our model learns over four phases, most crucially by self-captioning unseen images to generate synthetic language data.

arxiv.org/abs/2411.00828

#NeuroAI #neuroscience #language