Transformer patches don't need to be uniform in size: choose patch sizes based on entropy for faster training and inference. Are scale-spaces going to make a comeback?

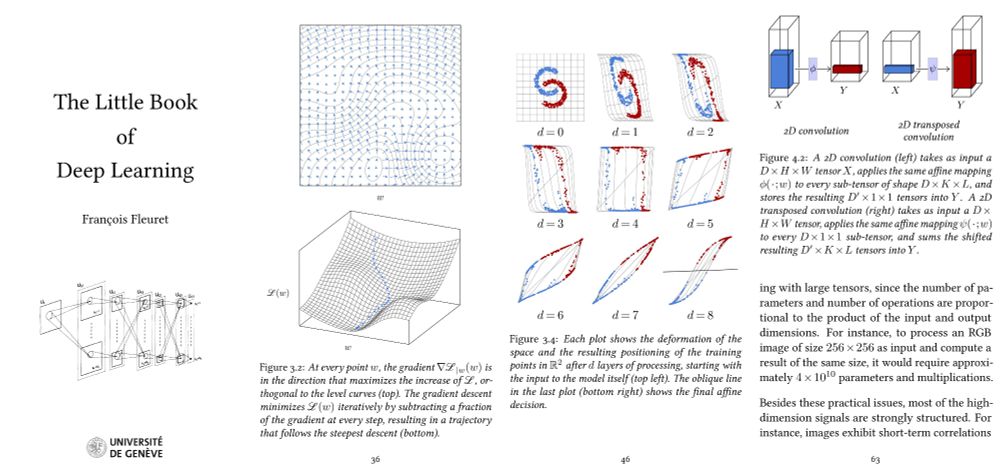
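The post doesn't say how entropy is measured or how patch sizes are chosen. Here is a minimal sketch of one simple way to get entropy-driven patch sizes. It assumes a quadtree over a square grayscale image whose side is a power of two. A block stays a single patch when the Shannon entropy of its pixel values is at or below a threshold; otherwise it is split into four quadrants. `adaptive_patches`, `threshold` and `min_size` are hypothetical names, and this is not necessarily the referenced work's method.

```python
import math
from collections import Counter


def entropy(values):
    """Shannon entropy in bits of a list of discrete pixel values."""
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())


def block(img, y, x, size):
    """Flatten the size x size block of img with top-left corner (y, x)."""
    return [img[y + i][x + j] for i in range(size) for j in range(size)]


def adaptive_patches(img, y, x, size, min_size, threshold):
    """Return (y, x, size) patches: low-entropy blocks stay large,
    high-entropy blocks are split into quadrants down to min_size."""
    if size <= min_size or entropy(block(img, y, x, size)) <= threshold:
        return [(y, x, size)]
    half = size // 2
    patches = []
    for dy in (0, half):
        for dx in (0, half):
            patches += adaptive_patches(img, y + dy, x + dx, half, min_size, threshold)
    return patches


# Hypothetical 8x8 image: flat left half, checkerboard right half.
img = [[0 if j < 4 else (i + j) % 2 for j in range(8)] for i in range(8)]
patches = adaptive_patches(img, 0, 0, 8, min_size=2, threshold=0.5)
print(len(patches))  # 10, versus 16 uniform 2x2 patches
```

The flat left half becomes two 4x4 patches instead of eight 2x2 ones, so the sequence gets shorter. Under this assumption, that shorter sequence is where the training and inference savings would come from.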

* math: precision matters

* knowledge: effective parameter count matters more than precision

* 4B-8bit threshold: above it, prefer quantization; below it, prefer more parameters

* parallel test-time compute (TTC) only pays off above 4B-8bit

arxiv.org/abs/2510.10964
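The bullets don't define "parallel TTC". One common form of parallel test-time compute is self-consistency: sample several reasoning traces independently, then take a majority vote over their final answers. Below is a minimal sketch that assumes this scheme. The paper may aggregate samples differently, and `majority_vote` is a hypothetical helper.

```python
from collections import Counter


def majority_vote(answers):
    """Most common final answer across N parallel samples.

    Counter.most_common orders equal counts by first occurrence,
    so ties go to the answer that appeared first.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    return Counter(answers).most_common(1)[0][0]


# Five hypothetical sampled answers to one AIME-style question.
print(majority_vote(["42", "41", "42", "7", "42"]))  # 42
```

Each extra sample needs its own KV cache. Parallel sampling therefore draws on the same memory budget as the weights.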

![A scatter plot titled “AIME25 — Total Memory vs. Accuracy (Qwen3)” compares model accuracy (%) against total memory usage (weights + KV cache, in GB) for various Qwen3 model sizes and quantization levels.

Axes:

• X-axis: Total Memory (Weight + KV Cache) [GB] (log scale, ranging roughly from 1 to 100)

• Y-axis: Accuracy (%), ranging from 0 to 75

Legend:

• Colors: model sizes —

• 0.6B (yellow)

• 1.7B (orange)

• 4B (salmon)

• 8B (pink)

• 14B (purple)

• 32B (blue)

• Shapes: precision levels —

• Circle: 16-bit

• Triangle: 8-bit

• Square: 4-bit

• Marker size: context length —

• Small: 2k tokens

• Large: 30k tokens

Main trend:

Larger models (rightward and darker colors) achieve higher accuracy but require significantly more memory. Smaller models (left, yellow/orange) stay below 30% accuracy. Compression (8-bit or 4-bit) lowers memory usage but can reduce accuracy slightly.

Inset zoom (upper center):

A close-up box highlights the 8B (8-bit) and 14B (4-bit) models showing their proximity in accuracy despite differing memory footprints.

Overall, the chart demonstrates scaling behavior for Qwen3 models—accuracy grows with total memory and model size, with diminishing returns beyond the 14B range.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:ckaz32jwl6t2cno6fmuw2nhn/bafkreiatqdqcrxckrhsruoka6llaggjdsr3cpgspql5lnn7kttwrx3q7ga@jpeg)
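The chart's x-axis is the sum of weight memory and KV-cache memory. Below is a back-of-the-envelope sketch of that sum. It rests on these assumptions:

* weights take `params × bits / 8` bytes
* the cache stores one K and one V vector per layer, KV head and token, at 16 bits; weight quantization is assumed not to touch the cache
* GB means 10^9 bytes

The layer and head counts are hypothetical placeholders, not the actual Qwen3 configs; read those from each model's config file.

```python
def weight_gb(params_billion, weight_bits):
    """Weight memory in GB (10**9 bytes): params * bits / 8."""
    return params_billion * weight_bits / 8


def kv_cache_gb(n_layers, n_kv_heads, head_dim, tokens, kv_bits=16, n_samples=1):
    """KV-cache memory in GB: one K and one V vector per layer, KV head and
    token, for each of n_samples parallel sequences."""
    values = 2 * n_layers * n_kv_heads * head_dim * tokens * n_samples
    return values * kv_bits / 8 / 1e9


def total_memory_gb(params_billion, weight_bits, tokens, n_samples=1,
                    n_layers=36, n_kv_heads=8, head_dim=128):
    """Weights + KV cache. Default architecture numbers are hypothetical."""
    return weight_gb(params_billion, weight_bits) + kv_cache_gb(
        n_layers, n_kv_heads, head_dim, tokens, n_samples=n_samples)


# Same hypothetical architecture, short vs long context.
for tokens in (2_000, 30_000):
    print(tokens, round(total_memory_gb(8, 8, tokens), 2))
```

With these assumed numbers, moving from 2k to 30k tokens adds roughly 4.1 GB on top of 8 GB of 8-bit weights. Passing `n_samples=8` multiplies the cache term by 8, which is the memory price of parallel test-time compute in this accounting.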

youtu.be/e5kDHL-nnh4

I wanted to see how the exact same post would perform on both X (Twitter) and Bluesky.

The results were...interesting...

[Thread]

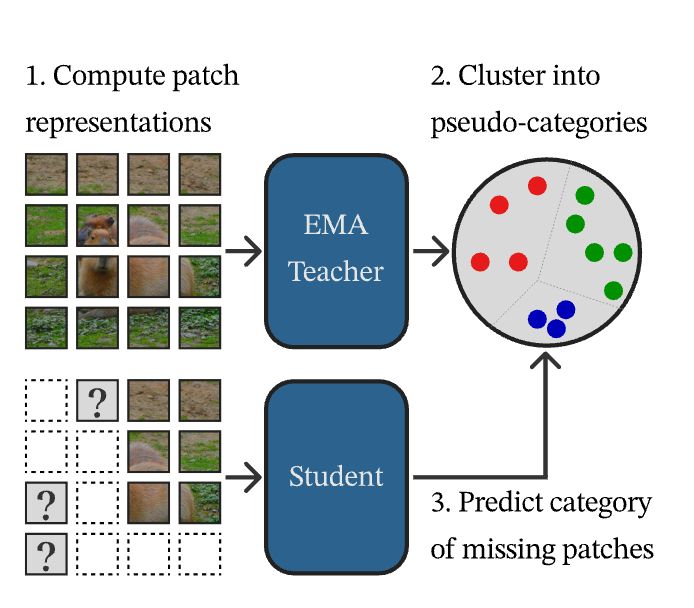

CAPI: Cluster and Predict Latent Patches for Improved Masked Image Modeling.

code: github.com/verlab/accel...

paper: arxiv.org/abs/2404.19174

project: www.verlab.dcc.ufmg.br/descriptors/...

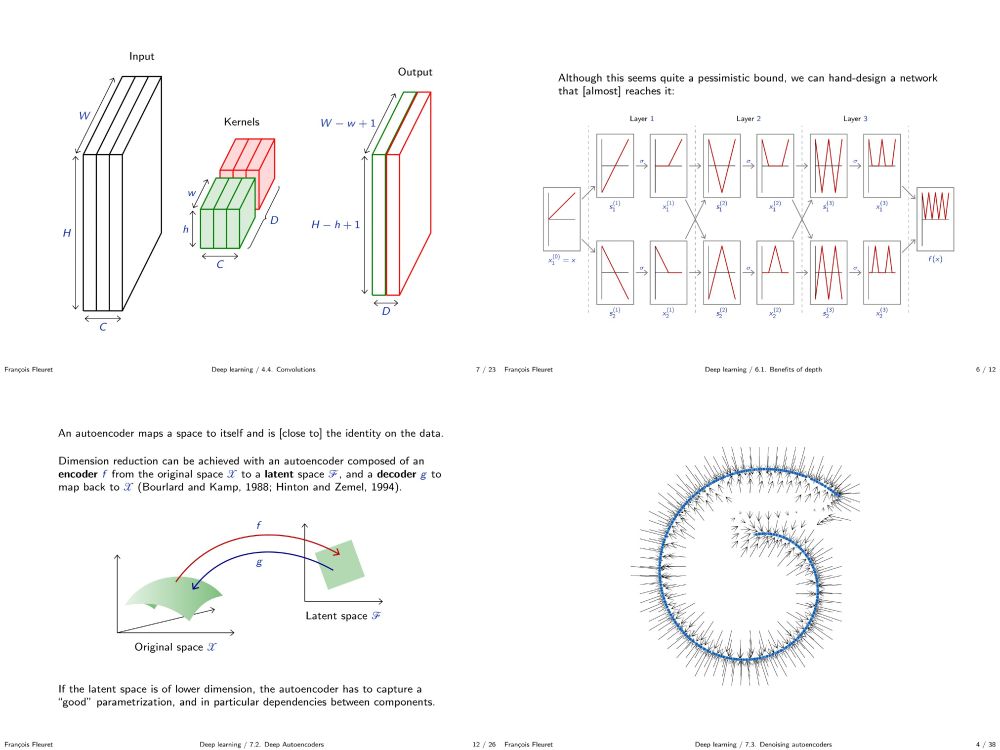

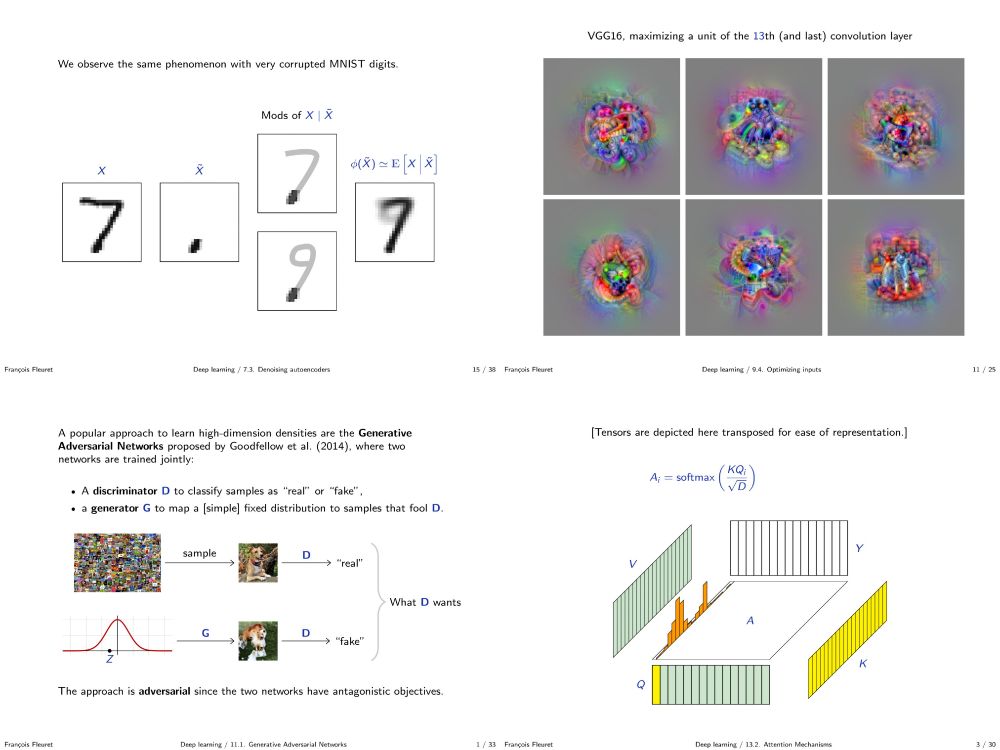

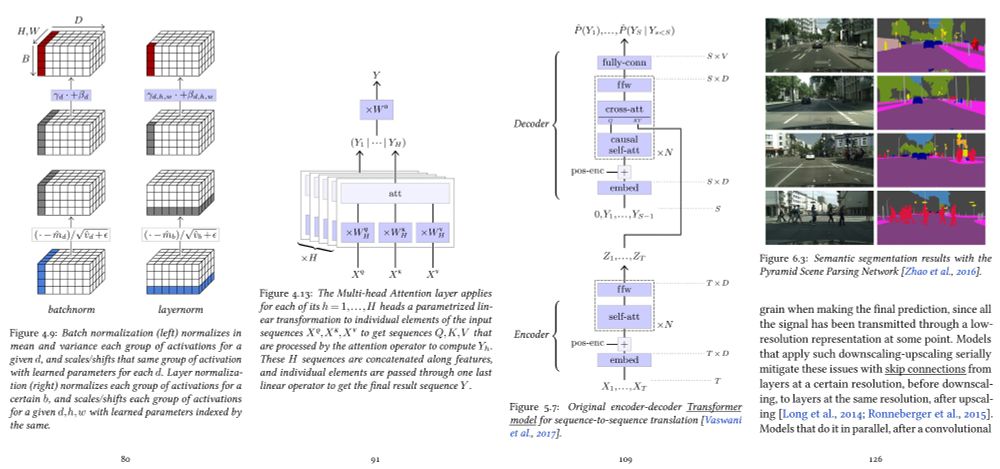

fleuret.org/dlc/

And my "Little Book of Deep Learning" is available as a phone-formatted pdf (nearing 700k downloads!)

fleuret.org/lbdl/

Right now, it only has atproto docs, but it has already been useful to me for answering random questions about the project.

github.com/davidgasquez...
