bsky.app/profile/did:...

In our #Interspeech2025 paper, we introduce AuriStream: a simple, causal model that learns phoneme, word & semantic information from speech.

Poster P6, tomorrow (Aug 19) at 1:30 pm, Foyer 2.2!

We are looking for native speakers of Spanish or Chinese who have an advanced level of English.

💸 Compensation provided

✅ Check the flyer for eligibility

📲 Scan the QR code to get in touch.

Feel free to share this news!

#linguistics #paidstudy

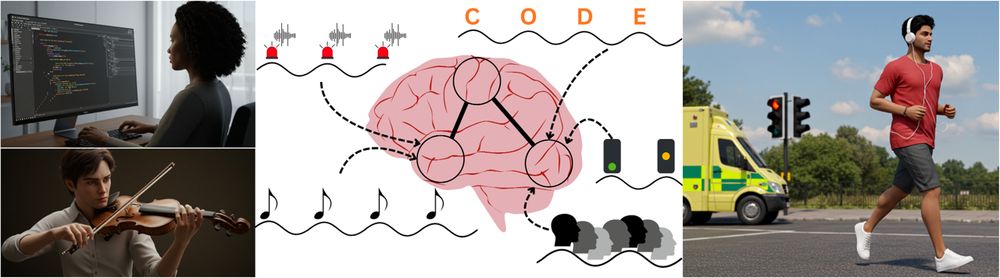

🧠 Researchers at the University of Manchester want to recruit normal-hearing volunteers aged 18-50 who are native English speakers to take part in research that will help us understand different aspects of listening in noise.

#hearinghealth #research

Brain rhythms in cognition -- controversies and future directions

arxiv.org/abs/2507.15639

#neuroscience

bsky.app/profile/sfnj...

vist.ly/3n7ycdj

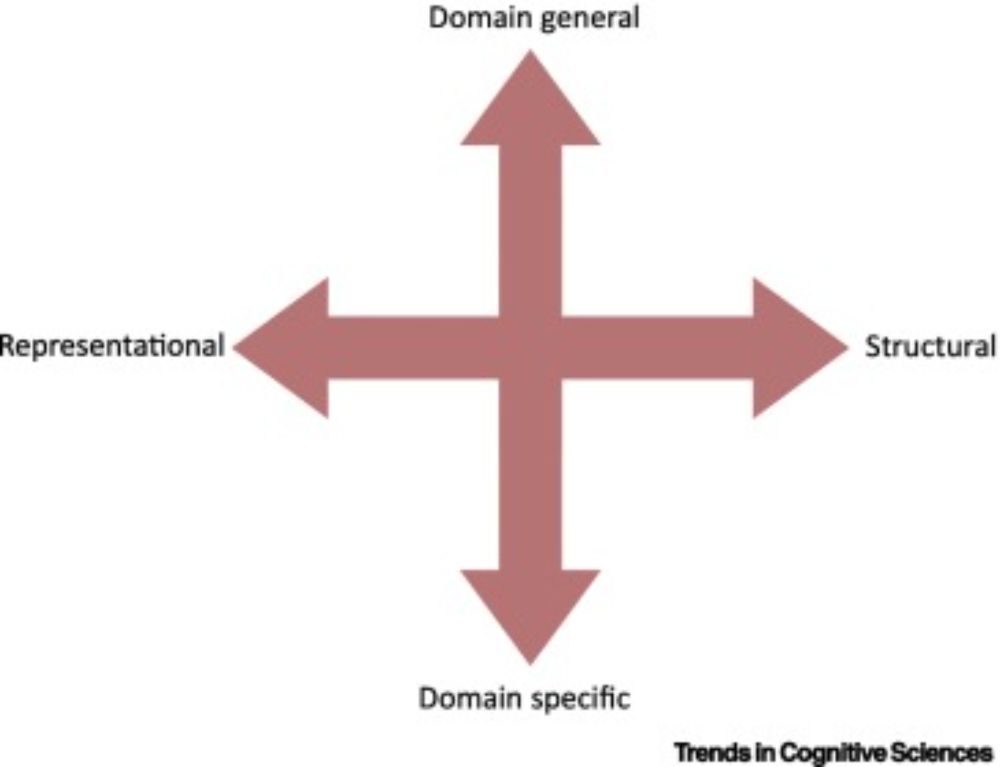

www.cell.com/trends/cogni... @mpi-nl.bsky.social

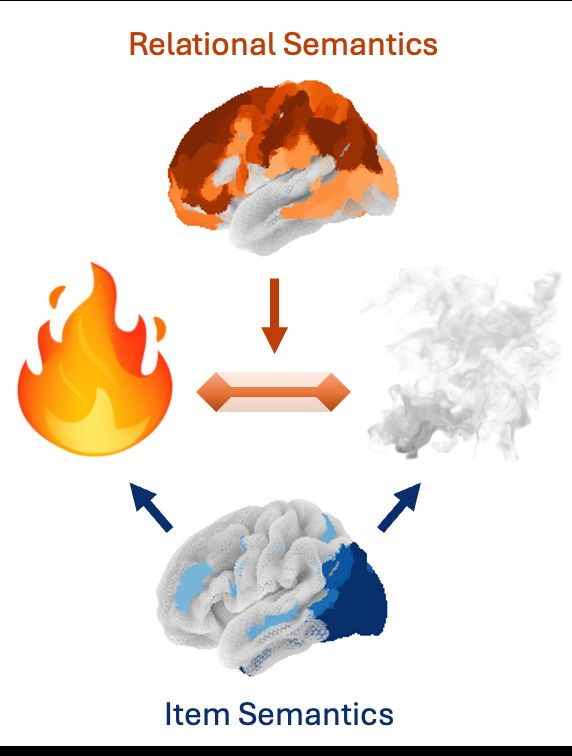

Prior work has mapped how the brain encodes concepts: If you see fire and smoke, your brain will represent the fire (hot, bright) and smoke (gray, airy). But how do you encode features of the fire-smoke relation? We analyzed fMRI data with embeddings extracted from LLMs to find out 🧵

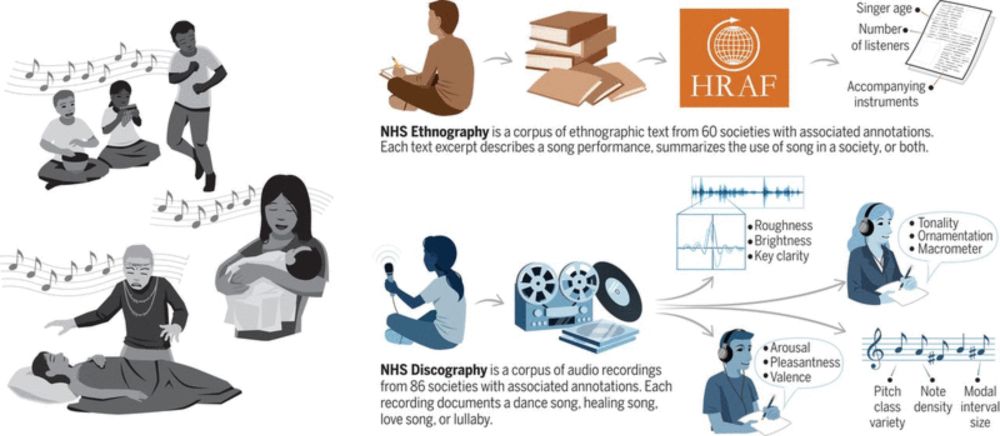

www.nature.com/articles/s42...

www.science.org/doi/10.1126/...

#neuroscience

brand-new paper in Computational Brain & Behavior with @andreaeyleen.bsky.social at @mpi-nl.bsky.social

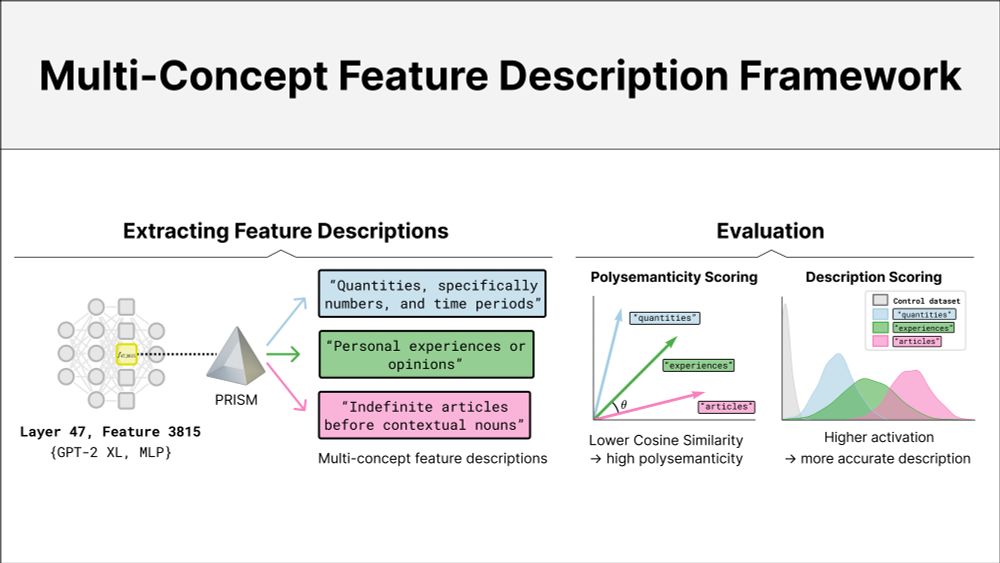

We introduce PRISM, a framework for extracting multi-concept feature descriptions to better understand polysemanticity.

📄 Capturing Polysemanticity with PRISM: A Multi-Concept Feature Description Framework

arxiv.org/abs/2506.15538

🧵 (1/7)

Are sensory sampling rhythms fixed by intrinsically determined processes, or do they couple to external structure? Here we highlight the incompatibility between these accounts and propose a resolution [1/6]

Inharmonicity enhances brain signals of attentional capture and auditory stream segregation

www.biorxiv.org/content/10.1...

#EEG

#neuroscience

#neuroskyence

Tracking the rate of periodicity is achievable without sharp acoustic edges or consistent phase alignment to the envelope. This is consistent with distinct processes for phase tracking and rate tracking.

www.jneurosci.org/content/45/2...

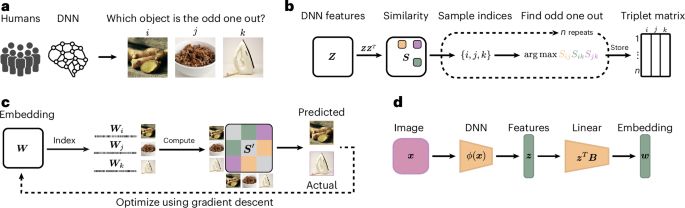

New preprint from Leonard E. van Dyck, @martinhebart.bsky.social @kathadobs.bsky.social

www.biorxiv.org/content/10.1...

#neuroskyence

What makes lexical tones challenging for L2 learners? Previous studies suggest that phonological universals are at play... In our perceptual study, we found little evidence for these universals.

www.eneuro.org/content/earl...

this review lays out what I think the fundamental specializations are for music perception in humans, namely, the hierarchical processing of pitch and rhythm

or, how our minds turn vibrating air into music

authors.elsevier.com/a/1lG9G_V1r-...

Excited to share our new paper just out in Scientific Reports!

🧠🎧 Using intracranial EEG, we show how the human brain automatically encodes patterns in random sounds, without attention or explicit awareness.

🔗 doi.org/10.1038/s415...