get started with SOTA VLMs (Gemma 3, Qwen2.5-VL, InternVL3 & more) and serve them wherever you want 🤩

learn more github.com/ggml-org/lla... 📖

We have shipped a how-to guide for visual document retrieval (VDR) models in Hugging Face transformers 🤗📖 huggingface.co/docs/transfo...

They work like ColPali but are highly scalable and fast, and you can make them even more efficient with binarization or matryoshka truncation, with little degradation 🪆⚡️

I collected some here huggingface.co/collections/...
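To make the binarization and matryoshka tricks above concrete, here is a minimal plain-Python sketch on single-vector embeddings. The 8-dim vectors are made-up toy numbers, not real model outputs; real VDR embeddings are much larger and you would normally use numpy or a vector database. Truncation only keeps quality if the model was trained with a Matryoshka-style objective.

```python
import math

def normalize(v: list[float]) -> list[float]:
    # Scale to unit length so a dot product equals cosine similarity
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def matryoshka_truncate(v: list[float], dim: int) -> list[float]:
    # Keep only the first `dim` values and re-normalize; this only preserves
    # quality if the model was trained with a Matryoshka-style loss
    return normalize(v[:dim])

def binarize(v: list[float]) -> int:
    # 1 bit per dimension (positive -> 1, else 0), packed into a Python int
    bits = 0
    for i, x in enumerate(v):
        if x > 0:
            bits |= 1 << i
    return bits

def hamming_similarity(a: int, b: int, dim: int) -> float:
    # Fraction of dimensions whose sign bits agree
    return 1 - bin(a ^ b).count("1") / dim

def cosine(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))

# Toy 8-dim embeddings (made-up numbers, purely illustrative)
query = [0.9, -0.2, 0.4, 0.1, -0.5, 0.3, 0.05, -0.1]
page = [0.8, -0.1, 0.5, -0.2, -0.4, 0.2, 0.1, 0.05]

full_score = cosine(query, page)
short_score = cosine(matryoshka_truncate(query, 4), matryoshka_truncate(page, 4))
binary_score = hamming_similarity(binarize(query), binarize(page), len(query))
print(round(full_score, 3), round(short_score, 3), binary_score)
```

Storing one bit per dimension instead of a 32-bit float shrinks the index 32x; a common pattern is to shortlist candidates with the binary scores and rescore that shortlist with full-precision vectors.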

get started ⤵️

> filter models by the provider that serves them

> test them through the widget or with Python/JS/cURL

collection is here huggingface.co/collections/...

RolmOCR-7B follows the same recipe as OlmOCR: it builds on Qwen2.5-VL with a modified training set and improves accuracy & performance 🤝

huggingface.co/reducto/Rolm...

the paper introduces an interesting pre-training pipeline to handle long context, and the model saw 4.4T tokens arxiv.org/pdf/2504.07491

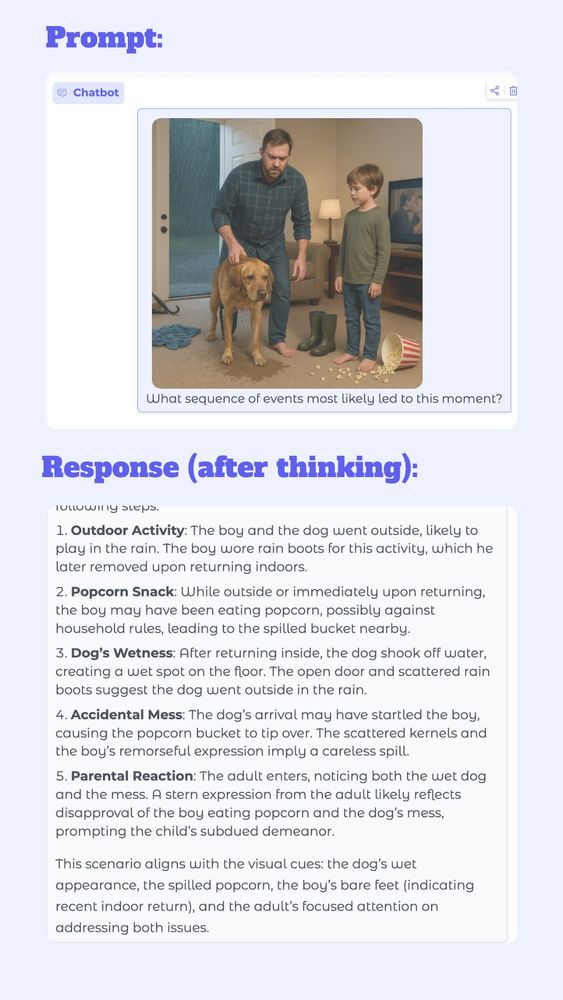

Kimi-VL-A3B-Thinking is the first ever capable open-source reasoning VLM with an MIT license ❤️

> it has only 2.8B activated params 👏

> it's agentic 🔥 and works on GUIs

> surpasses GPT-4o

I've put it to the test (see below ⤵️) huggingface.co/spaces/moons...

> 7 checkpoints in various sizes (1B to 78B)

> Built on the InternViT encoder and (mostly) Qwen2.5 LLM decoders, improves on Qwen2.5-VL

> Handles reasoning and document tasks, and extends to tool use and agentic capabilities 🤖

> easy to use with Hugging Face transformers 🤗 huggingface.co/collections/...

take your favorite VDR model out for multimodal RAG 🤝
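For the retrieval half of multimodal RAG, a minimal sketch: assuming the page images and the query have already been embedded by a single-vector VDR model, rank pages by cosine similarity and hand the top-k page images plus the question to a VLM. The file names and 3-dim embeddings are hypothetical placeholders.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def retrieve(query_emb: list[float], page_embs: dict[str, list[float]], k: int = 2) -> list[str]:
    # Score every page against the query and keep the k best page ids
    ranked = sorted(page_embs.items(), key=lambda kv: cosine(query_emb, kv[1]), reverse=True)
    return [page_id for page_id, _ in ranked[:k]]

# Hypothetical precomputed embeddings (would come from a VDR model)
page_embs = {
    "invoice_p1.png": [0.9, 0.1, 0.0],
    "report_p3.png": [0.1, 0.9, 0.2],
    "slides_p7.png": [0.7, 0.3, 0.1],
}
query_emb = [1.0, 0.2, 0.0]

top_pages = retrieve(query_emb, page_embs, k=2)
# Generation step (not shown): pass the images in `top_pages` and the
# question to a VLM of your choice
print(top_pages)
```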

> Link to all models, datasets, demos huggingface.co/collections/...

> Text-readable version is here huggingface.co/posts/merve/...

@llamaindex.bsky.social released vdr-2b-multi-v1

> uses 70% fewer image tokens, yet outperforms other dse-qwen2-based models

> 3x faster inference with less VRAM 💨

> shrinkable with matryoshka 🪆

huggingface.co/collections/...
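The post doesn't say how the image-token reduction is achieved, so this is only an illustration of why fewer tokens means faster inference and less VRAM. Assuming the Qwen2-VL-style image tokenization that dse-qwen2 models build on (14-pixel patches merged 2x2, so one token per 28x28-pixel block), the token count scales with image area; the page sizes below are hypothetical.

```python
def image_tokens(height: int, width: int, patch: int = 14, merge: int = 2) -> int:
    # Assumed Qwen2-VL-style tokenization: 14x14-pixel patches, then 2x2
    # patches merged into one token, i.e. one visual token per 28x28 block
    side = patch * merge
    if height % side or width % side:
        raise ValueError(f"resize so both sides are multiples of {side} px first")
    return (height // side) * (width // side)

full = image_tokens(1344, 1792)   # hypothetical page: 48 x 64 blocks
small = image_tokens(728, 980)    # same page downscaled: 26 x 35 blocks
reduction = 1 - small / full
print(full, small, f"{reduction:.0%}")
```

Halving each side of the image roughly quarters the number of tokens the language model has to process.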

Here's everything that was released; find a text-readable version here: huggingface.co/posts/merve/...

All models are here huggingface.co/collections/...

🔖 Model collection: huggingface.co/collections/...

🔖 Notebook on how to use: colab.research.google.com/drive/1e8fcb...

🔖 Try it here: huggingface.co/spaces/hysts...

The model is very interesting: it has a separate encoder for each modality (visual prompt, text prompt, image and video), then concatenates the encoded outputs and feeds them into the LLM 💬

the output segmentation tokens are passed to SAM2, so that text (captions or semantic classes) gets matched to masks ⤵️
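A toy sketch of the data flow described above, with strings standing in for embeddings. The per-modality encoders, their concatenation order and the `[SEG]` token name are assumptions for illustration, not the model's actual code; the real model feeds the hidden states at segmentation-token positions to SAM2's mask decoder.

```python
from typing import Callable

def build_llm_input(encoders: dict[str, Callable], inputs: dict) -> list[str]:
    # Run each modality through its own encoder, then concatenate the
    # resulting token sequences in an assumed fixed order
    sequence: list[str] = []
    for modality in ("image", "video", "visual_prompt", "text"):
        if modality in inputs:
            sequence.extend(encoders[modality](inputs[modality]))
    return sequence

def extract_seg_states(tokens: list[str], hidden: list[list[float]], seg_token: str = "[SEG]") -> list[list[float]]:
    # Hidden states at segmentation-token positions become prompts for the
    # mask decoder (SAM2 in the model described above)
    return [h for tok, h in zip(tokens, hidden) if tok == seg_token]

# Hypothetical stand-in encoders producing placeholder tokens
encoders = {
    "image": lambda img: [f"img_{i}" for i in range(3)],
    "visual_prompt": lambda box: ["vp_0"],
    "text": lambda s: s.split(),
}
seq = build_llm_input(encoders, {"image": "cat.jpg", "visual_prompt": (10, 20, 50, 60), "text": "segment the cat"})

# Pretend LLM output: one [SEG] token per object to segment
out_tokens = ["the", "cat", "[SEG]", "and", "dog", "[SEG]"]
hidden = [[0.1], [0.2], [0.9], [0.3], [0.4], [0.8]]
seg_states = extract_seg_states(out_tokens, hidden)
print(seq, seg_states)
```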

The models handle vision-language understanding and visual referring tasks (referring segmentation) for both images and videos ⏯️

@m--ric.bsky.social

> Blog: hf.co/blog/smolage...

> Quickstart: huggingface.co/docs/smolage...

writing and using a tool is very easy: just decorate the function with `@tool`

what's cooler is that you can push tools to and pull them from the Hugging Face Hub! see below
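What does decorating with `@tool` buy you? Roughly, it turns a plain function into an object carrying a name, a description and typed inputs that the agent can show to the LLM. The toy decorator below mimics that idea with `inspect` and type hints; it is a hypothetical stand-in, not smolagents' implementation (the real one also parses per-argument descriptions from the docstring, so check the Quickstart for its exact requirements).

```python
import inspect
from typing import Callable, get_type_hints

class Tool:
    # Toy tool wrapper: metadata for the LLM plus the callable itself
    def __init__(self, fn: Callable):
        self.fn = fn
        self.name = fn.__name__
        self.description = inspect.getdoc(fn) or ""
        hints = get_type_hints(fn)
        self.output_type = hints.pop("return", None)
        self.inputs = {arg: t.__name__ for arg, t in hints.items()}

    def __call__(self, *args, **kwargs):
        return self.fn(*args, **kwargs)

def tool(fn: Callable) -> Tool:
    # Hypothetical decorator mimicking the idea of smolagents' @tool
    return Tool(fn)

@tool
def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from Celsius to Fahrenheit."""
    return celsius * 9 / 5 + 32

print(celsius_to_fahrenheit.name, celsius_to_fahrenheit.inputs, celsius_to_fahrenheit(100))
```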

Just initialize it with the tools and the model of your choice

See below how to get started; you can use the models through the HF Inference API as well as locally!

LLMs can already write code and reason, so why bother writing the tool yourself?

The CodeAgent class is here for that! see it in action below
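A toy version of the code-agent loop the CodeAgent idea is built around: the model writes Python as text, the agent executes it with the tools in scope, printed output goes back as an observation, and calling `final_answer` ends the run. The scripted `fake_llm` and the `get_weather` tool are hypothetical stand-ins; this is a sketch of the pattern, not smolagents' implementation.

```python
import contextlib
import io
from typing import Callable

def fake_llm(task: str, history: list[str]) -> str:
    # Hypothetical stand-in for a real model: it "writes" Python code as text
    if not history:
        return "result = get_weather('Paris')\nprint(result)"
    return "final_answer(f'It is {result} in Paris')"

def get_weather(city: str) -> str:
    # Hypothetical tool with a canned response
    return "sunny"

def run_code_agent(task: str, llm: Callable, tools: dict, max_steps: int = 5):
    answer: dict = {}

    def final_answer(value):
        answer["value"] = value

    # Tools are exposed to the generated code as plain Python functions
    namespace = {**tools, "final_answer": final_answer}
    history: list[str] = []
    for _ in range(max_steps):
        code = llm(task, history)
        buffer = io.StringIO()
        # WARNING: bare exec is unsafe for untrusted code; real agent libraries
        # run generated code in a restricted interpreter or a sandbox
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)
        if "value" in answer:
            return answer["value"]
        # Printed output goes back to the model as an observation
        history.append(f"Code:\n{code}\nObservation:\n{buffer.getvalue()}")
    return None

print(run_code_agent("What's the weather in Paris?", fake_llm, {"get_weather": get_weather}))
```

Letting the model emit code rather than one JSON tool call per step means it can chain several tool calls, loops and variables in a single action.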

however cool your LLM is, without being agentic it can only go so far

enter smolagents: a new agent library by @hf.co to make the LLM write code, do analysis and automate boring stuff! huggingface.co/blog/smolage...

QLoRA fine-tuning in 4-bit with a batch size of 4 fits in 32 GB of VRAM and is very fast! ✨

github.com/merveenoyan/...
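Why does 4-bit help so much? A back-of-envelope sketch of the two QLoRA ingredients: the frozen base weights stored in 4 bits, and small trainable low-rank adapters. The post doesn't state the model size, so the 7B parameter count, 4096 hidden size and rank 8 below are hypothetical. Activations (which grow with batch size), adapter optimizer states and quantization constants come on top of these numbers.

```python
def weight_memory_gb(n_params: float, bits: int) -> float:
    # bytes = params * bits / 8, reported in decimal GB
    return n_params * bits / 8 / 1e9

def lora_params(d_in: int, d_out: int, rank: int) -> int:
    # LoRA trains A (rank x d_in) and B (d_out x rank) instead of the full matrix
    return rank * (d_in + d_out)

n_params = 7e9  # hypothetical 7B-parameter model
print(weight_memory_gb(n_params, 16))  # 14.0 (GB in 16-bit)
print(weight_memory_gb(n_params, 4))   # 3.5 (GB in 4-bit)
print(lora_params(4096, 4096, 8))      # 65536 trainable params vs 16.7M frozen
```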

I have been looking forward to this feature, since I felt most back-to-back releases were overwhelming and I tended to miss out 🤠