Meredith Whittaker

@meredithmeredith.bsky.social

President of Signal, Chief Advisor to AI Now Institute

LOL all morning picturing some finance quant slouched at his desk prompting Dalle, like, "make me a picture of pikachu having sex with the Apple logo" or whatever tf those guys use these things for, then throwing up his hands like "WHY WON'T THIS FIND ALPHA?!"

www.bloomberg.com/news/article...

October 16, 2025 at 12:39 PM

New speakers. I'm officially a dad.

September 23, 2025 at 8:42 AM

📣 NEW -- In The Economist, discussing the privacy perils of AI agents and what AI companies and operating systems need to do--NOW--to protect Signal and much else!

www.economist.com/by-invitatio...

September 9, 2025 at 11:44 AM

New Sunday Times profile in which I succeed, like a fencer in a 2hr marathon match, in fending off Qs abt my personal life & consistently turning focus back to my work & ideas.

(Contra the interviewer's claim, many ppl do know me! They're called friends, & you know who you are ❤️)

archive.is/BtZD0

August 11, 2025 at 3:46 PM

Just saying...

![A screenshot of an excerpt from the interview, which reads: "The world has been pretty chaotic for a while now, but it feels like in the last couple of years it has only sharpened. How important is Signal in this current moment?

People want privacy. People are creeped out. People are uneasy. People recognize that the status quo in tech is not safe or savory, and for whatever reason they are trying to find, and in the case of Signal are finding, alternatives that actually give them meaningful privacy.

Anyone who does human rights work or investigative journalism understands that in many cases, it is the difference between life and death. We know throughout history that centralized power constitutes such power via information asymmetry. The more they know, the more stable and lasting their power is. This is the type of domination through knowledge that makes or breaks empires. Ultimately, we are in a world in which the power to know us has been ceded to the tech industry. So ensuring [privacy] in a world where the authority to know us has been ceded to private actors who may or may not cooperate with one or another regime, who may choose to use that data to manipulate or to harm us or to exclude us from access to resources, is existentially important. This is the basis on which I claim, without flinching, that Signal is the most important technical infrastructure in the world right now."](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:so5r7asbd26pmnnoerksklor/bafkreigvh5e3l2w2noxfc4lvlyfizhoalxqcvdue22lu4genaq7qyb2b7u@jpeg)

June 30, 2025 at 1:09 PM

'Meredith,' some guys ask, 'why won't you shove AI into Signal?'

Because we love privacy, and we love you, and this shit is predictable and unacceptable. Use Signal ❤️

June 19, 2025 at 7:59 AM

Use Signal. We promise, no AI clutter, and no surveillance ads, whatever the rest of the industry does. <3

June 16, 2025 at 3:30 PM

Tomorrow, Thur May 29, Nuits sonores, Lyon France!

I'm coming to dance, I'm coming to party, I'm coming to eat, but first I'm sitting down to talk about tech, privacy, Signal, and what it takes to make a world worth living in <3

May 28, 2025 at 9:43 AM

Shot shot, shot missed

March 1, 2025 at 12:33 AM

Stand by this: www.politico.com/newsletters/...

February 19, 2025 at 4:42 PM

February 6, 2025 at 2:24 PM

February 4, 2025 at 9:19 AM

New Year's resolutions ✨

January 2, 2025 at 7:02 PM

This gets more and more interesting the deeper in I go...

November 23, 2024 at 4:07 PM

Anyone spending the brief liminal window between Christmas and Gregorian new year in Hamburg, with the hackers, at CCC?

I am! And I'll be presenting new research and a new talk ☺️

halfnarp.events.ccc.de

November 18, 2024 at 8:28 AM

Opened a years old doc called "data" and found a poem

November 17, 2024 at 1:44 PM

New Paper! w/@HeidyKhlaaf + @sarahbmyers. We put the narrative on AI risks & nat'l security under a microscope, finding that the focus on hypothetical AI bioweapons is warping policy and ignoring the real & serious harms of current AI use in surveillance, targeting, etc. 1/

October 22, 2024 at 3:50 PM

Difficult to take Big Tech's concerns about "AI disinfo" seriously when...

...behold, Google Gemini!

(the non answer re Palestine would need to be intentionally built into the system, as would the "double checked" cert-via-highlighting of the text that de-maps Palestine.)

March 4, 2024 at 8:59 PM

If I wanted court drama I'd read Stendhal, who understood how power works and spent hundreds of pages illuminating characters whose desire for it blinded them to this reality.

Or, it was Microsoft all along...

November 20, 2023 at 6:48 PM

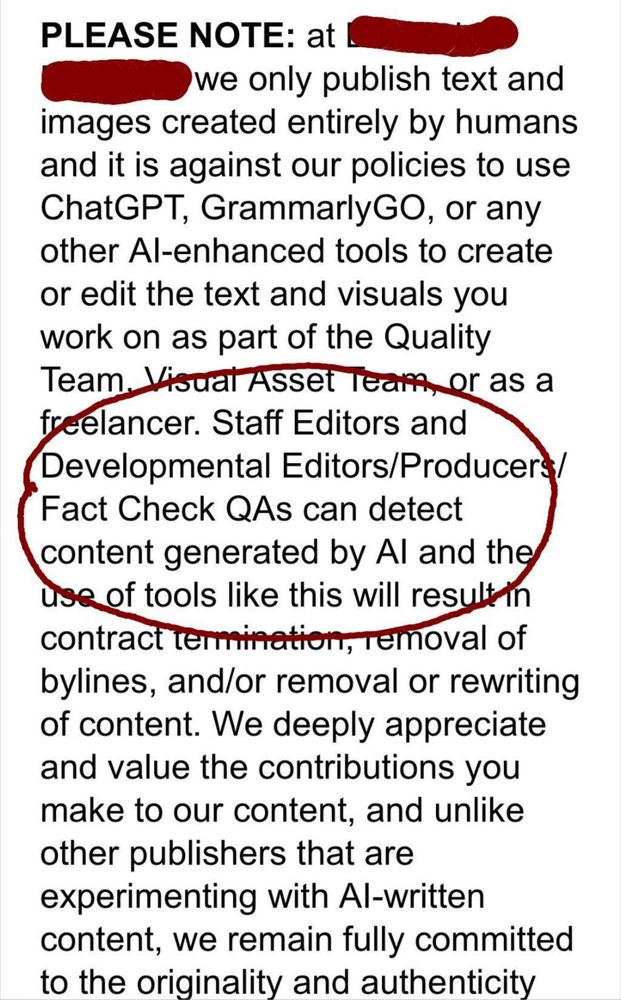

Someone shared a writing contract where the outlet says they can detect AI generated writing. Ofc there's no reliable way to do this.

Bespoke AI guardrails amounting to a parent telling a kid "I can always tell when you lie" hoping credulity & fear lead them never to test it.

September 9, 2023 at 12:49 PM

📢NEW PAPER!

Where @davidthewid, @sarahbmyers & I unpack what Open Source AI even is.

We find that the terms ‘open’ & ‘open source’ are often more marketing than technical descriptor, and that even the most 'open' systems don't alone democratize AI 1/

papers.ssrn.com/sol3/papers.cf…

August 17, 2023 at 8:09 PM

Ordinary harms (like replicating and naturalizing structures of marginalization that entrench historical inequality) can be outweighed by ordinary benefits (like tech guys getting rich). What Hinton is worried about is existential risk (ghost stories).

May 3, 2023 at 12:55 AM

This is such a powerful example. And TBH one of the best ways to "regulate" AI: organized workers demanding dignified and safe working conditions, rejecting the idea that AI-enabled degradation of work is inevitable!

May 2, 2023 at 1:12 PM
