Teaching material: https://github.com/MCKnaus/causalML-teaching

Homepage: mcknaus.github.io

Premium tenure-track (assistant to full professor) in "𝐌𝐋 𝐌𝐞𝐭𝐡𝐨𝐝𝐬 𝐢𝐧 𝐁𝐮𝐬𝐢𝐧𝐞𝐬𝐬 𝐚𝐧𝐝 𝐄𝐜𝐨𝐧𝐨𝐦𝐢𝐜𝐬" @unituebingen.bsky.social made possible by @ml4science.bsky.social

Spread the word and feel free to reach out if you have any questions about the position or the environment.

Link: shorturl.at/QHZ5G

Check it out:

mcknaus.github.io/OutcomeWeigh...

#causalSky #causalML

Of course this is unnecessarily complicated, but instructive.

It is so obvious where the policy change happens that it is not even indicated...

My Causal ML students draw something using two potential outcome functions and, as a bonus assignment, see how causal forest approximates the resulting CATE function. Wanna join the challenge? Share your art in the replies

#rstats #CausalSky #EconSky

Outcome weights are available for Causal ML, building a nice bridge to propensity score methods.

The findings regarding weights properties raise questions that I won't answer in this paper.

For now I am happy to provide you with a framework and results telling you that if you want the weight…

The formal details are boring and in the paper BUT here is an appetizer.

Note that only fully-normalized estimators estimate an effect of 1 if the outcome is simulated to be Y = 1 + D.

I run default DoubleML/grf functions, and only AIPW with random forests recovers the true effect of one.
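A minimal sketch of why normalization matters, using plain inverse probability weighting as a simple stand-in (not the DoubleML/grf estimators from the post). The unnormalized Horvitz-Thompson weights do not sum to exactly 1 and -1 in a given sample, while the normalized (Hájek) weights do by construction, so only the latter returns exactly 1 for Y = 1 + D. The data-generating process is hypothetical, and the true propensity score is used for simplicity.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 1000
x = rng.normal(size=n)
e = 1 / (1 + np.exp(-x))   # true propensity score, assumed known here
D = rng.binomial(1, e)
Y = 1 + D                  # noiseless outcome, true effect = 1

# Horvitz-Thompson IPW: weights D/(n e) and -(1-D)/(n (1-e)), not normalized
w_ht = D / (n * e) - (1 - D) / (n * (1 - e))
ate_ht = np.sum(w_ht * Y)

# Hajek IPW: rescale so treated weights sum to 1 and control weights to -1
w1 = D / e
w0 = (1 - D) / (1 - e)
w_hajek = w1 / w1.sum() - w0 / w0.sum()
ate_hajek = np.sum(w_hajek * Y)

# ate_hajek equals 1 up to floating-point error; ate_ht generally does not
print(ate_ht, ate_hajek)
```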

It turns out that implementation details control the weights' properties and that

1. AIPW is fully-normalized in standard implementations

2. PLR(-IV) and causal/instrumental forest are not. Instead, their weights are scale-normalized, adding up to the same constant in one group and to minus that constant in the other.
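A one-line derivation shows why this matters for the Y = 1 + D puzzle. Write the estimate as τ̂ = Σ_i w_i Y_i and assume scale-normalized weights that sum to c over the treated and to -c over the controls (notation chosen here, not taken from the paper):

```latex
\hat\tau = \sum_{i=1}^{N} w_i Y_i = \sum_{i=1}^{N} w_i (1 + D_i)
  = \underbrace{\sum_{i:D_i=1} w_i + \sum_{i:D_i=0} w_i}_{=\,c - c\,=\,0}
  + \underbrace{\sum_{i:D_i=1} w_i}_{=\,c}
  = c
```

So such an estimator returns exactly 1 only when c = 1, i.e. when its weights are fully-normalized.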

We can investigate whether weights are fully-normalized, adding up to 1 for the treated and to -1 for the controls, or fall into other classes (see figure).

This connects nicely to recent work by @tymonsloczynski.bsky.social and @jmwooldridge.bsky.social on Abadie’s kappa estimators.
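A minimal sketch of such a check, given a vector of outcome weights and a binary treatment. The helper name `weight_class` and the tolerance are hypothetical; the labels follow the terminology of the posts, and the paper's figure may distinguish further classes.

```python
import numpy as np

def weight_class(w, D, tol=1e-8):
    """Classify outcome weights by their sums within treatment groups.

    'fully-normalized': treated weights sum to 1, control weights to -1.
    'scale-normalized': treated weights sum to c, control weights to -c.
    'unnormalized': anything else.
    """
    w = np.asarray(w, dtype=float)
    D = np.asarray(D)
    s1 = w[D == 1].sum()  # sum of weights over treated units
    s0 = w[D == 0].sum()  # sum of weights over control units
    if abs(s1 - 1) < tol and abs(s0 + 1) < tol:
        return "fully-normalized"
    if abs(s1 + s0) < tol:
        return "scale-normalized"
    return "unnormalized"
```

For instance, the weights (0.6, 0.6, -0.6, -0.6) for D = (1, 1, 0, 0) come out as scale-normalized with c = 1.2, so by the argument for Y = 1 + D they would estimate an effect of 1.2.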

Plug&play with the great cobalt package of @noahgreifer.bsky.social and Double ML with random forests. We see that 5-fold cross-fitting improves covariate balancing compared to 2-fold cross-fitting.

Also, tuning causal/instrumental forests substantially improves balancing for C(L)ATE estimates.
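cobalt is an R package; as a rough Python analogue, the sketch below computes standardized mean differences of covariates implied by a given vector of outcome weights. It assumes the weights are positive within the treated and negative within the controls (as for normalized weights), and it rescales each group to a weighted mean. Using the pooled unweighted standard deviation as the denominator is a common convention but an assumption here, not necessarily cobalt's exact default for every estimand. The function name `weighted_smd` is hypothetical.

```python
import numpy as np

def weighted_smd(X, D, w):
    """Standardized mean differences of the columns of X implied by outcome weights w."""
    X = np.asarray(X, dtype=float)
    D = np.asarray(D)
    w = np.asarray(w, dtype=float)
    # Rescale each group's weights to sum to 1 (control weights are negative,
    # so dividing by their negative sum makes them positive)
    w1 = w[D == 1] / w[D == 1].sum()
    w0 = w[D == 0] / w[D == 0].sum()
    mean1 = w1 @ X[D == 1]
    mean0 = w0 @ X[D == 0]
    # Pooled unweighted standard deviation as the scale
    sd = np.sqrt((X[D == 1].var(axis=0, ddof=1) + X[D == 0].var(axis=0, ddof=1)) / 2)
    return (mean1 - mean0) / sd
```

With difference-in-means weights D_i/N_1 - (1 - D_i)/N_0 this reproduces the raw, unweighted standardized differences; plugging in the outcome weights of an estimator shows how much it balances the covariates.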

Find attached the derivations for PLR and AIPW. If you want to see even uglier expressions, check the paper.

If you prefer code over formulas, check the "theory in action" notebook, which confirms computationally that the math in the paper is correct: shorturl.at/N4I4V
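A minimal numerical check in the same spirit (not the notebook itself). If the outcome nuisance is a linear smoother, Ŷ = S Y, the PLR estimate θ̂ = Σ D̃_i (Y_i - Ŷ_i) / Σ D̃_i², with treatment residuals D̃, is linear in Y with weights w = (I - S)' D̃ / (D̃' D̃). Assumptions for brevity: OLS with an intercept serves as the smoother for both nuisances, there is no cross-fitting, and the data are simulated.

```python
import numpy as np

rng = np.random.default_rng(7)
n, p = 200, 3
X = np.column_stack([np.ones(n), rng.normal(size=(n, p))])
D = X[:, 1] + rng.normal(size=n)
Y = 0.5 * D + X[:, 2] + rng.normal(size=n)

# OLS hat matrix: fitted values are S @ v for any variable v
S = X @ np.linalg.solve(X.T @ X, X.T)
I = np.eye(n)

# Moment-based PLR estimate: regress outcome residuals on treatment residuals
D_res = D - S @ D
Y_res = Y - S @ Y
theta_moment = (D_res @ Y_res) / (D_res @ D_res)

# Weighted representation: theta = sum_i w_i Y_i
w = (I - S).T @ D_res / (D_res @ D_res)
theta_weights = w @ Y
```

Both numbers agree up to floating-point error, without any asymptotics. Because the smoother includes an intercept, (I - S) maps a constant to zero, so the weights also sum to zero.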

Smoothers, we need smoothers!!! Outcome nuisance parameters have to be estimated using methods like (post-selection) OLS, (kernel) (ridge) or series regressions, tree-based methods, …

Check out @aliciacurth.bsky.social for nice references and cool insights on using smoothers in ML.
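Ridge regression, one of the smoothers listed, makes the requirement concrete: its fitted values are Ŷ = S Y with S = X (X'X + λI)^{-1} X', a matrix that does not depend on Y. The penalty λ and the data below are hypothetical, and the intercept is omitted for brevity.

```python
import numpy as np

rng = np.random.default_rng(3)
n, p, lam = 100, 5, 2.0
X = rng.normal(size=(n, p))
Y = X @ rng.normal(size=p) + rng.normal(size=n)

# Ridge coefficients and fitted values computed the usual way
beta = np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ Y)
fitted = X @ beta

# Same fitted values via the smoother matrix, which depends only on X and lam
S = X @ np.linalg.solve(X.T @ X + lam * np.eye(p), X.T)
fitted_smoother = S @ Y
```

Because S does not involve Y, any estimator that plugs in these fitted values stays linear in Y, which is what makes outcome weights available.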

Derive outcome weights for the six leading cases of Double ML and Generalized Random Forest.

Luckily, it suffices to do the work for instrumental forest, AIPW and Wald-AIPW. Everything else, including the outcome weights already documented in the literature, follows as special cases.

Establish numerical equivalence between moment-based and weighted representation.

Insights required:

1. (Almost) everything is an IV (@instrumenthull.bsky.social), or at least a pseudo-IV

2. A transformation matrix producing your pseudo-outcome is all you need
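A minimal sketch of insight 2 for AIPW. If both outcome regressions are linear smoothers, μ̂_1 = S_1 Y and μ̂_0 = S_0 Y, the AIPW pseudo-outcome is T Y for a matrix T that does not depend on Y, and the ATE (its average) has outcome weights w = T'1/N. Assumptions: arm-specific OLS with an intercept as the smoothers, a known propensity score, no cross-fitting, simulated data; `arm_smoother` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(11)
n = 300
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
e = 1 / (1 + np.exp(-X[:, 1]))  # propensity score, assumed known here
D = rng.binomial(1, e)
Y = X @ np.array([1.0, 0.5, -0.3]) + D * (1 + X[:, 2]) + rng.normal(size=n)

def arm_smoother(X, mask):
    """OLS fitted on the units in `mask`, predicting for all units: mu_hat = S @ Y."""
    Wm = np.diag(mask.astype(float))
    return X @ np.linalg.solve(X.T @ Wm @ X, X.T @ Wm)

S1 = arm_smoother(X, D == 1)
S0 = arm_smoother(X, D == 0)
I = np.eye(n)

# Transformation matrix T such that the AIPW pseudo-outcome equals T @ Y
T = (S1 - S0
     + np.diag(D / e) @ (I - S1)
     - np.diag((1 - D) / (1 - e)) @ (I - S0))

# Moment-based AIPW: average of the pseudo-outcome built from fitted values
mu1, mu0 = S1 @ Y, S0 @ Y
pseudo = mu1 - mu0 + D * (Y - mu1) / e - (1 - D) * (Y - mu0) / (1 - e)
ate_moment = pseudo.mean()

# Weighted representation: ATE = w @ Y with w = T' 1 / n
w = T.T @ np.ones(n) / n
ate_weights = w @ Y
```

In this setup the weights also sum to 1 over the treated and to -1 over the controls, in line with AIPW being fully-normalized.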

Outcome weights are a well-established lens to understand how a

concrete estimator implementation processes a

concrete sample to produce a

concrete point estimate.

No asymptotics, expectations or approximations.

Just watch a (potentially complicated multi-stage) estimator digest your sample.
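The simplest case as a sketch: the difference in means is already a weighted sum of the observed outcomes, with w_i = D_i/N_1 - (1 - D_i)/N_0 (notation chosen here). The data are simulated for illustration.

```python
import numpy as np

rng = np.random.default_rng(5)
D = rng.binomial(1, 0.4, size=50)
Y = rng.normal(size=50) + D

# Point estimate computed the usual way
diff_means = Y[D == 1].mean() - Y[D == 0].mean()

# Same estimate as an explicit weighted sum of the observed outcomes
n1, n0 = D.sum(), (1 - D).sum()
w = D / n1 - (1 - D) / n0
diff_weights = w @ Y
```

Multi-stage estimators such as Double ML or causal forests produce more complicated w_i, but the estimate is still Σ_i w_i Y_i for the sample at hand.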

1. Recipe to write estimators as weighted outcomes

2. Double ML and causal forests as weighting estimators

3. Plug&play classic covariate balancing checks

4. Explains why Causal ML fails to find an effect of 1 with noiseless outcome Y = 1 + D

5. More fun facts

arxiv.org/abs/2411.11559

When? April 11th

What? 2h of fun understanding how (easy) DoubleML and Causal Forests of @VC31415 and @Susan_Athey work by replicating R output in a few lines of code. #RStats

How? Sign up here t.ly/3weFr
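In the same spirit, a Python sketch (the workshop itself uses R) of the partially linear model with 5-fold cross-fitting, written out by hand instead of calling a DoubleML package. The random forest settings, fold count and data-generating process are assumptions; the standard error uses the usual sandwich form for the partialling-out score.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import KFold, cross_val_predict

rng = np.random.default_rng(0)
n = 2000
X = rng.normal(size=(n, 5))
D = np.sin(X[:, 0]) + 0.5 * X[:, 1] + rng.normal(size=n)
Y = 0.5 * D + np.cos(X[:, 0]) + X[:, 1] + rng.normal(size=n)  # true theta = 0.5

# Cross-fitted nuisance predictions of E[D|X] and E[Y|X]
folds = KFold(n_splits=5, shuffle=True, random_state=0)
rf = RandomForestRegressor(n_estimators=100, min_samples_leaf=5, random_state=0)
D_hat = cross_val_predict(rf, X, D, cv=folds)
Y_hat = cross_val_predict(rf, X, Y, cv=folds)

# Partialling-out estimate and a heteroskedasticity-robust standard error
D_res, Y_res = D - D_hat, Y - Y_hat
theta = (D_res @ Y_res) / (D_res @ D_res)
psi = (Y_res - theta * D_res) * D_res
se = np.sqrt(np.mean(psi ** 2) / np.mean(D_res ** 2) ** 2 / n)
```

`cross_val_predict` refits a copy of the forest on each training split and predicts the held-out fold, which is exactly the cross-fitting step.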

Traditionally, my Causal ML students draw something using two potential outcome functions and see how causal forest approximates the resulting CATE function.

Wanna join the challenge? Share your "art" in the replies 👇

#EconSky #rstats #CausalSky

Full course material here github.com/MCKnaus/caus...

Hope some find it useful.

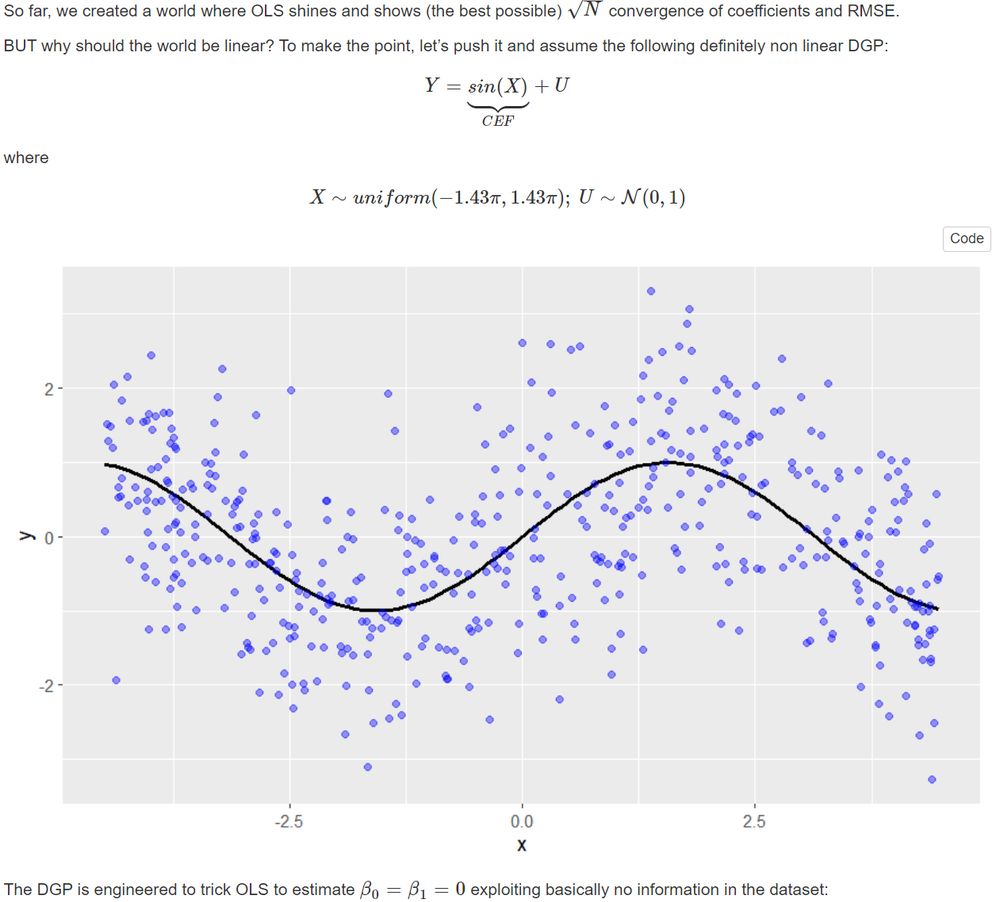

This notebook starts with the √N convergence of OLS parameters, as this is what students should be most familiar with.
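A minimal Monte Carlo sketch of that starting point (not the notebook's own code): in a hypothetical design Y = 1 + 2X + U with standard normal X and U, the sampling standard deviation of the OLS slope shrinks like 1/√N, so √N times it stays roughly constant, close to 1 here.

```python
import numpy as np

rng = np.random.default_rng(2024)
reps = 1000

def ols_slope_sd(n):
    """Monte Carlo standard deviation of the OLS slope in Y = 1 + 2X + U."""
    X = rng.normal(size=(reps, n))
    Y = 1 + 2 * X + rng.normal(size=(reps, n))
    # Slope estimate per replication via centered cross-products
    Xc = X - X.mean(axis=1, keepdims=True)
    Yc = Y - Y.mean(axis=1, keepdims=True)
    slopes = (Xc * Yc).sum(axis=1) / (Xc ** 2).sum(axis=1)
    return slopes.std()

for n in [100, 400, 1600]:
    sd = ols_slope_sd(n)
    # sqrt(n) * sd should stay roughly constant (close to 1 in this design)
    print(n, sd, np.sqrt(n) * sd)
```

Quadrupling N halves the standard deviation, which is the benchmark rate that Double ML aims to preserve for the target parameter.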
