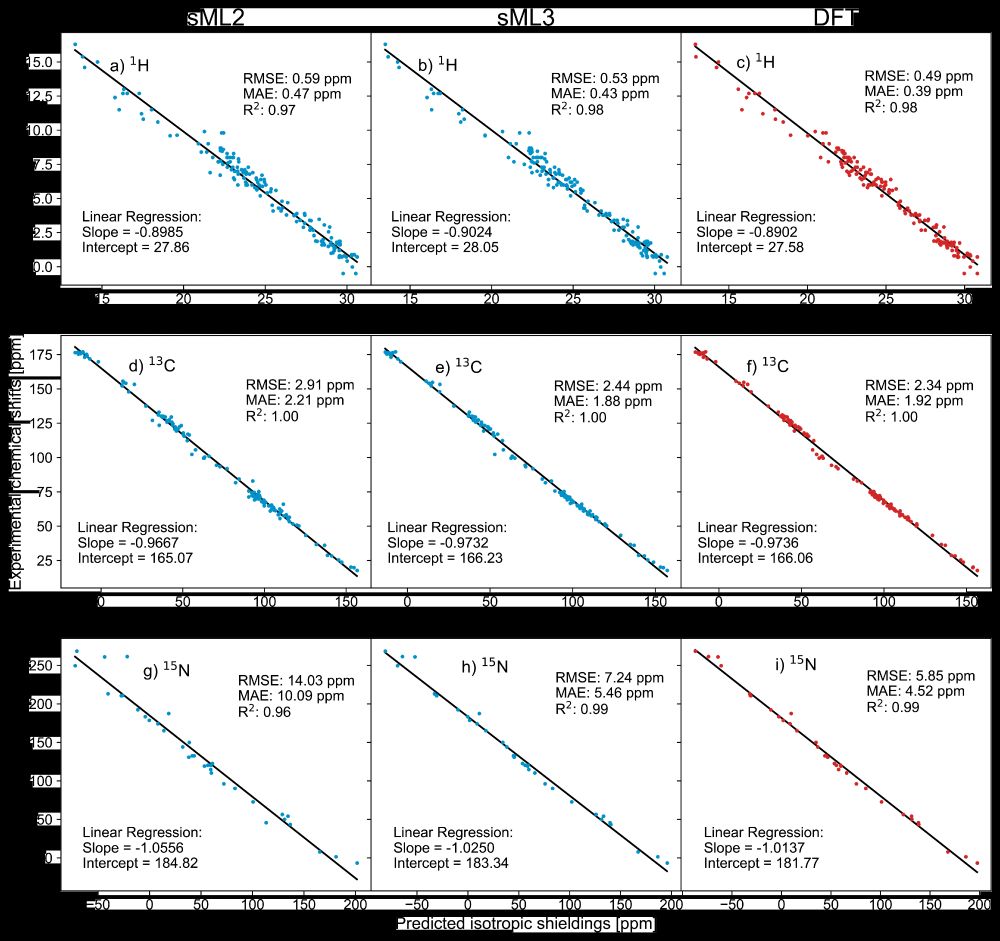

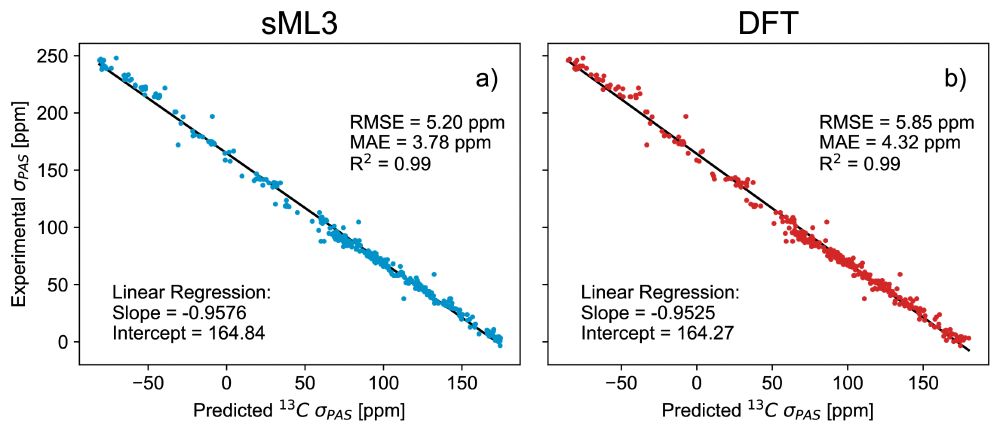

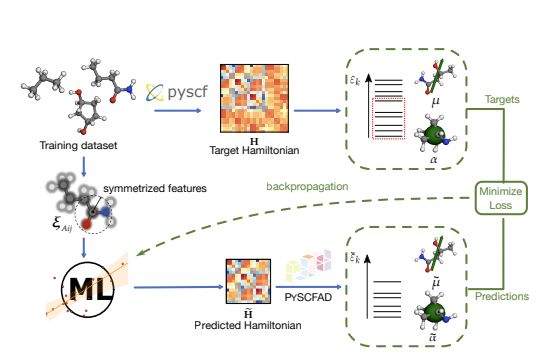

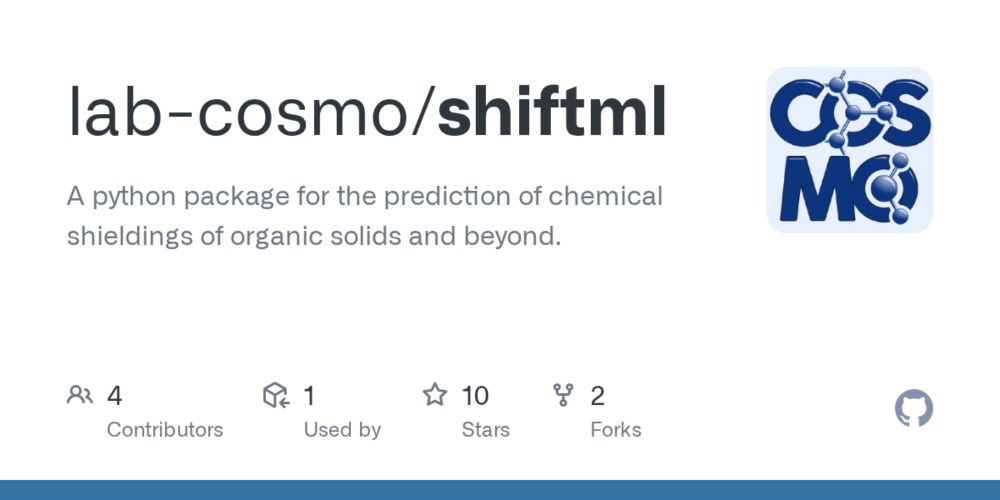

* ShiftML3 predicts full chemical shielding tensors

* DFT accuracy for ¹H, ¹³C, and ¹⁵N

* ASE integration

* GPU integration

Code: github.com/lab-cosmo/Sh...

Install from PyPI: `pip install shiftml`