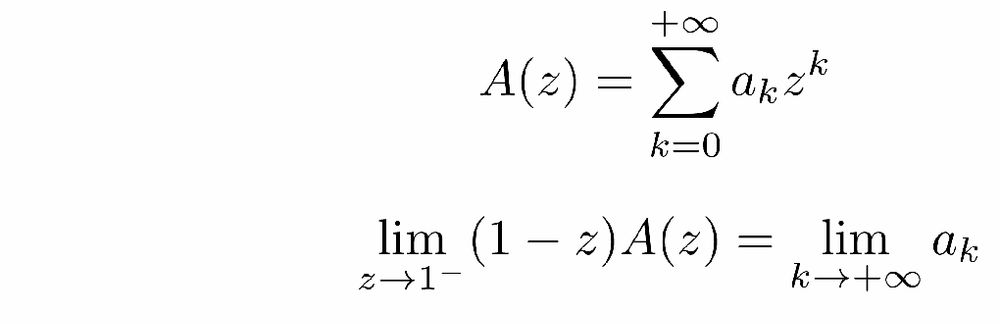

francisbach.com/z-transform/

With W. Azizian, J. Malick, and P. Mertikopoulos, we tackle this fundamental question in our new ICML 2025 paper: "The Global Convergence Time of Stochastic Gradient Descent in Non-Convex Landscapes"

🤯 Why does flow matching generalize? Did you know that the flow matching target you're trying to learn *can only generate training points*?

w/ @quentinbertrand.bsky.social, @annegnx.bsky.social, @remiemonet.bsky.social 👇👇👇

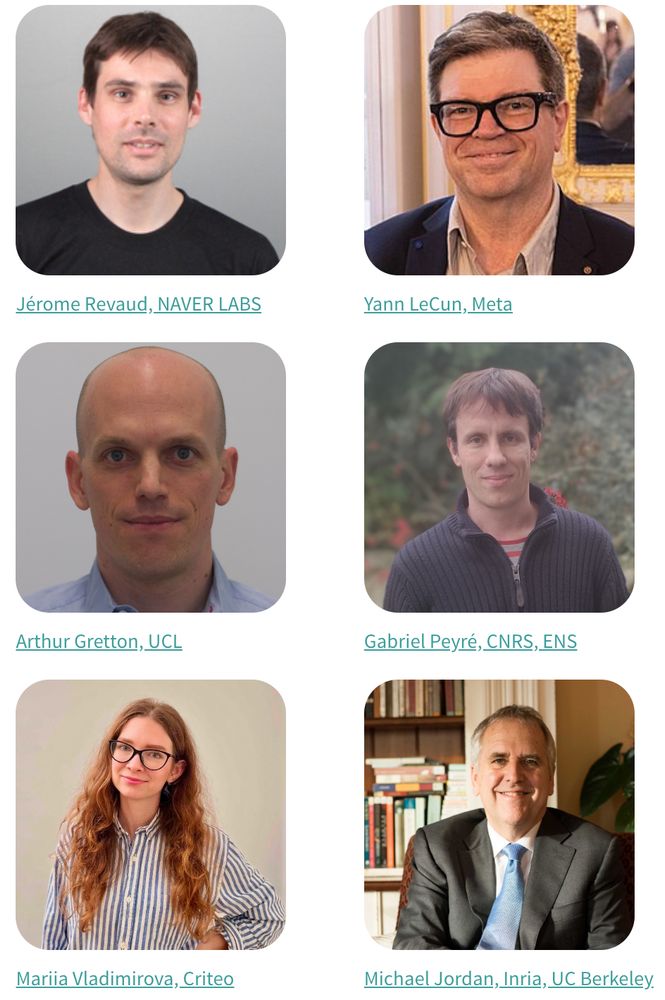

paiss.inria.fr

cc @mvladimirova.bsky.social

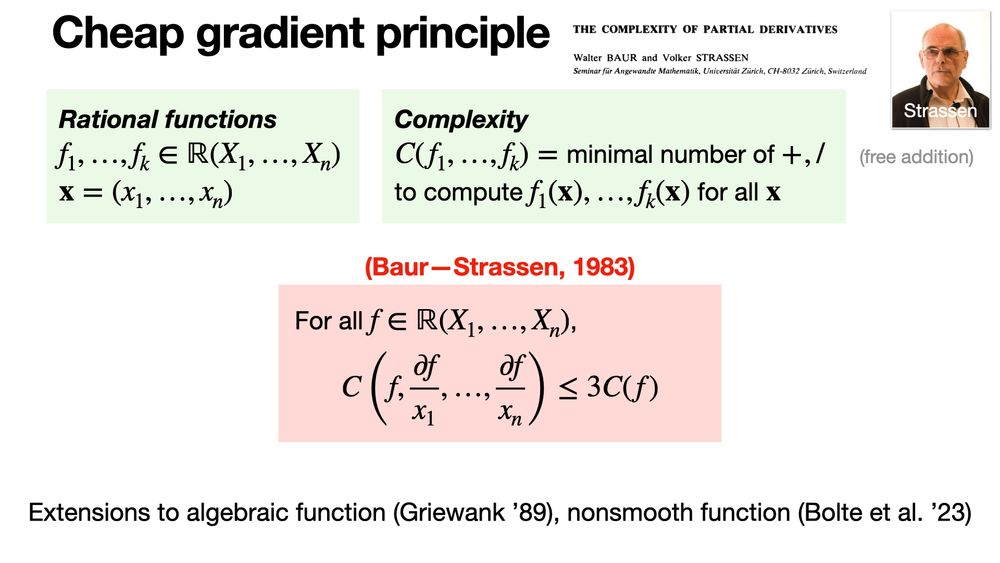

functions" is accepted at Mathematical Programming!! This is joint work with Jerome Bolte, Eric Moulines and Edouard Pauwels, where we study a subgradient method with errors for nonconvex nonsmooth functions.

arxiv.org/pdf/2404.19517

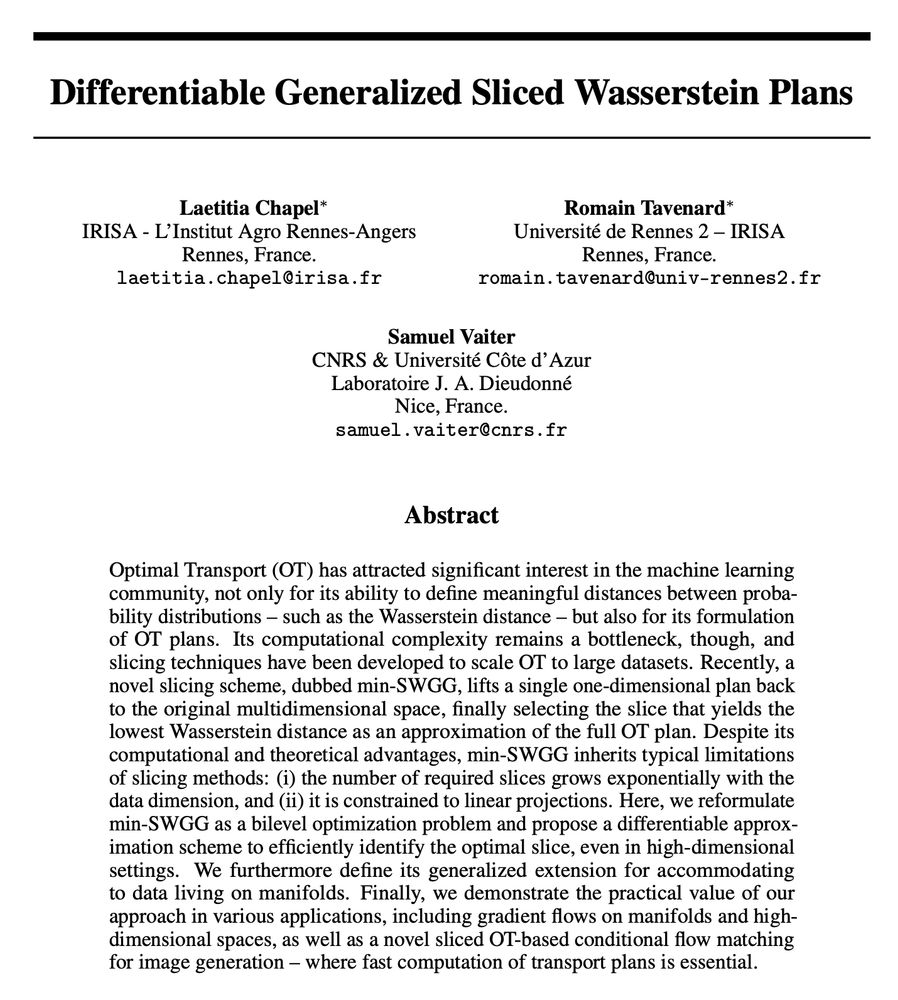

**Differentiable Generalized Sliced Wasserstein Plans**

w/ L. Chapel, @rtavenar.bsky.social

We propose a Generalized Sliced Wasserstein method that provides an approximate transport plan and admits a differentiable approximation.

arxiv.org/abs/2505.22049 1/5

**Geometric and computational hardness of bilevel programming**

w/ Jerôme Bolte, Tùng Lê & Edouard Pauwels

has been accepted to Mathematical Programming!

We study how difficult it may be to solve bilevel optimization beyond strongly convex inner problems

arxiv.org/abs/2407.12372

Learning Theory for Kernel Bilevel Optimization

w/ @fareselkhoury.bsky.social E. Pauwels @michael-arbel.bsky.social

We provide generalization error bounds for bilevel optimization problems where the inner objective is minimized over an RKHS.

arxiv.org/abs/2502.08457

Bilevel gradient methods and Morse parametric qualification

w/ J. Bolte, T. Lê, E. Pauwels

We study bilevel optimization with a nonconvex inner objective. To do so, we propose a new setting (Morse parametric qualification) to study bilevel algorithms.

arxiv.org/abs/2502.09074

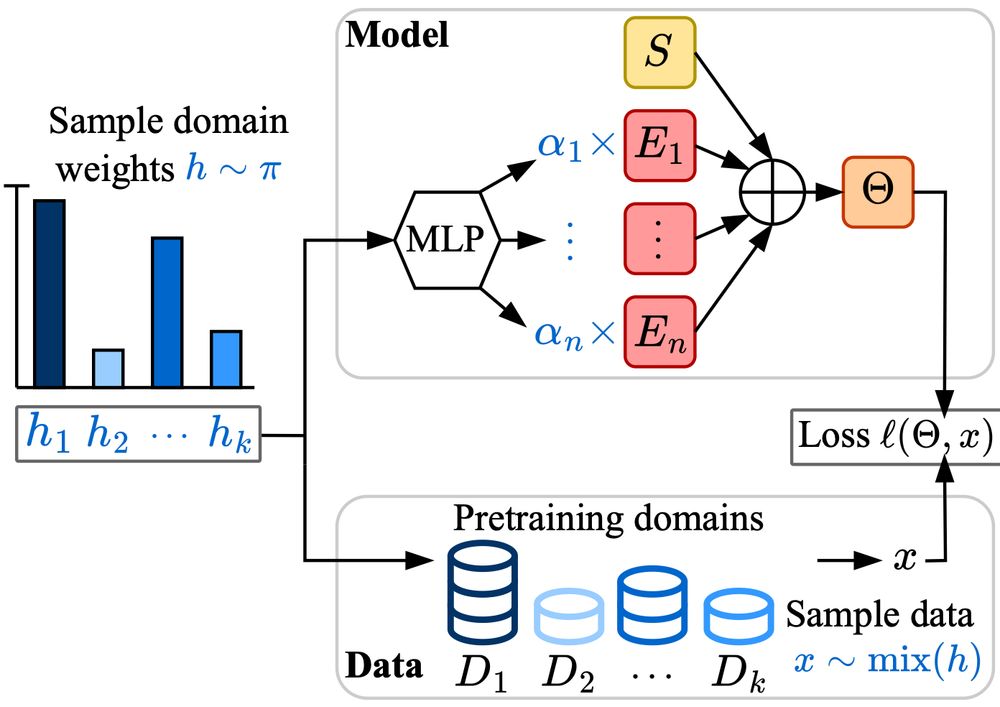

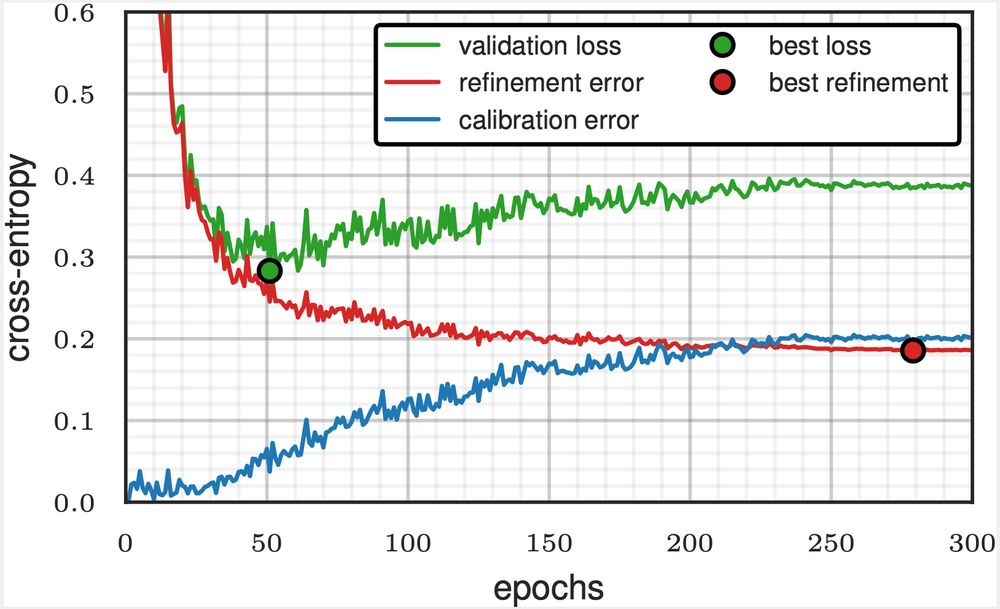

Made with ❤️ at Apple

Thanks to my co-authors David Grangier, Angelos Katharopoulos, and Skyler Seto!

arxiv.org/abs/2502.01804

Turns out that this behaviour can be described with a bound from *convex, nonsmooth* optimization.

A short thread on our latest paper 🚞

arxiv.org/abs/2501.18965

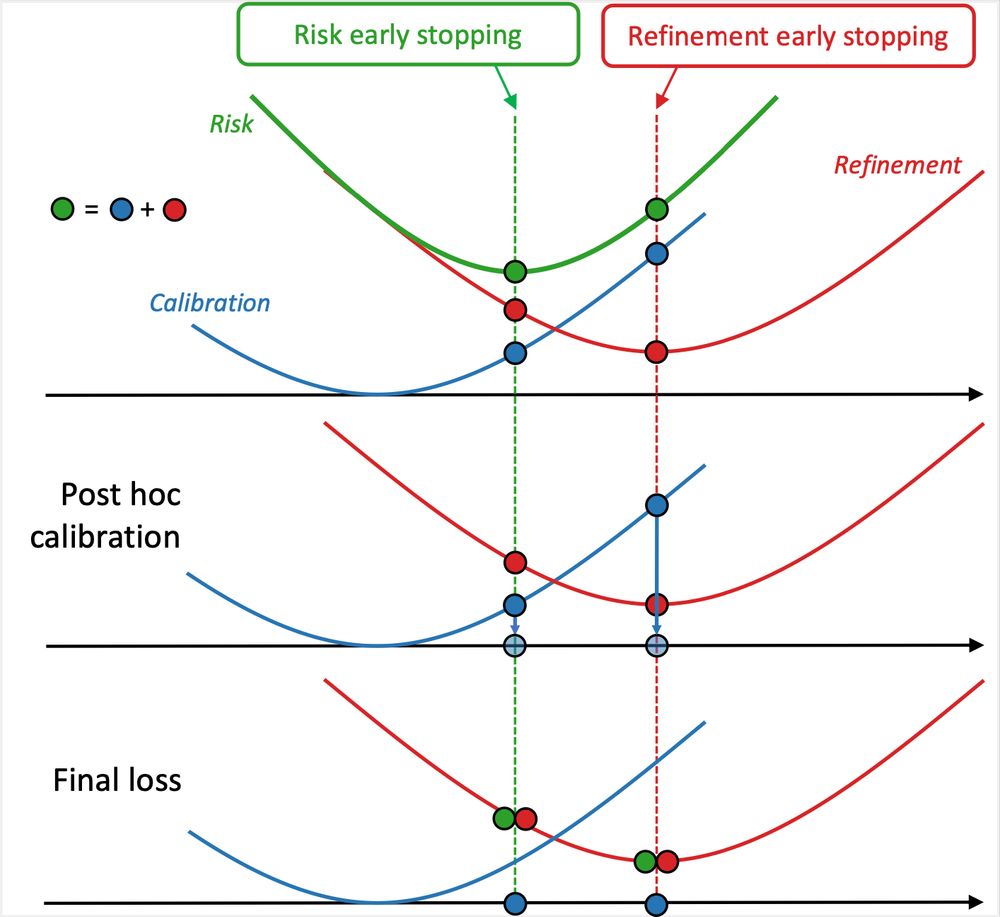

With @dholzmueller.bsky.social, Michael I. Jordan, and @bachfrancis.bsky.social, we propose a method that integrates with any model and boosts classification performance across tasks.

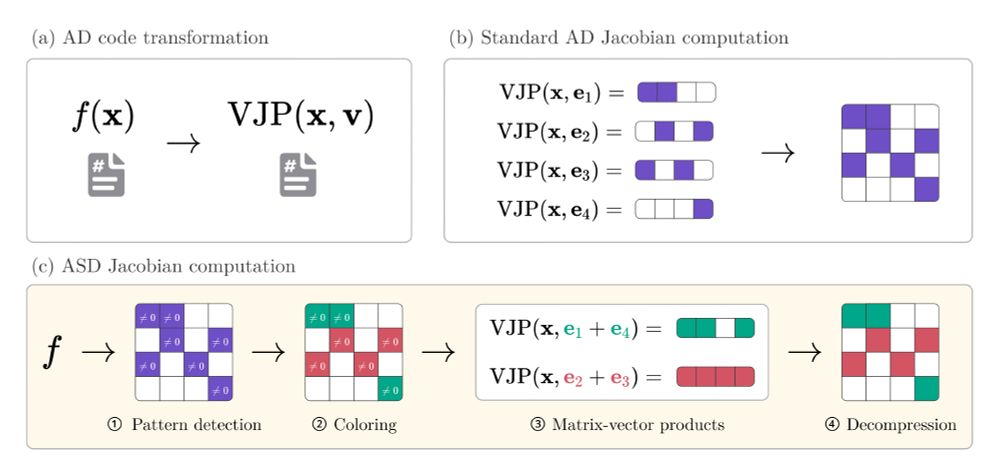

Make attention ~18% faster with a drop-in replacement 🚀

Code: github.com/apple/ml-sig...

Paper: arxiv.org/abs/2409.04431

arxiv.org/abs/2501.17737

🧵1/8

1) NLP and predictive ML to improve the management of stroke, in a multi-disciplinary and stimulating environment under the joint supervision of @adrien3000 from the @TeamHeka, me from @soda_INRIA and Eric Jouvent from @APHP team.inria.fr/soda/files/...
