In this post on Decisions & Dragons I answer "Should we abandon RL?"

The answer is obviously no, but people ask because they have a fundamental misunderstanding of what RL is.

RL is a problem, not an approach.

www.decisionsanddragons.com/posts/should...

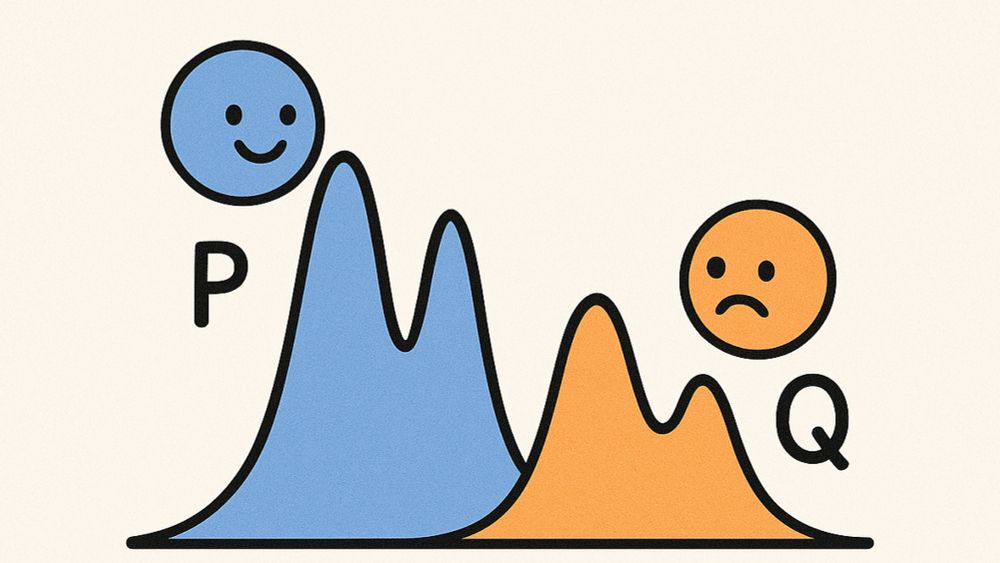

We use it everywhere, from training classifiers and distilling knowledge from models, to learning generative models and aligning LLMs.

BUT, what does it mean, and how do we (actually) compute it?

Video: youtu.be/tXE23653JrU

Come to the poster on Friday afternoon (#376 in Hall 3) or the talk on Saturday (4:30 in Hall 1), and while you’re at it snag him for a postdoc!

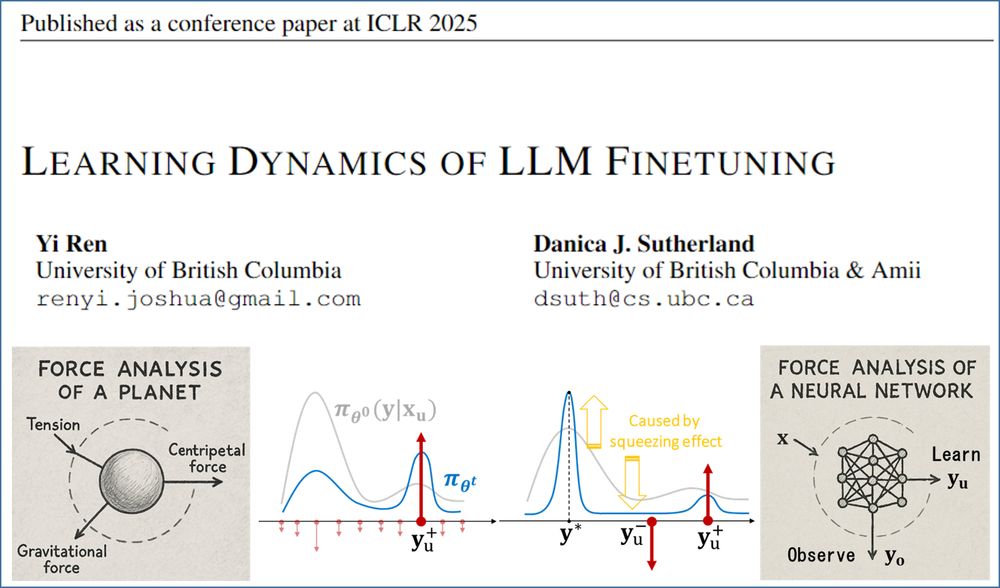

We offer a fresh perspective—consider doing a "force analysis" on your model’s behavior.

Check out our #ICLR2025 Oral paper:

Learning Dynamics of LLM Finetuning!

(0/12)

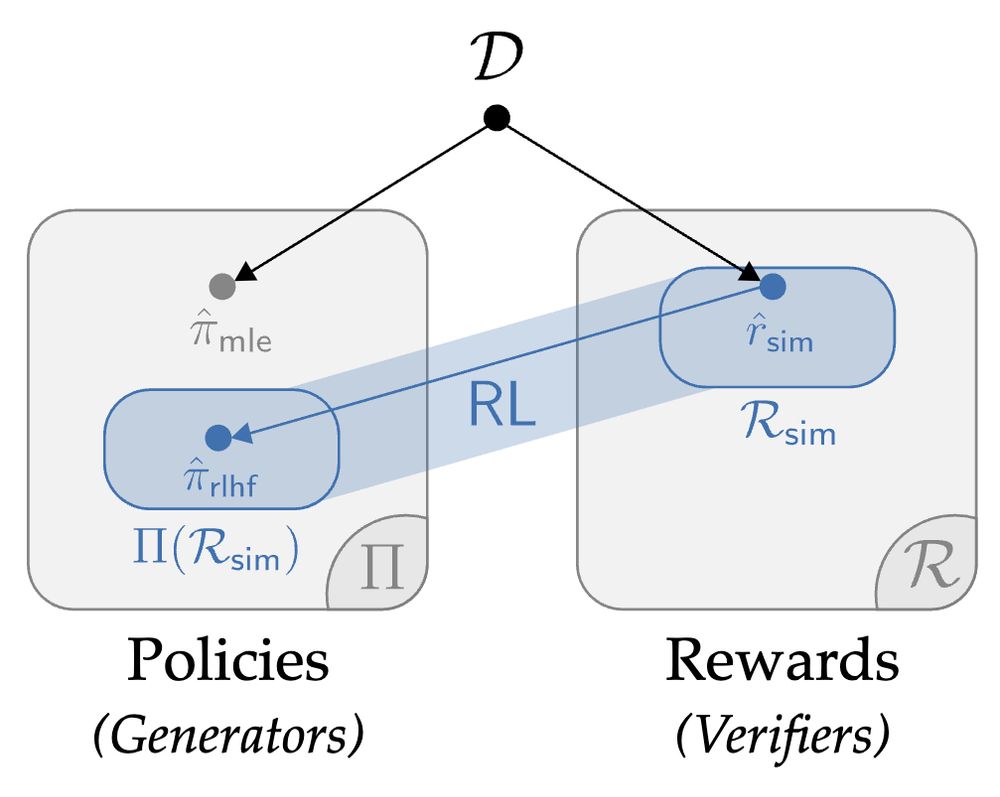

www.lesswrong.com/posts/oKAFFv...

A new language model uses diffusion instead of next-token prediction. That means it can back out of a hallucination before it commits. This is a big win for areas like law & contracts, where global consistency is valued.

timkellogg.me/blog/2025/02...

We are happy to "quietly" release our latest GRPO-trained Tulu 3.1 model, which is considerably better in MATH and GSM8K!

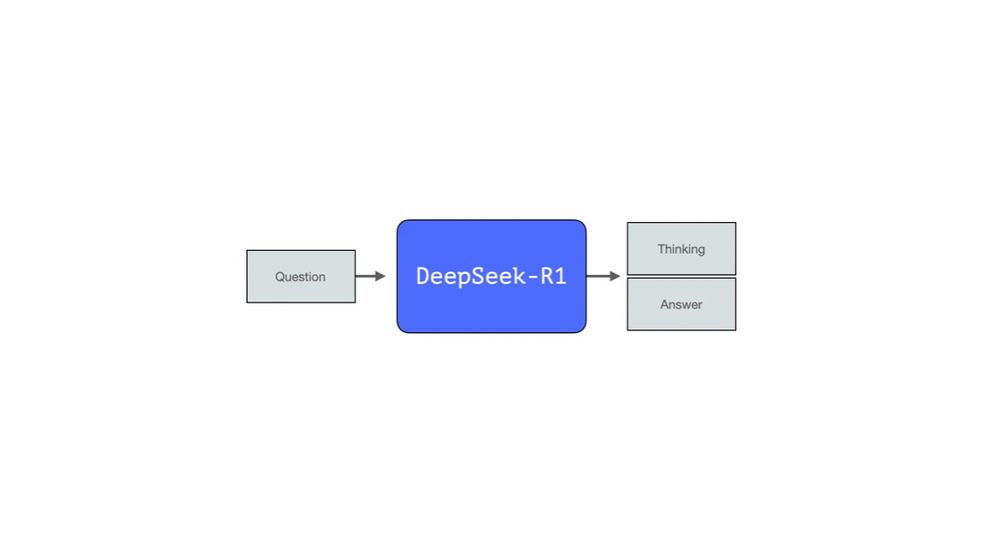

Spent the weekend reading the paper and sorting through the intuitions. Here's a visual guide and the main intuitions to understand the model and the process that created it.

newsletter.languagemodels.co/p/the-illust...

Policy gradient chapter is coming together. Plugging away at the book every day now.

rlhfbook.com/c/11-policy-...

DeepSeek R1 is just the tip of the iceberg of rapid progress.

People underestimate the long-term potential of “reasoning.”

PPO, GRPO, PRIME: doesn’t matter what RL you use, the key is that it’s RL

experiment logs: wandb.ai/jiayipan/Tin...

x: x.com/jiayi_pirate...

This is an attempt to consolidate the dizzying rate of AI developments since Christmas. If you're into AI but not deep enough, this should get you oriented again.

timkellogg.me/blog/2025/01...

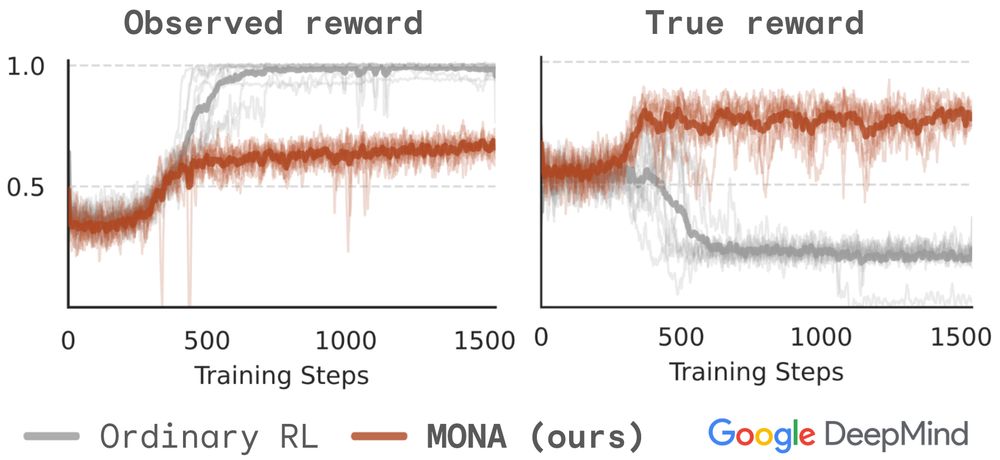

Our method, MONA, prevents many such hacks, *even if* humans are unable to detect them!

Inspired by myopic optimization, but with better performance – details in 🧵

Test-time compute paradigm seems really fruitful.

My talk at the NeurIPS Latent Space live event (pre o3).

Slides: https://buff.ly/40hsoTx

Post: https://buff.ly/40i2rDC

YouTube: https://buff.ly/40k8GH3

docs.google.com/presentation...

drive.google.com/file/d/1eLa3...

👉 x.com/rao2z/status...

(bsky still doesn't allow long posts, so..)

RSS'25 (abs): 25 days.

SIGGRAPH'25 (paper-md5): 31 days.

RSS'25 (paper): 32 days.

ICML'25: 38 days.

RLC'25 (abs): 53 days.

RLC'25 (paper): 60 days.

ICCV'25: 73 days.