our lab 👉 https://hebartlab.com

The position is funded as part of the Excellence Cluster "The Adaptive Mind" at @jlugiessen.bsky.social.

Please apply here by Nov 25:

www.uni-giessen.de/de/ueber-uns...

I would also like to know more about what is known in that age range!

The thing is: the logo is fixed and cannot change. So what had happened?

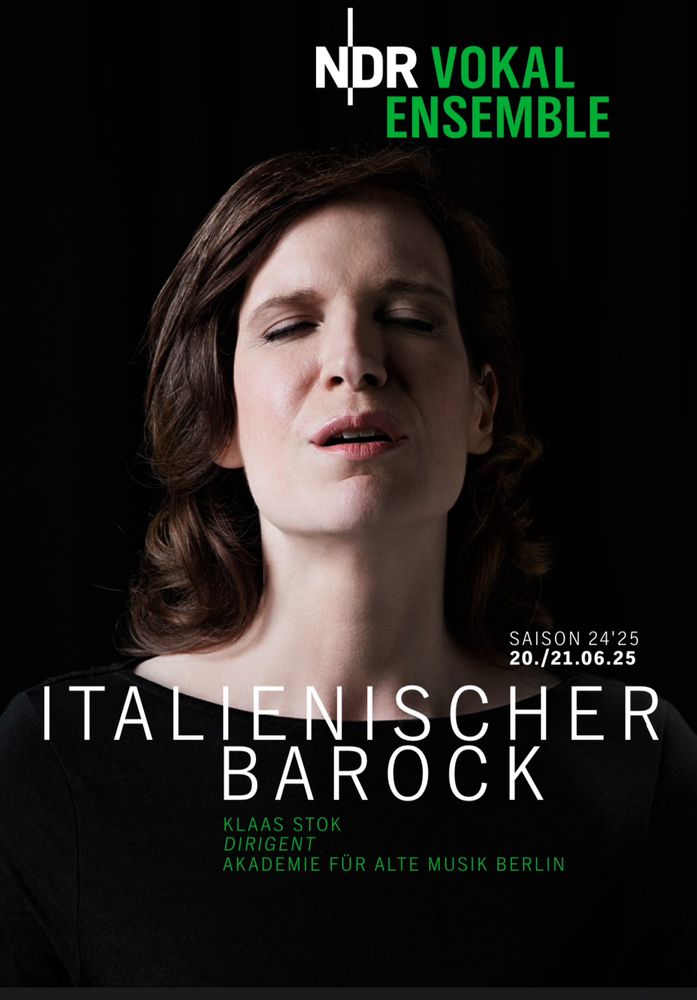

Go Malin! 🥳

www.ndr.de/orchester_ch...
