manueltonneau.com

Our new WP shows the answer is no:

-Millions of users post in languages with zero moderators

-Where mods exist, mod count relative to content volume varies widely across langs

osf.io/amfws

Big thanks to my wonderful co-authors: @deeliu97.bsky.social, Niyati, @computermacgyver.bsky.social, Sam, Victor, and @paul-rottger.bsky.social!

Thread 👇 and data available at huggingface.co/datasets/man...

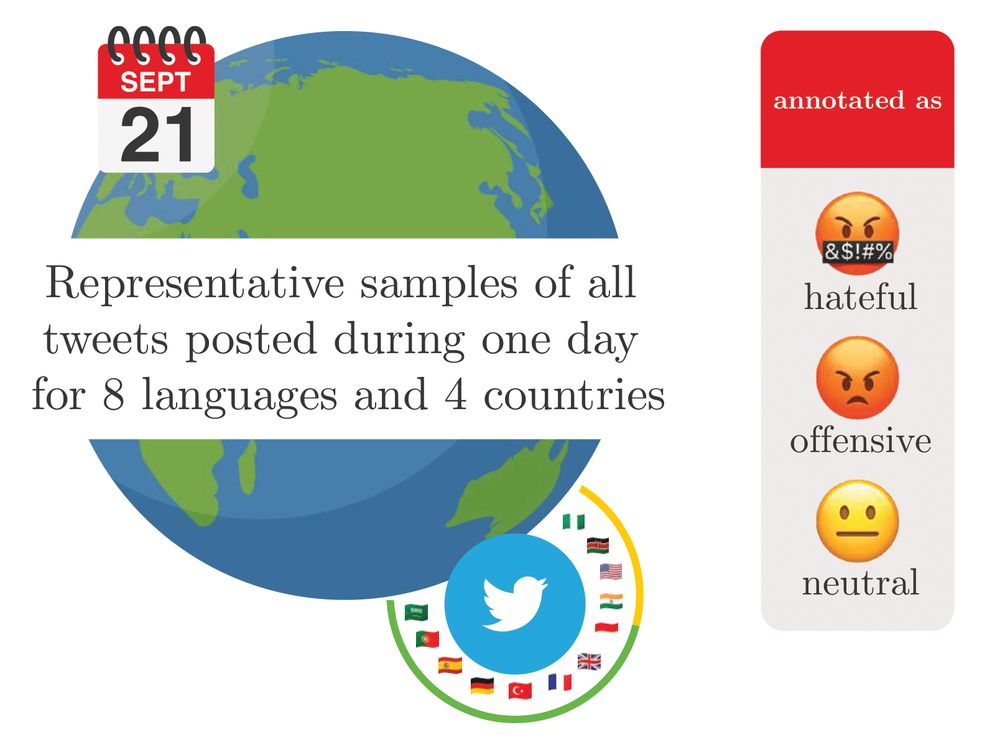

To answer this, we introduce 🤬HateDay🗓️, a global hate speech dataset representative of a day on Twitter.

The answer: not really! Detection perf is low and overestimated by traditional eval methods

arxiv.org/abs/2411.15462

🧵
