https://sites.google.com/view/lu-chun-yeh

How can we model natural scene representations in visual cortex? One solution lies in active vision: predict the features of the next glimpse! arxiv.org/abs/2511.12715

+ @adriendoerig.bsky.social , @alexanderkroner.bsky.social , @carmenamme.bsky.social , @timkietzmann.bsky.social

🧵 1/14

drive.google.com/drive/mobile...

Check it out: www.sciencedirect.com/science/arti...

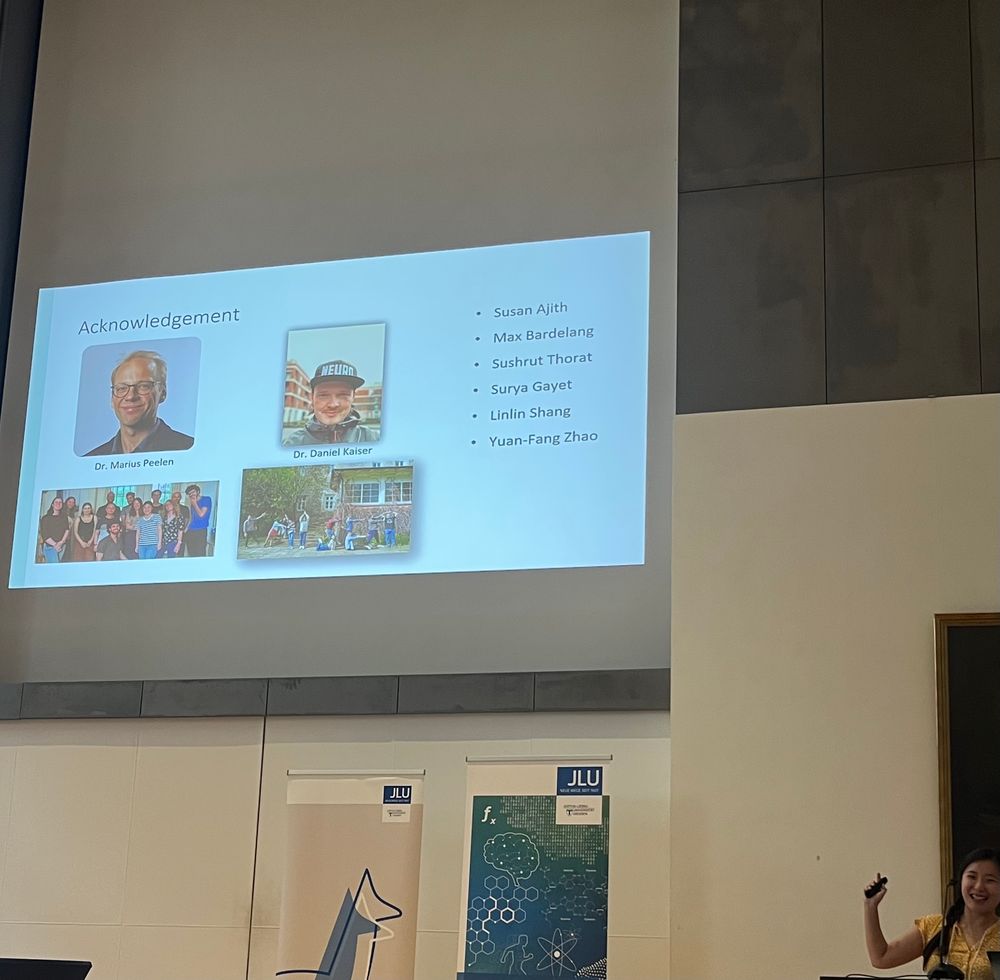

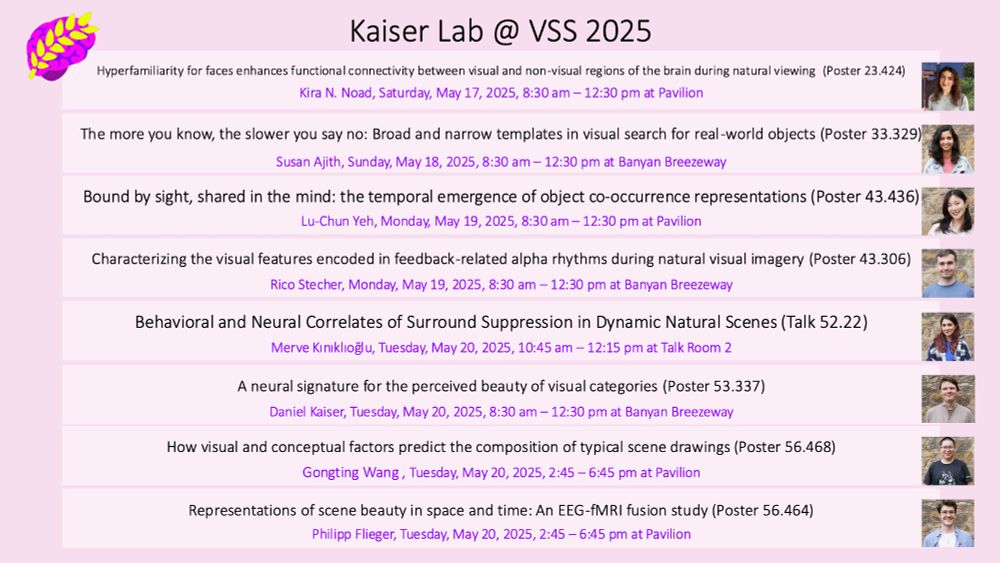

@dkaiserlab.bsky.social

journals.physiology.org/doi/full/10....