https://lorenzoscottb.github.io

In this work, we tested the ability of a task-level explainability method to trace biological-sex biases in medical image classification, using general and biomedical VLMs.

@aclmeeting.bsky.social @genderbiasnlp.bsky.social

#sleeppeeps #dream

Do you need to anonymise #dream reports? We've got you covered, with anonymized, a 1-line solution to find and replace entities.

Try it out in our tutorial section, or online:

colab.research.google.com/drive/14hHRRC3…

#scisky #sleeppeeps

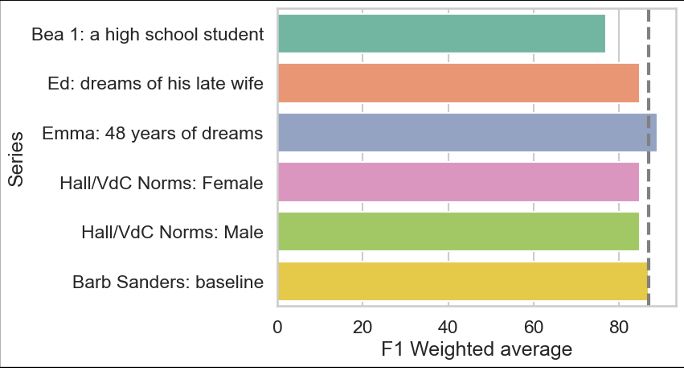

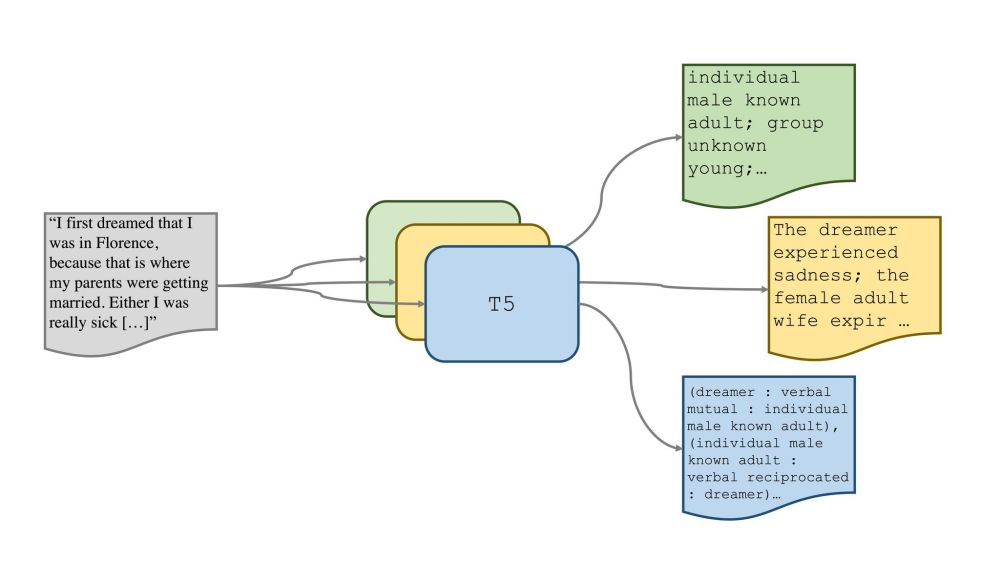

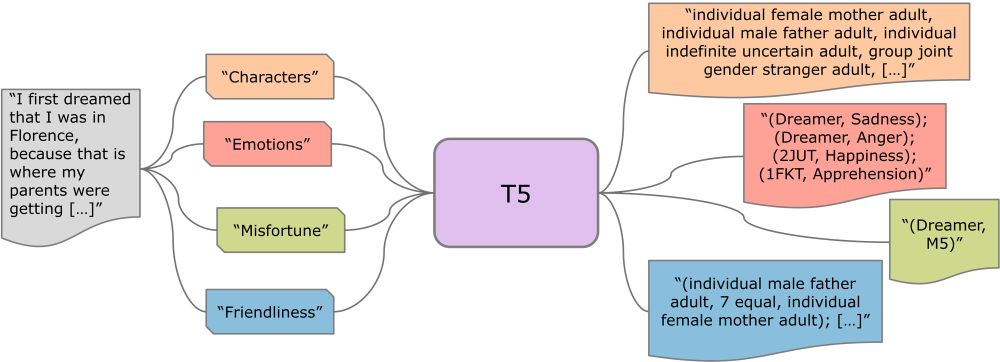

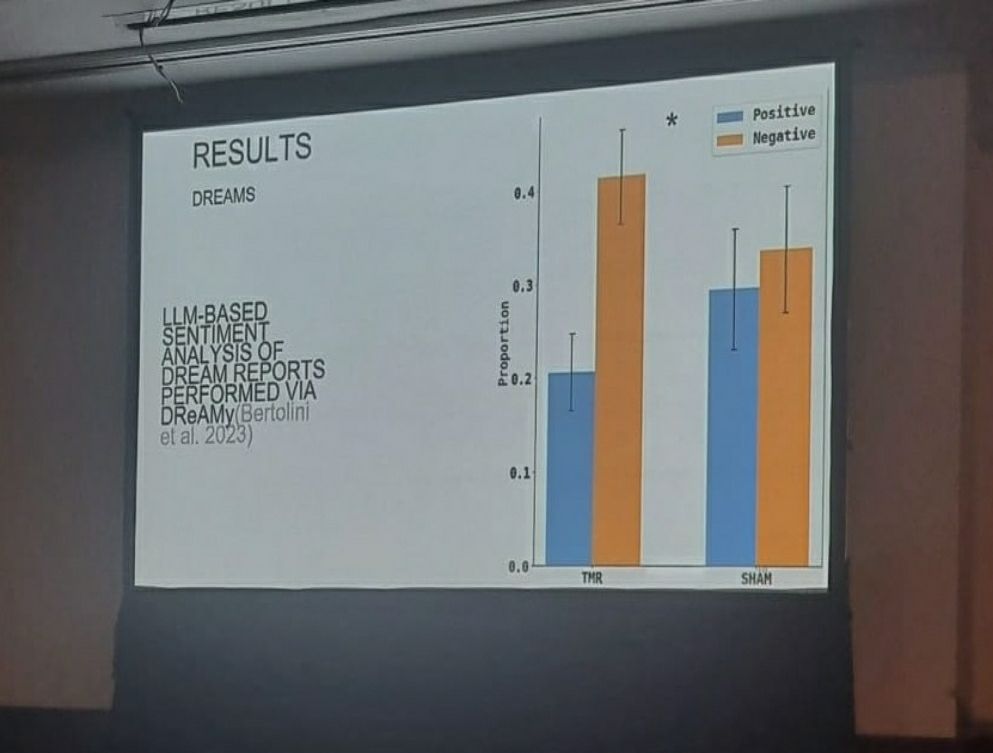

Given the encouraging results, I wanted to empower the dream research community with these tools, as well as other useful classic NLP tools. So I built a fully open-source Python library, designed for non-expert users.

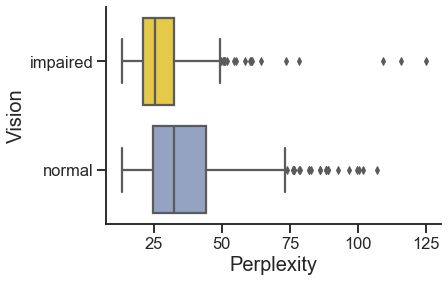

Given items with the same number of words, the perplexity scores are (on average) notably lower (hence better).
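For readers new to the metric, here is a minimal sketch of why lower perplexity is read as better. It assumes the standard definition (the exponential of the mean negative log-likelihood per token) and uses made-up token probabilities; the function name and the numbers are illustrative only and are not taken from the experiments or the library.

```python
import math


def perplexity(token_log_probs):
    """Exponential of the mean negative log-likelihood per token (natural log)."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# Hypothetical items of the same length (4 tokens each).
# In the first, the model assigns each token probability 0.5; in the second, 0.1.
confident = [math.log(0.5)] * 4
uncertain = [math.log(0.1)] * 4

# The item the model finds more predictable gets the lower score.
print(perplexity(confident) < perplexity(uncertain))
```

Comparing items of equal length, as in the post, keeps the per-token average from being skewed by very short or very long texts.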
