@cogcompneuro.bsky.social

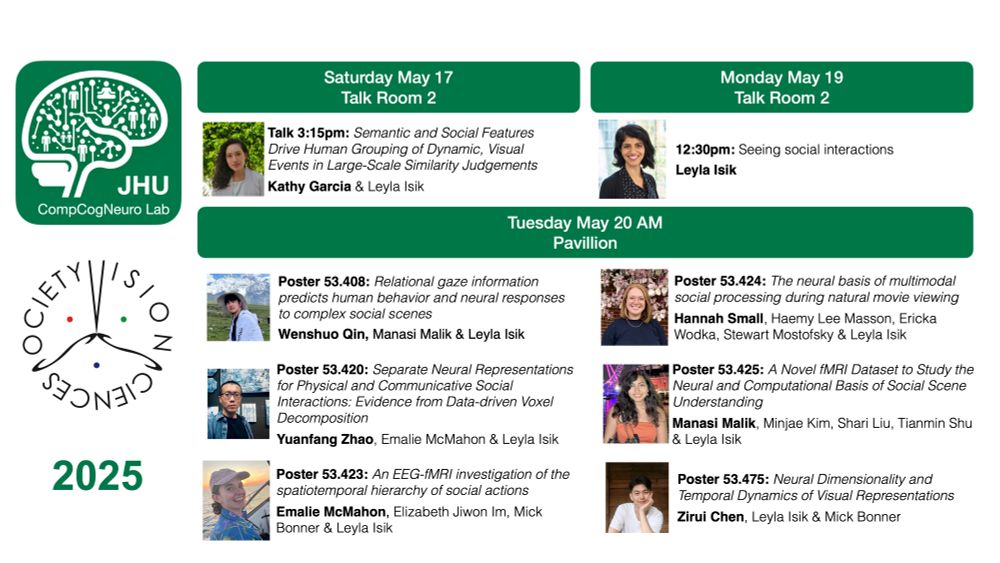

And congratulations again to @emaliemcmahon.bsky.social on her Glushko dissertation prize 🥳

I’m also honored to receive this year’s young investigator award and will give a short talk at the awards ceremony Monday

2/n

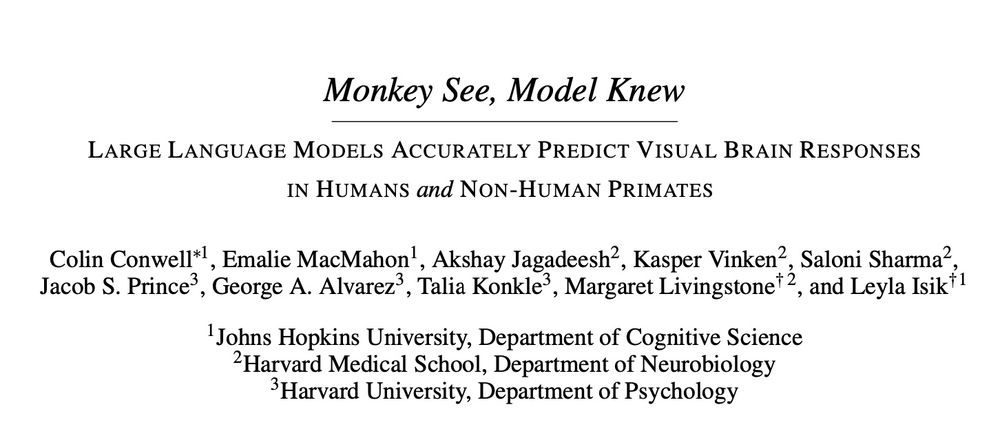

Led by Colin Conwell with @emaliemcmahon.bsky.social, Akshay Jagadeesh, Kasper Vinken, @amrahs-inolas.bsky.social, @jacob-prince.bsky.social, George Alvarez, @taliakonkle.bsky.social & Marge Livingstone 1/n

Colin Conwell, @emaliemcmahon.bsky.social, Akshay Vivek Jagadeesh, Kasper Vinken, @amrahs-inolas.bsky.social, @jacob-prince.bsky.social, George Alvarez, @taliakonkle.bsky.social and Marge Livingstone

@hsmall.bsky.social, with @hleemasson.bsky.social and Stewart Mostofsky

www.nature.com/articles/s41...

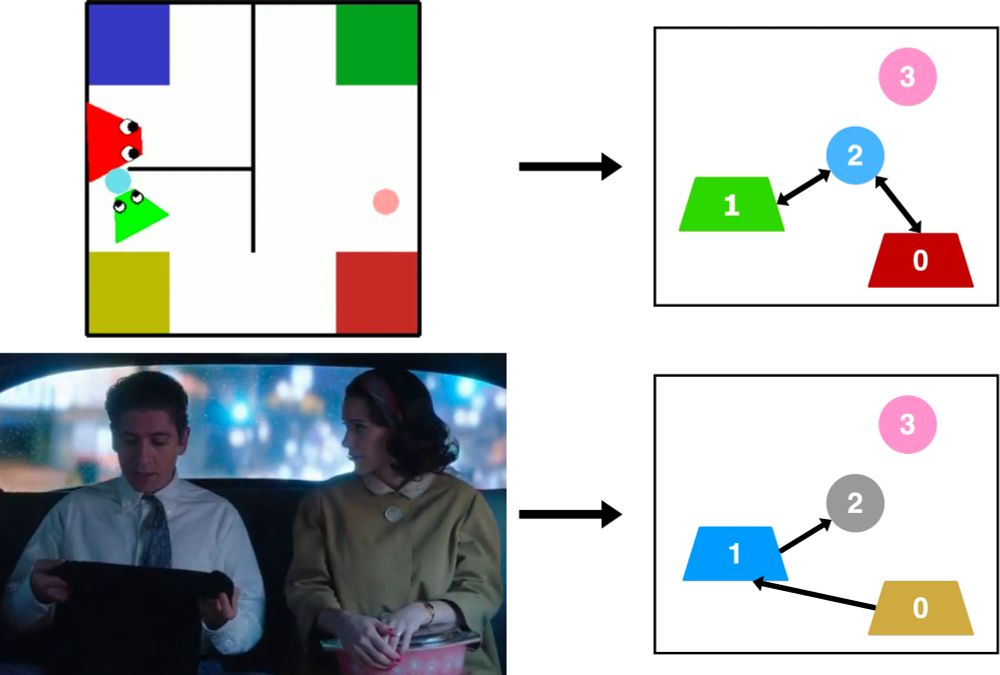

www.sciencedirect.com/science/arti...

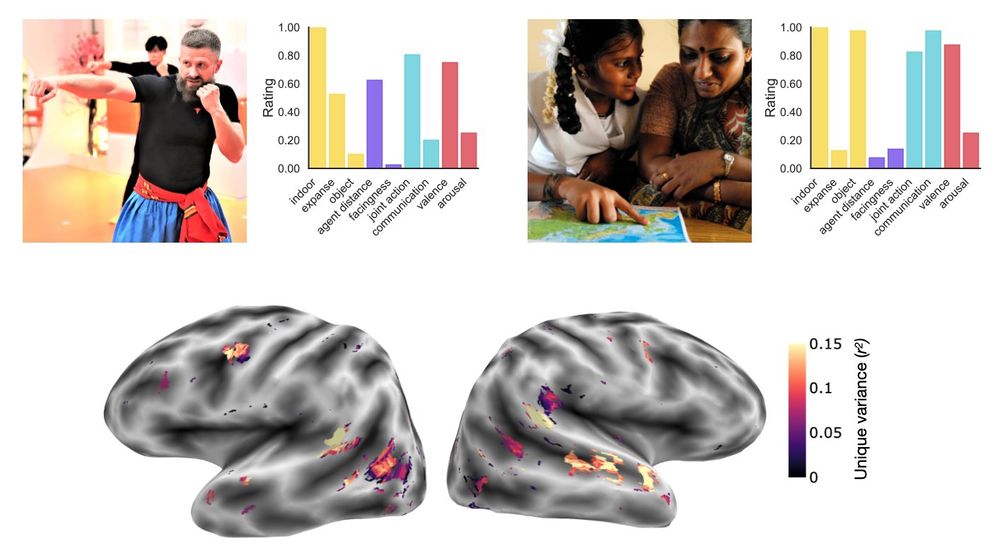

www.jneurosci.org/content/earl...

#neuroskyence #PsychSciSky

For example, if I first ask for a "closed, empty box", it seems to do better with "closed box with sheep inside, not visible" (though still only 50-50!)
