Andrew Lampinen

@lampinen.bsky.social

Interested in cognition and artificial intelligence. Research Scientist at Google DeepMind. Previously cognitive science at Stanford. Posts are mine.

lampinen.github.io

Pinned

Andrew Lampinen

@lampinen.bsky.social

· Sep 22

Latent learning: episodic memory complements parametric learning by enabling flexible reuse of experiences

When do machine learning systems fail to generalize, and what mechanisms could improve their generalization? Here, we draw inspiration from cognitive science to argue that one weakness of machine lear...

arxiv.org

Why does AI sometimes fail to generalize, and what might help? In a new paper (arxiv.org/abs/2509.16189), we highlight the latent learning gap — which unifies findings from language modeling to agent navigation — and suggest that episodic memory complements parametric learning to bridge it. Thread:

Apologies for being quiet on here lately — been focusing on the more important things in life :)

November 9, 2025 at 11:34 PM

Pleased to share that our survey "Getting aligned on representational alignment" — on representational alignment across cognitive (neuro)science and machine learning — is now published in TMLR! openreview.net/forum?id=Hiq...

Kudos to @sucholutsky.bsky.social @lukasmut.bsky.social for leading this!

October 29, 2025 at 5:23 PM

Reposted by Andrew Lampinen

🚨 New preprint alert!

🧠🤖

We propose a theory of how learning curriculum affects generalization through neural population dimensionality. Learning curriculum is a determining factor of neural dimensionality: where you start from determines where you end up.

🧠📈

A 🧵:

tinyurl.com/yr8tawj3

The curriculum effect in visual learning: the role of readout dimensionality

Generalization of visual perceptual learning (VPL) to unseen conditions varies across tasks. Previous work suggests that training curriculum may be integral to generalization, yet a theoretical explan...

tinyurl.com

September 30, 2025 at 2:26 PM

Why does AI sometimes fail to generalize, and what might help? In a new paper (arxiv.org/abs/2509.16189), we highlight the latent learning gap — which unifies findings from language modeling to agent navigation — and suggest that episodic memory complements parametric learning to bridge it. Thread:

Latent learning: episodic memory complements parametric learning by enabling flexible reuse of experiences

When do machine learning systems fail to generalize, and what mechanisms could improve their generalization? Here, we draw inspiration from cognitive science to argue that one weakness of machine lear...

arxiv.org

September 22, 2025 at 4:21 AM

Reposted by Andrew Lampinen

How can an imitative model like an LLM outperform the experts it is trained on? Our new COLM paper outlines three types of transcendence and shows that each one relies on a different aspect of data diversity. arxiv.org/abs/2508.17669

August 29, 2025 at 9:46 PM

In neuroscience, we often try to understand systems by analyzing their representations — using tools like regression or RSA. But are these analyses biased towards discovering a subset of what a system represents? If you're interested in this question, check out our new commentary! Thread:

![What do representations tell us about a system? Image of a mouse with a scope showing a vector of activity patterns, and a neural network with a vector of unit activity patterns

Common analyses of neural representations: Encoding models (relating activity to task features) drawing of an arrow from a trace saying [on_____on____] to a neuron and spike train. Comparing models via neural predictivity: comparing two neural networks by their R^2 to mouse brain activity. RSA: assessing brain-brain or model-brain correspondence using representational dissimilarity matrices](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:e6ewzleebkdi2y2bxhjxoknt/bafkreiav2io2ska33o4kizf57co5bboqyyfdpnozo2gxsicrfr5l7qzjcq@jpeg)

August 5, 2025 at 2:36 PM

Reposted by Andrew Lampinen

Get ready to enter the simulation...

Genie 3 is a new frontier for world models: its environments remain largely consistent for several minutes, with visual memory extending as far back as 1 minute. These limitations will only decrease with time.

Welcome to the future.🙌

deepmind.google/discover/blo...

August 5, 2025 at 2:10 PM

Looking forward to attending CogSci this week! I'll be giving a talk (see below) at the Reasoning Across Minds and Machines workshop on Wednesday at 10:25 AM, and will be around most of the week — feel free to reach out if you'd like to meet up!

July 28, 2025 at 6:07 PM

Reposted by Andrew Lampinen

This summer my lab's journal club somewhat unintentionally ended up reading papers on a theme of "more naturalistic computational neuroscience". I figured I'd share the list of papers here 🧵:

July 23, 2025 at 2:59 PM

Reposted by Andrew Lampinen

Does vision training change how language is represented and used in meaningful ways?🤔The answer is a nuanced yes! Comparing VLM-LM minimal pairs, we find that while the taxonomic organization of the lexicon is similar, VLMs are better at _deploying_ this knowledge. [1/9]

July 22, 2025 at 4:46 AM

Quick thread on the recent IMO results and the relationship between symbol manipulation, reasoning, and intelligence in machines and humans:

July 21, 2025 at 10:20 PM

Reposted by Andrew Lampinen

Excited to share a new project spanning cognitive science and AI where we develop a novel deep reinforcement learning model, Multitask Preplay, that explains how people generalize to new tasks that were previously accessible but unpursued.

July 12, 2025 at 4:20 PM

Reposted by Andrew Lampinen

Exciting new preprint from the lab: “Adopting a human developmental visual diet yields robust, shape-based AI vision”. A most wonderful case where brain inspiration massively improved AI solutions.

Work with @zejinlu.bsky.social @sushrutthorat.bsky.social and Radek Cichy

arxiv.org/abs/2507.03168

arxiv.org

July 8, 2025 at 1:04 PM

Reposted by Andrew Lampinen

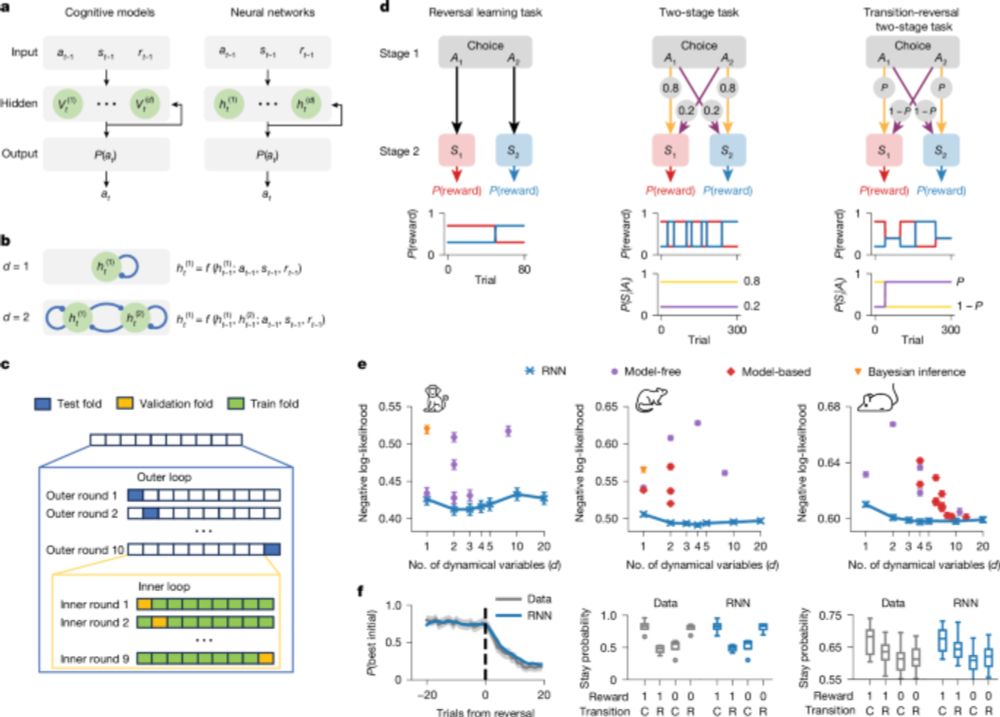

Thrilled to see our TinyRNN paper in @nature! We show how tiny RNNs predict choices of individual subjects accurately while staying fully interpretable. This approach can transform how we model cognitive processes in both healthy and disordered decisions. doi.org/10.1038/s415...

Discovering cognitive strategies with tiny recurrent neural networks - Nature

Modelling biological decision-making with tiny recurrent neural networks enables more accurate predictions of animal choices than classical cognitive models and offers insights into the underlying cog...

doi.org

July 2, 2025 at 7:03 PM

Reposted by Andrew Lampinen

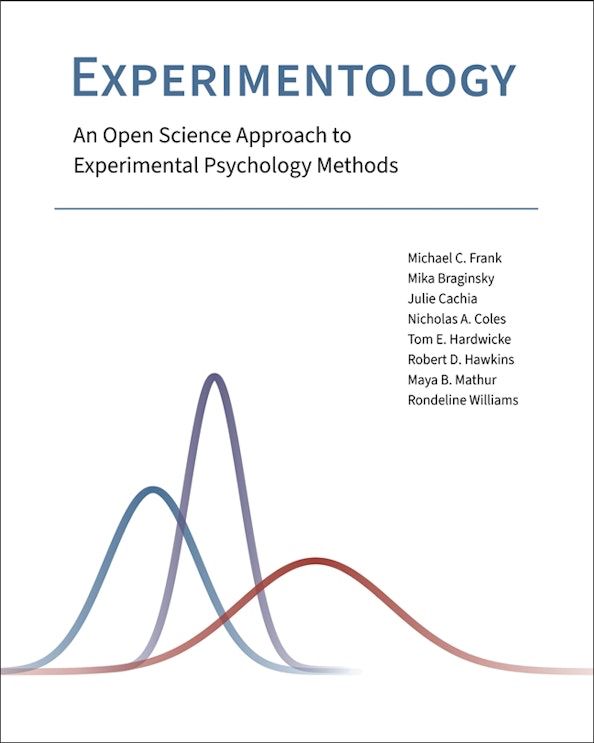

Experimentology is out today!!! A group of us wrote a free online textbook for experimental methods, available at experimentology.io. The idea was to integrate open science into all aspects of the experimental workflow, from planning to design, analysis, and writing.

July 1, 2025 at 6:26 PM

Really nice analysis!

🚨New paper! We know models learn distinct in-context learning strategies, but *why*? Why generalize instead of memorize to lower loss? And why is generalization transient?

Our work explains this & *predicts Transformer behavior throughout training* without its weights! 🧵

1/

June 28, 2025 at 8:03 AM

Reposted by Andrew Lampinen

Humans and animals can rapidly learn in new environments. What computations support this? We study the mechanisms of in-context reinforcement learning in transformers, and propose how episodic memory can support rapid learning. Work w/ @kanakarajanphd.bsky.social : arxiv.org/abs/2506.19686

From memories to maps: Mechanisms of in context reinforcement learning in transformers

Humans and animals show remarkable learning efficiency, adapting to new environments with minimal experience. This capability is not well captured by standard reinforcement learning algorithms that re...

arxiv.org

June 26, 2025 at 7:01 PM

Reposted by Andrew Lampinen

I'm excited about this TICS Opinion with @yngwienielsen.bsky.social, challenging the view that structural priming—the tendency to reuse a recent syntactic structure—provides evidence for the psychological reality of grammar-based constituent structure.

authors.elsevier.com/a/1lIFK4sIRv...

🧵1/4

June 25, 2025 at 5:46 PM

Reposted by Andrew Lampinen

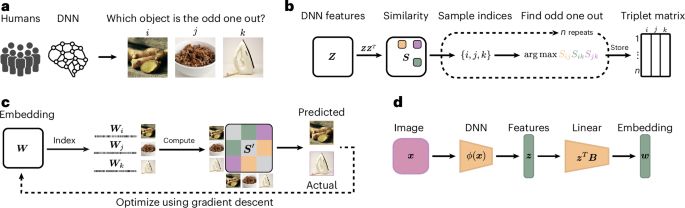

What makes humans similar or different to AI? In a paper out in @natmachintell.nature.com led by @florianmahner.bsky.social & @lukasmut.bsky.social, w/ Umut Güclü, we took a deep look at the factors underlying their representational alignment, with surprising results.

www.nature.com/articles/s42...

Dimensions underlying the representational alignment of deep neural networks with humans - Nature Machine Intelligence

An interpretability framework that compares how humans and deep neural networks process images has been presented. Their findings reveal that, unlike humans, deep neural networks focus more on visual ...

www.nature.com

June 23, 2025 at 8:03 PM

Reposted by Andrew Lampinen

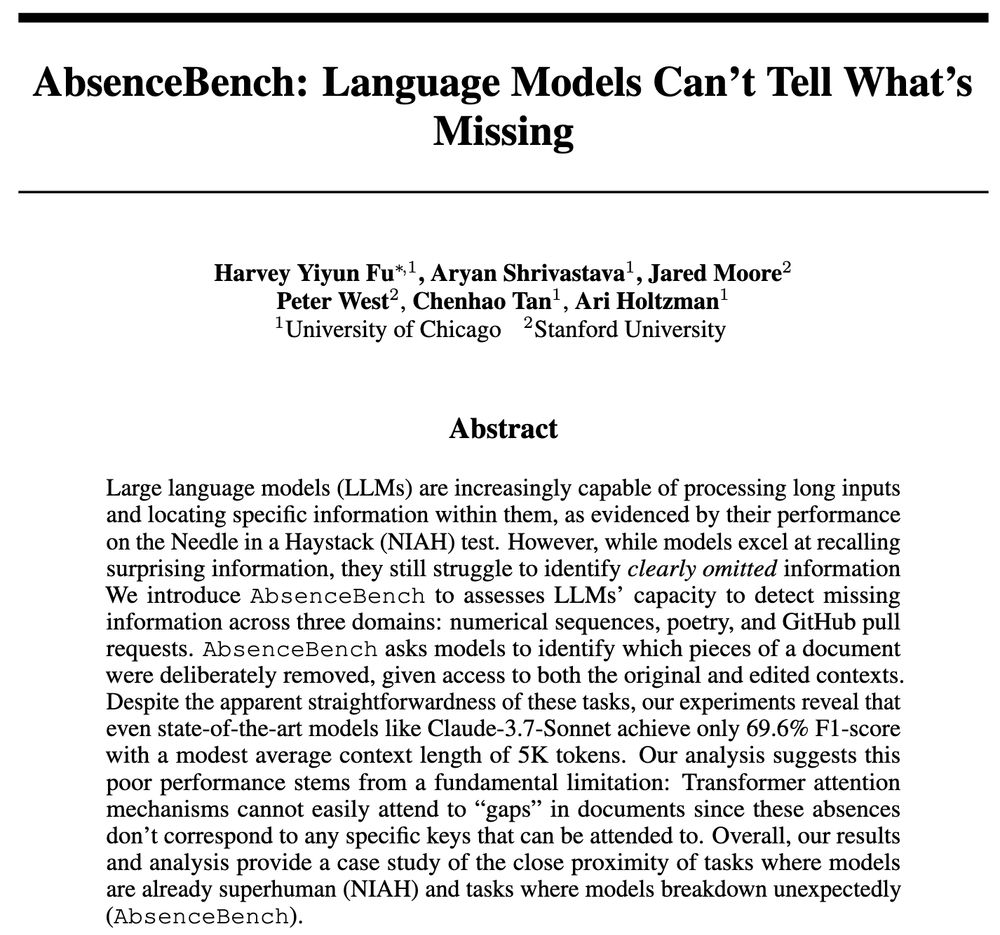

LLMs excel at finding surprising “needles” in very long documents, but can they detect when information is conspicuously missing?

🫥AbsenceBench🫥 shows that even SoTA LLMs struggle on this task, suggesting that LLMs have trouble perceiving “negative spaces”.

Paper: arxiv.org/abs/2506.11440

🧵[1/n]

June 20, 2025 at 10:03 PM

New and improved version of our recent preprint on how to build generalizable models & theories in cognitive science by (in part) drawing on lessons and tools from AI!

Excited to share this project specifying a research direction I think will be particularly fruitful for theory-driven cognitive science that aims to explain natural behavior!

We're calling this direction "Naturalistic Computational Cognitive Science"

June 16, 2025 at 7:36 PM

Reposted by Andrew Lampinen

ACL paper alert! What structure is lost when using linearizing interp methods like Shapley? We show the nonlinear interactions between features reflect structures described by the sciences of syntax, semantics, and phonology.

June 12, 2025 at 6:56 PM

Reposted by Andrew Lampinen

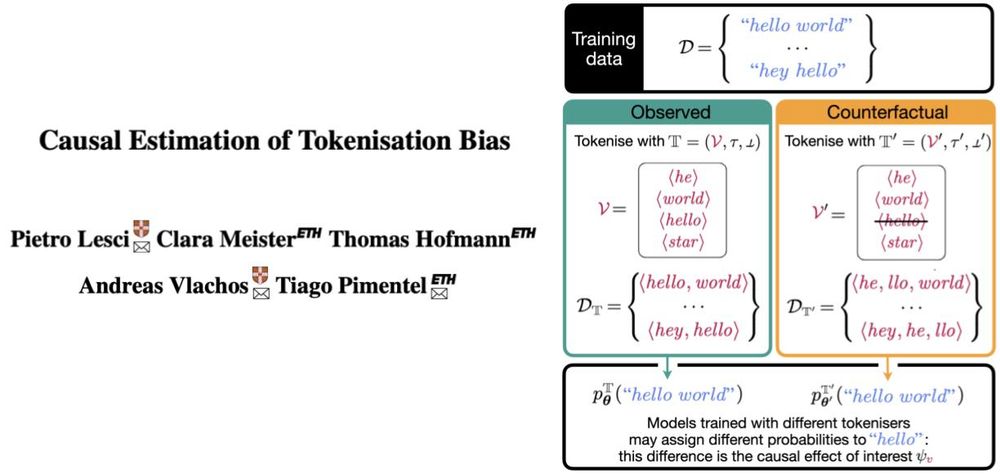

A string may get 17 times less probability if tokenised as two symbols (e.g., ⟨he, llo⟩) than as one (e.g., ⟨hello⟩)—by an LM trained from scratch in each situation! Our new ACL paper proposes an observational method to estimate this causal effect! Longer thread soon!

June 4, 2025 at 10:51 AM

Reposted by Andrew Lampinen

Transformer-based neural networks achieve impressive performance on coding, math & reasoning tasks that require keeping track of variables and their values. But how can they do that without explicit memory?

📄 Our new ICML paper investigates this in a synthetic setting!

🎥 youtu.be/Ux8iNcXNEhw

🧵 1/13

How Do Transformers Learn Variable Binding in Symbolic Programs?

YouTube video by Raphaël Millière

youtu.be

June 3, 2025 at 1:19 PM

Reposted by Andrew Lampinen

🎥 The recording of the first ELLISxUniReps Speaker Series session with Andrew Lampinen @lampinen.bsky.social and Jack Lindsey is now available here: www.youtube.com/watch?v=eXE0...

Next appointment: 20th June 2025 – 16:00 CEST on Zoom

🔵 Keynote: Mariya Toneva (MPI)

🔴 Flash: Lenka Tětková (DTU)

ELLISxUniReps First Session (May 29th) - with A. Lampinen and J. Lindsey

YouTube video by Unifying Representations in Neural Models

www.youtube.com

June 3, 2025 at 9:14 AM
