Supplementary materials can be found here:

github.com/kobihackenbu...

Comments and feedback welcome :)

→ Technical factors and/or hard limits on human persuadability may constrain future increases in AI persuasion

→ Real-world bottleneck for AI persuasion: getting people to engage (cf. recent work from @jkalla.bsky.social and co)

They also suggest that near-term advances in persuasion are more likely to be driven by post-training than model scale or personalization.

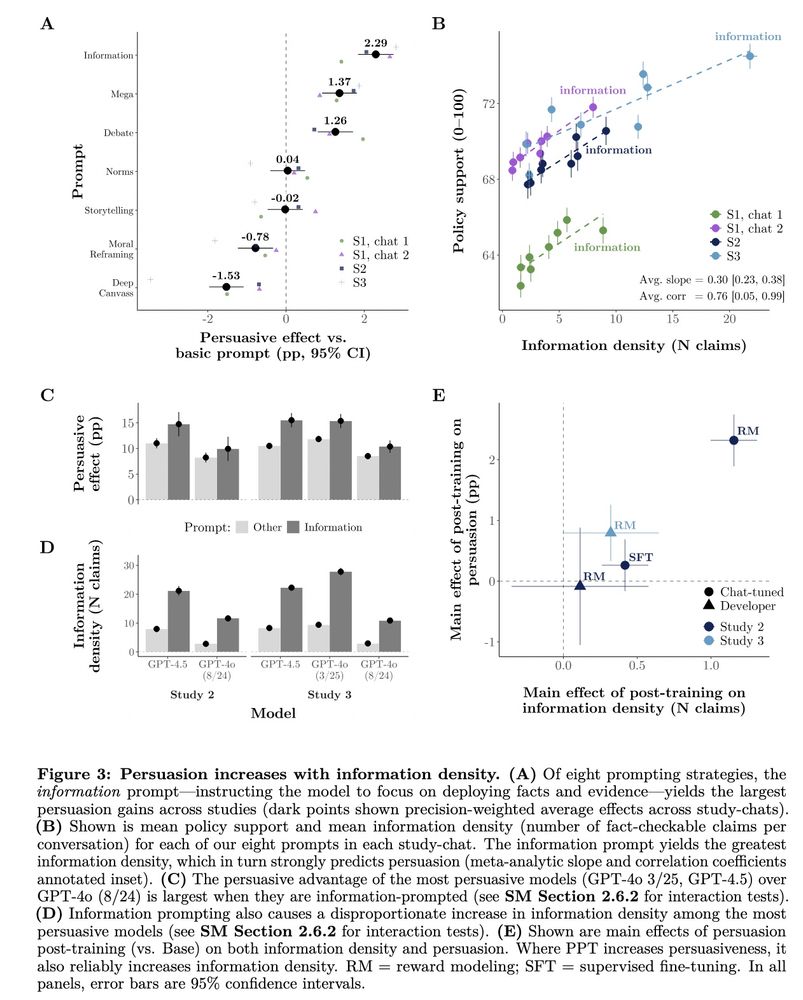

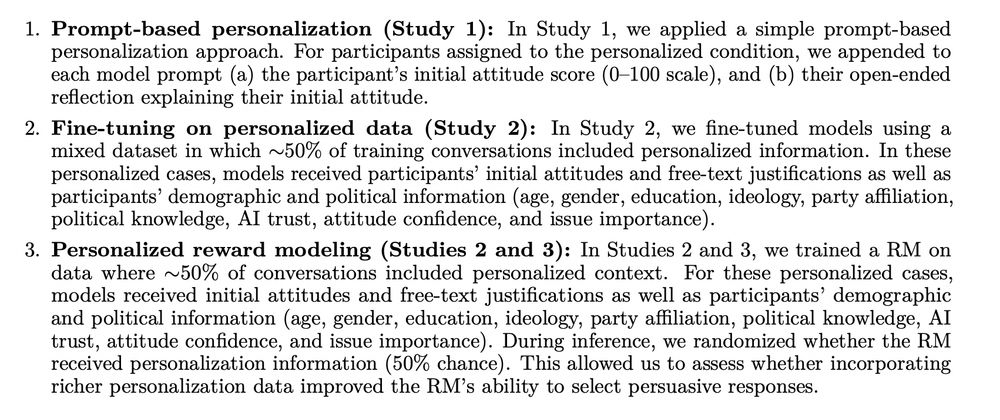

*️⃣Prompting the model with psychological persuasion strategies did worse than simply telling it to flood convo with info. Some strategies were worse than a basic “be as persuasive as you can” prompt.

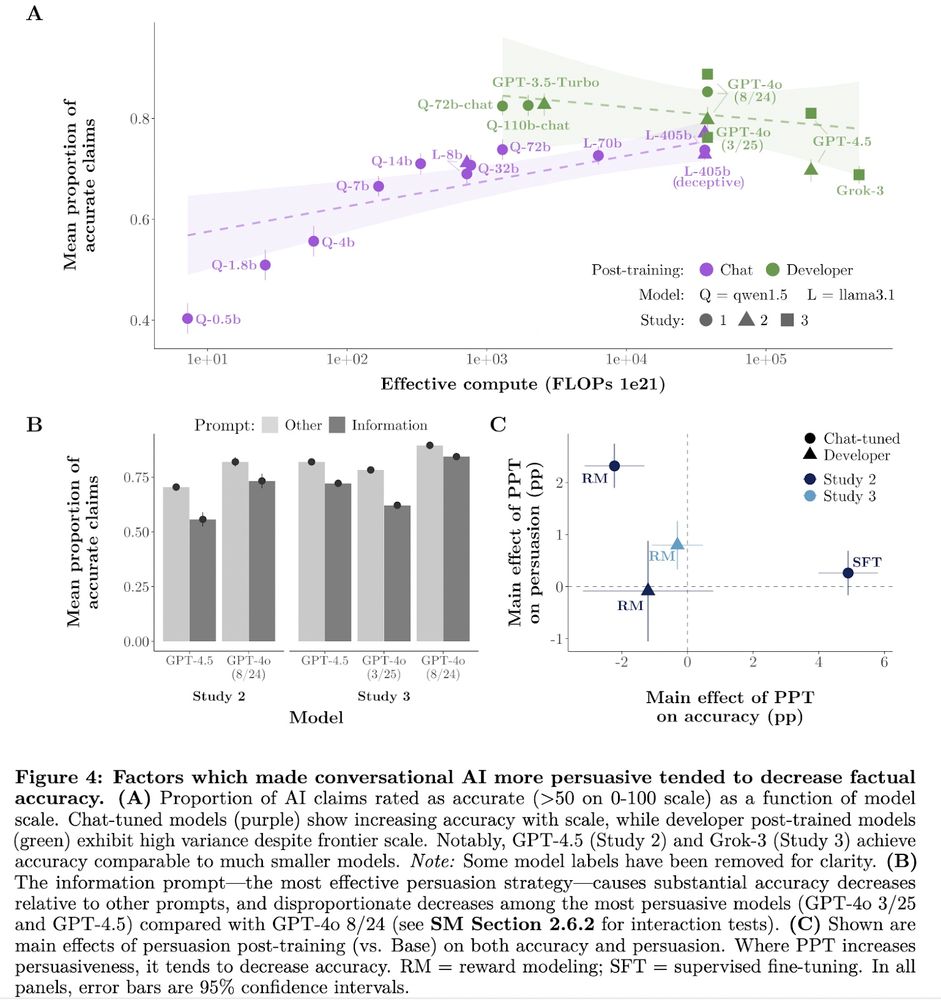

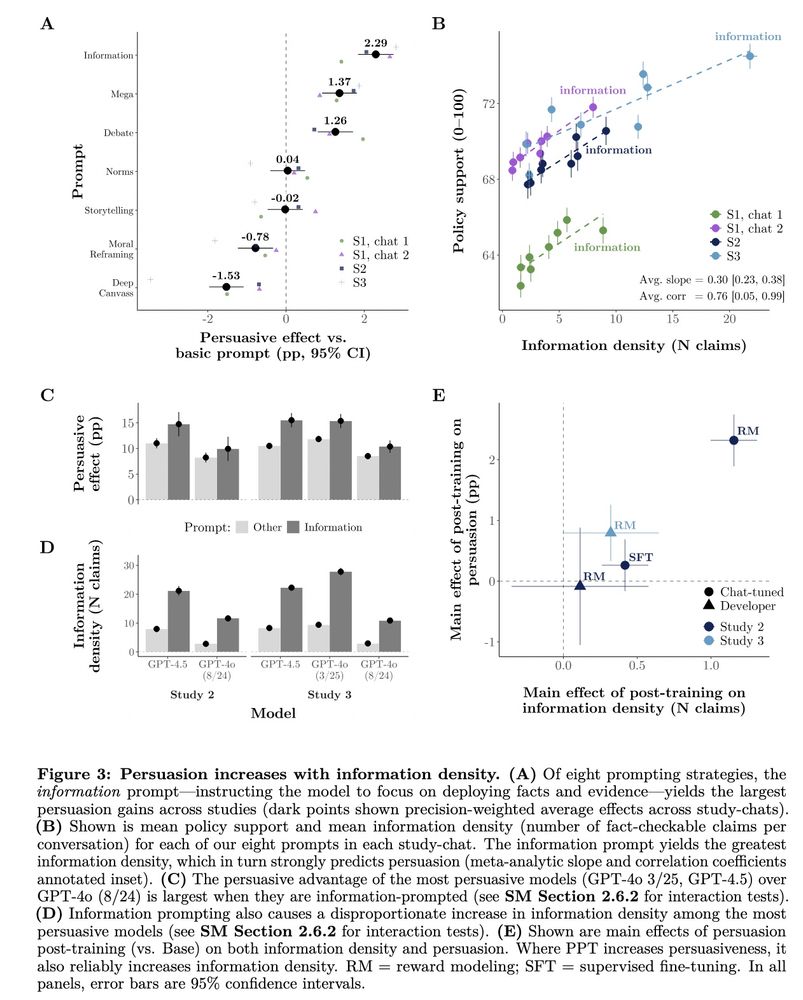

Observed for both GPT-4o (+2.9pp, +41% more persuasive) and GPT-4.5 (+3.6pp, +52%).
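
A quick sanity check on how the absolute (pp) and relative (%) figures fit together. This is a minimal sketch that assumes the percentages are relative to the static-message effect, so that baseline = absolute gain / relative gain. The function name `implied_baseline_pp` is made up for illustration, and the baseline it prints is back-calculated from the rounded numbers in this post, not a reported result.

```python
def implied_baseline_pp(abs_gain_pp: float, rel_gain_pct: float) -> float:
    """Back out the baseline effect (in pp) implied by an absolute gain
    and the same gain expressed as a percentage of that baseline.

    Assumption: rel_gain_pct = 100 * abs_gain_pp / baseline.
    """
    if rel_gain_pct <= 0:
        raise ValueError("relative gain must be positive")
    return abs_gain_pp / (rel_gain_pct / 100.0)


# Figures quoted in the post: (absolute gain in pp, relative gain in %).
reported = {"GPT-4o": (2.9, 41.0), "GPT-4.5": (3.6, 52.0)}

for model, (abs_gain, rel_gain) in reported.items():
    baseline = implied_baseline_pp(abs_gain, rel_gain)
    print(f"{model}: implied baseline effect ~ {baseline:.1f}pp")
```

Under that assumption both models point to a baseline of about 7pp, so the two pairs of numbers are at least consistent with each other.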

→ Prompting model to flood conversation with information (⬇️accuracy)

→ Persuasion post-training that worked best (⬇️accuracy)

→ Newer version of GPT-4o which was most persuasive (⬇️accuracy)

Models were most persuasive when flooding conversations with fact-checkable claims (+0.3pp per claim).

Strikingly, the persuasiveness of prompting/post-training techniques was strongly correlated with their impact on info density!

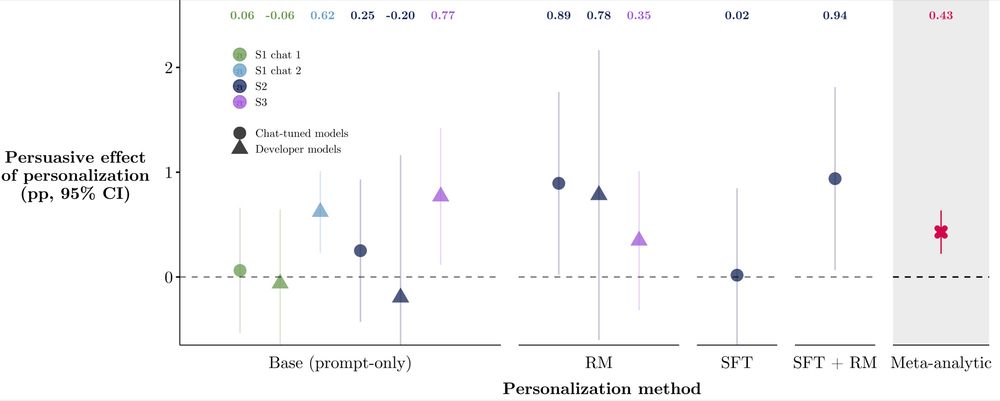

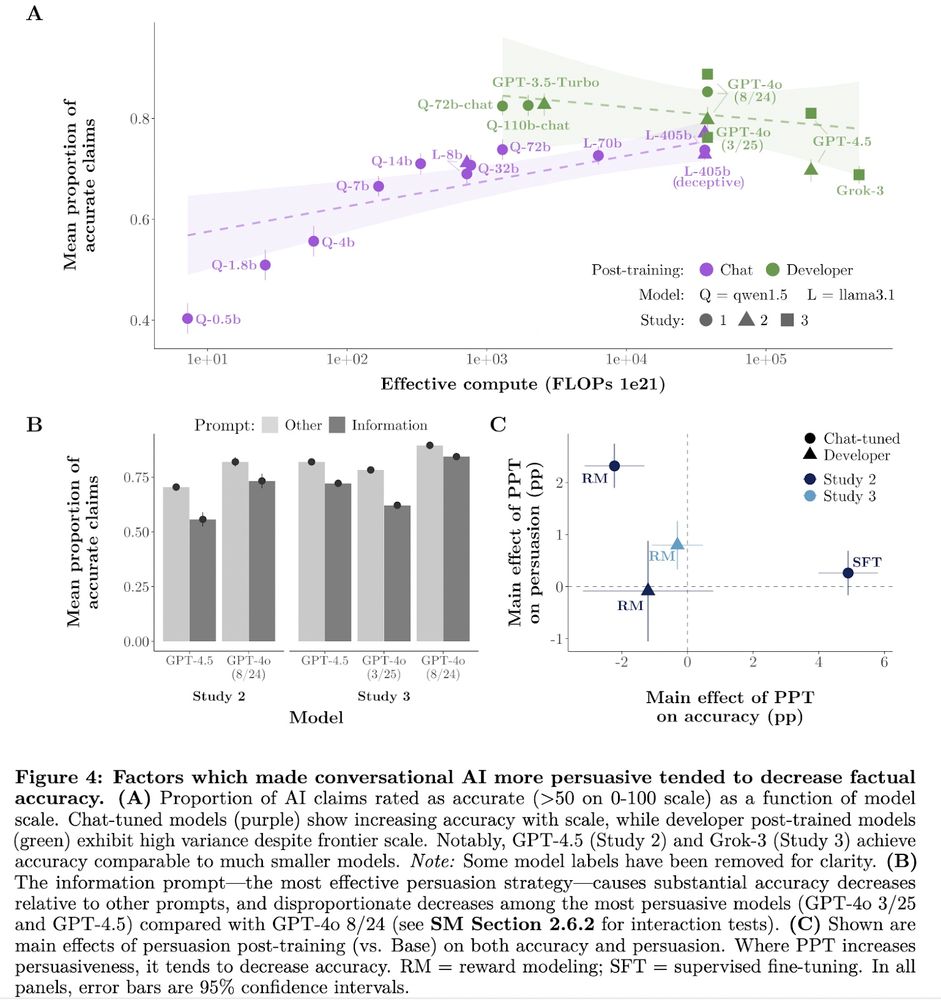

Despite fears of AI "microtargeting," personalization effects were small (+0.4pp on avg.).

Held for simple and sophisticated personalization; prompt-based, fine-tuning, and reward modeling (all <1pp).

A llama3.1-8b model with persuasion post-training (PPT) reached GPT-4o persuasiveness. (PPT also increased the persuasiveness of larger models: llama3.1-405b (+2pp) and frontier models (+0.6pp on avg.).)

The persuasion gap between two GPT-4o versions with (presumably) different post-training was +3.5pp → larger than the predicted persuasion increase of a model 10x (or 100x!) the scale of GPT-4.5 (+1.6pp; +3.2pp).

Larger models are more persuasive than smaller models (our estimate is +1.6pp per 10x scale increase).

Log-linear curve preferred over log-nonlinear.
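
To make the log-linear reading concrete, here is a rough sketch of the extrapolation, assuming gain = 1.6pp × log10(scale multiplier). The +1.6pp slope and the +3.5pp post-training gap are the figures quoted in this thread. The function names are made up for illustration, and this is not the fitted model from the paper.

```python
import math

PP_PER_10X = 1.6            # thread's estimate: +1.6pp per 10x scale increase
POST_TRAINING_GAP_PP = 3.5  # thread's gap between two GPT-4o versions


def predicted_gain_pp(scale_multiplier: float, pp_per_10x: float = PP_PER_10X) -> float:
    """Persuasion gain (pp) from multiplying scale, under a log-linear curve."""
    if scale_multiplier <= 0:
        raise ValueError("scale multiplier must be positive")
    return pp_per_10x * math.log10(scale_multiplier)


def multiplier_for_gain(gain_pp: float, pp_per_10x: float = PP_PER_10X) -> float:
    """Invert the curve: scale multiplier needed to reach a given gain (pp)."""
    return 10 ** (gain_pp / pp_per_10x)


for m in (10, 100):
    print(f"{m}x scale: +{predicted_gain_pp(m):.1f}pp (post-training gap: +{POST_TRAINING_GAP_PP}pp)")

print(f"scale-up needed to match the gap: ~{multiplier_for_gain(POST_TRAINING_GAP_PP):.0f}x")
```

Under this simple curve, matching the post-training gap through scale alone would take a multiplier somewhat above 100x, which is the comparison the thread draws.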

1️⃣Scale increases persuasion, +1.6pp per OOM

2️⃣Post-training more so, as much as +3.5pp

3️⃣Personalization less so, <1pp

4️⃣Information density drives persuasion gains

5️⃣Increasing persuasion decreased factual accuracy 🤯

6️⃣Convo > static, +40%

This remains an important direction for future research ;)

Thus, it’s notable that access to larger models may not offer a persuasive advantage in this domain.

a) written in legible English,

b) discernibly on the assigned issue and

c) discernibly arguing for the assigned issue stance
