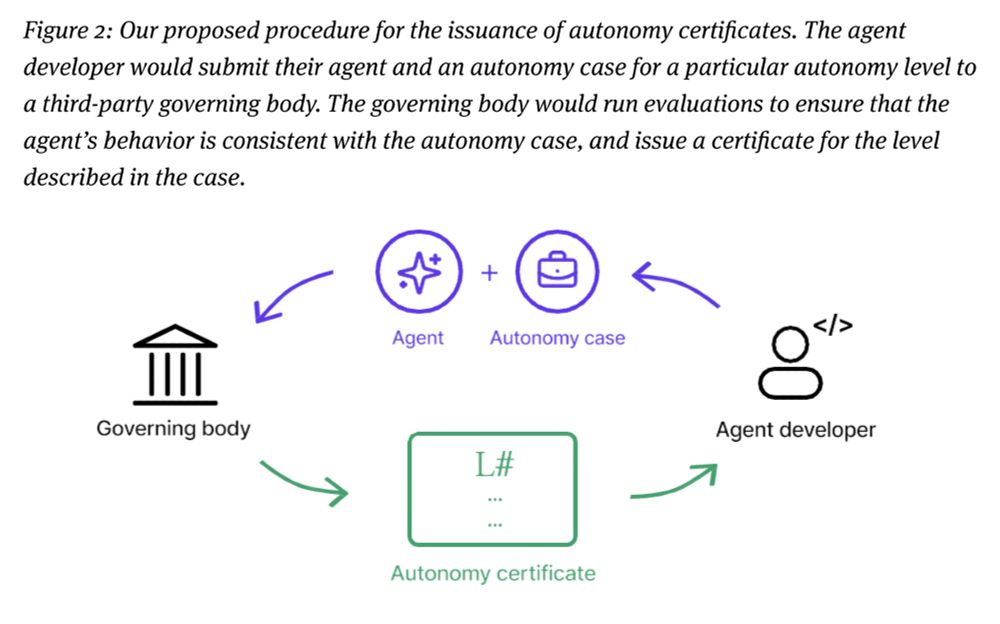

We argue that agent autonomy can be a deliberate design decision, independent of the agent's capability or environment. This framework offers a practical guide for designing human-agent & agent-agent collaboration for real-world deployments.

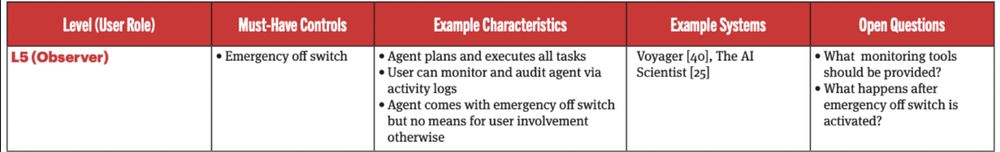

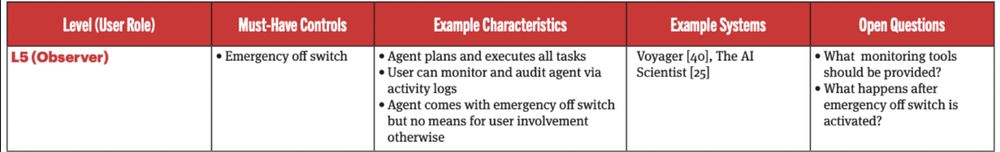

L5 (user as observer): The agent operates autonomously over long time horizons and never seeks user involvement. The user passively monitors the agent via activity logs and has access to an emergency off switch.

Example: Sakana AI's AI Scientist.
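Here is a minimal sketch of this fully autonomous mode, assuming a simple step-based agent. The `run_observed` function, the step callables, and the use of a `threading.Event` as the "off switch" are illustrative assumptions, not how the AI Scientist is actually built. The agent never asks for input. It writes every step to an activity log and checks the off switch before each step.

```python
import threading
from typing import Callable, List


def run_observed(
    steps: List[Callable[[], str]],
    activity_log: List[str],
    off_switch: threading.Event,
) -> bool:
    """Hypothetical observer-mode loop: run every step unattended.

    Returns True if all steps ran, False if the user pulled the off switch.
    """
    for i, step in enumerate(steps):
        # The user's only direct control: an emergency stop checked before each step.
        if off_switch.is_set():
            activity_log.append(f"step {i}: halted by off switch")
            return False
        result = step()
        # The user passively monitors progress by reading this log.
        activity_log.append(f"step {i}: {result}")
    return True


# Usage: a two-step run that no one interrupts.
log: List[str] = []
stop = threading.Event()
finished = run_observed([lambda: "generated idea", lambda: "ran experiment"], log, stop)
```

Because no step ever blocks on user input, the agent's progress depends only on its own steps. The user can observe through the log and halt the run, but cannot steer it.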

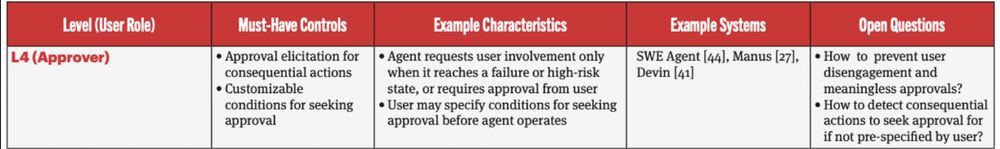

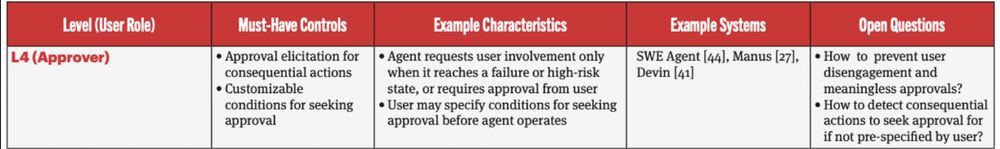

L4 (user as approver): The agent requests user involvement only when it needs approval for a high-risk action (e.g., writing to a database) or when it fails and needs the user's help.

Example: most coding agents (Cursor, Devin, GitHub Copilot Agent, etc.).
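The approval-and-escalation pattern can be sketched as follows. This sketch assumes that each action carries a `high_risk` flag and that the user is reachable through two callbacks, `approve` and `ask_for_help`. All of these names are hypothetical and are not taken from any of the coding agents listed above.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    name: str
    run: Callable[[], None]
    high_risk: bool = False  # e.g., writing to a database


def run_with_approvals(
    actions: List[Action],
    approve: Callable[[Action], bool],
    ask_for_help: Callable[[Action, Exception], bool],
) -> List[str]:
    """Hypothetical approver-mode loop: act autonomously, involve the user
    only for high-risk approvals or after a failure. Returns one outcome per action."""
    outcomes: List[str] = []
    for action in actions:
        # Involvement point 1: high-risk actions need explicit sign-off.
        if action.high_risk and not approve(action):
            outcomes.append(f"{action.name}: rejected")
            continue
        try:
            action.run()
            outcomes.append(f"{action.name}: done")
        except Exception as err:
            # Involvement point 2: on failure, hand the problem to the user.
            # ask_for_help returns True if the user resolved it and the agent should go on.
            if ask_for_help(action, err):
                outcomes.append(f"{action.name}: resolved by user")
            else:
                outcomes.append(f"{action.name}: failed")
                break
    return outcomes
```

Low-risk actions that succeed never reach the user. Which actions count as `high_risk` is the design decision that sets how often the user is interrupted.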

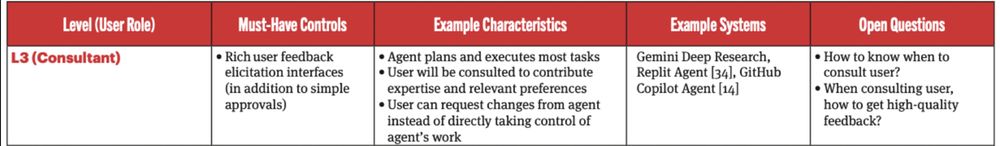

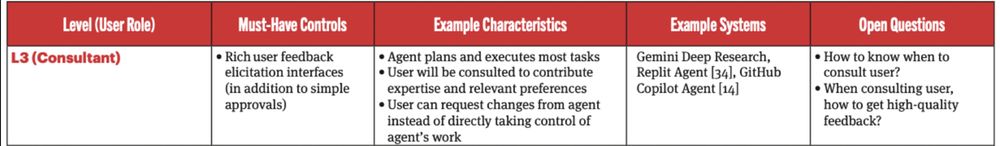

L3 (user as consultant): The agent takes the lead in task planning and execution, but actively consults the user to elicit rich preferences and feedback. Unlike at L1 and L2, the user can no longer directly control the agent's workflow.

Example: deep research systems.
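One way to picture this relationship is the following minimal sketch. The agent drafts and owns the plan, but it queries the user through an `ask` callback and folds the answers into its preferences before it acts. The question list, the `ask` callback, and the plan format are hypothetical. The user never receives a handle for editing the plan directly.

```python
from typing import Callable, Dict, List


def consult_and_plan(
    task: str,
    questions: List[str],
    ask: Callable[[str], str],
) -> List[str]:
    """Hypothetical consultant-mode planner: elicit preferences, keep control of the plan."""
    # The agent actively consults the user on each open question.
    preferences: Dict[str, str] = {q: ask(q) for q in questions}
    # The agent, not the user, turns those preferences into concrete steps.
    plan = [f"research: {task}"]
    plan += [f"apply preference '{q}' -> {a}" for q, a in preferences.items()]
    plan.append(f"write report: {task}")
    return plan
```

The user's input shapes the plan only through the answers they give. Compare this with a collaborator or operator setup, where the user would edit or order the steps themselves.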

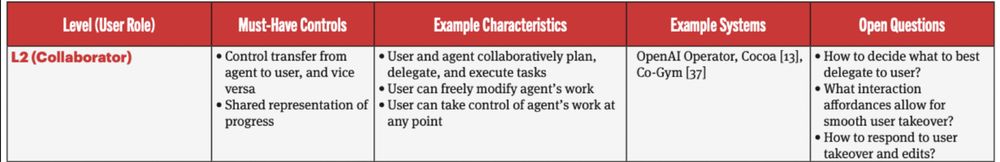

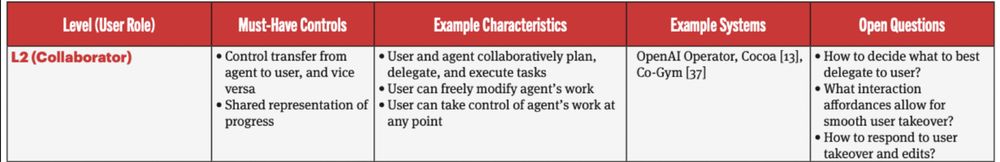

L2 (user as collaborator): The user and the agent plan and execute tasks together. They hand off information to each other and use shared environments and representations to build common ground.

Example: Cocoa (arxiv.org/abs/2412.10999).

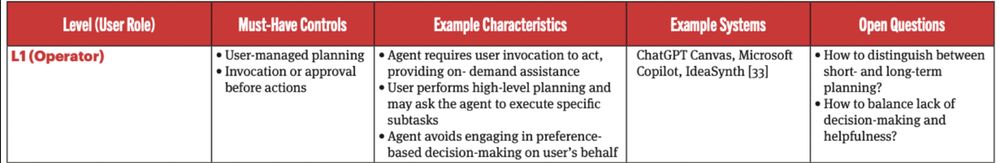

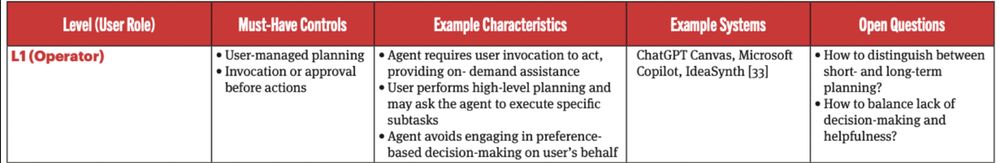

L1 (user as operator): The user is in charge of high-level planning and steers the agent. The agent acts only when directed, providing on-demand assistance.

Example: your average "copilot" that drafts your emails when you ask it to.

arXiv: arxiv.org/abs/2506.12469.

Co-authored w/ David McDonald and @axz.bsky.social.

Summary thread below.

Web: knightcolumbia.org/content/leve....