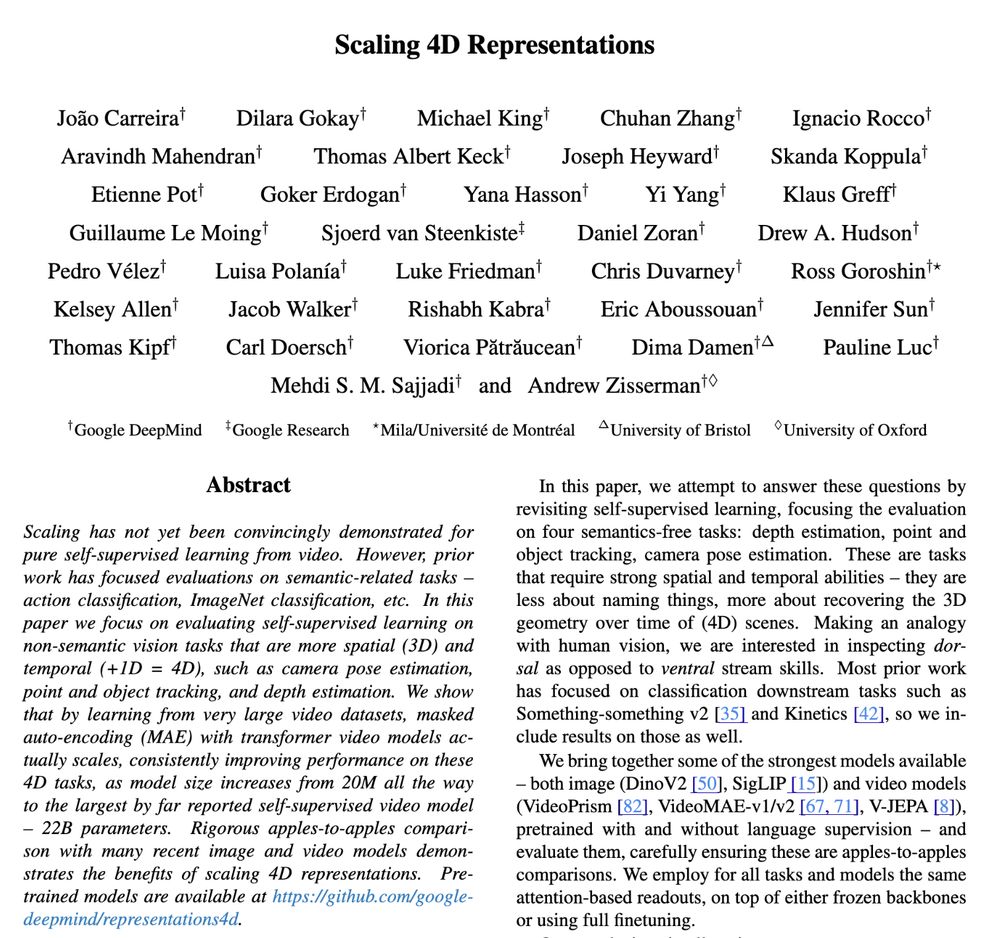

Self-supervised learning from video does scale! In our latest work, we scaled masked auto-encoding models to 22B params, boosting performance on pose estimation, tracking & more.

Paper: arxiv.org/abs/2412.15212

Code & models: github.com/google-deepmind/representations4d

At @naverlabseurope.bsky.social in Grenoble (Meylan), France.

careers.werecruit.io/en/naver-lab...

We’ll also be presenting this work at:

📍 @egu.eu on 02/04 in Vienna

📍 @esa.int / NASA Workshop on Foundation Models on 05/04 in Rome

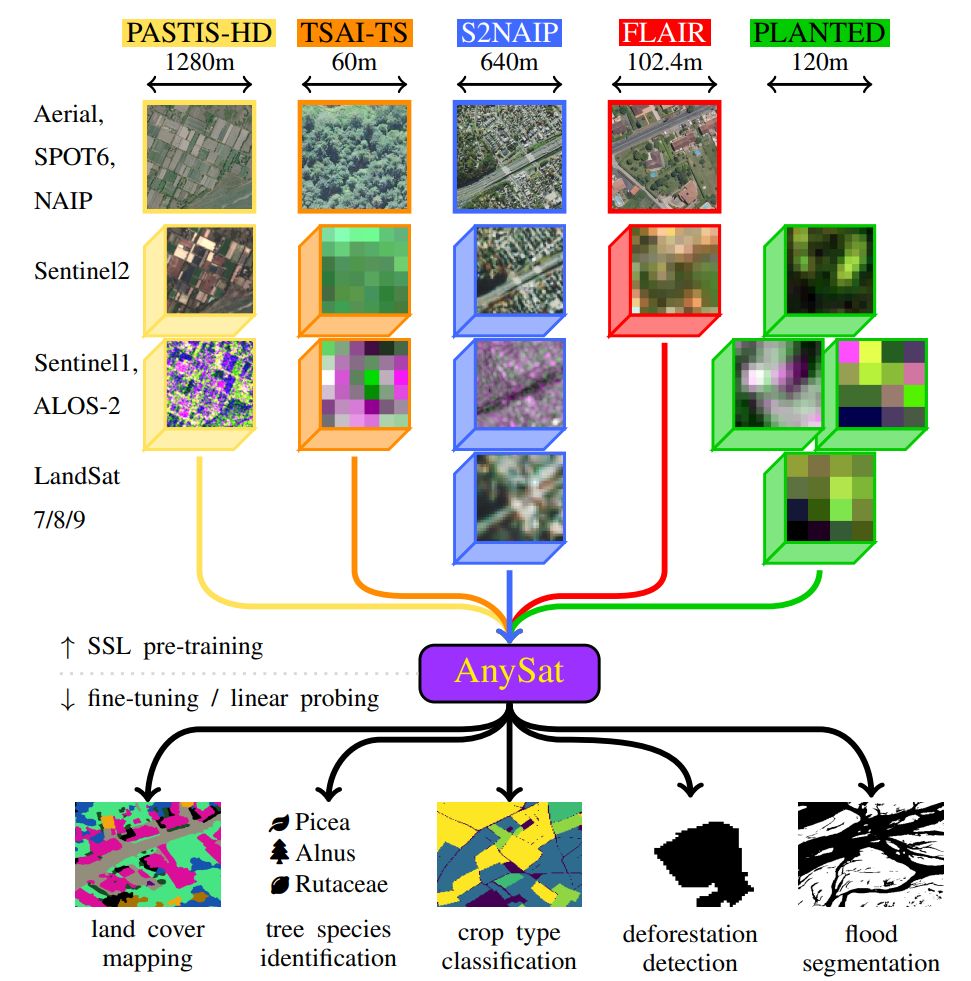

🛰️ AnySat: One Earth Observation Model for Many Resolutions, Scales, and Modalities

@gastruc.bsky.social @nicaogr.bsky.social @loicland.bsky.social

📄 pdf: arxiv.org/abs/2412.14123

🌐 webpage: gastruc.github.io/anysat

LPOSS is a training-free method for open-vocabulary semantic segmentation using Vision-Language Models.

Webpage & code: juliettemarrie.github.io/ludvig

TL;DR: a comprehensive benchmark dataset covering various physical principles (fluid dynamics, optics, solid mechanics, magnetism, and thermodynamics), evaluated on recent video generators.
