📍Assistant Professor at UNC-Chapel Hill (🇺🇸), PhD from EPFL (🇨🇭).

🌍 josephlemaitre.com

If priced out of a bet, the best thing is to sell the opportunity to bet to a party with a larger bankroll (if possible).

There are many cases in life where uncertainty can be traded at a discount for certainty (an insurance policy is a bet on a home getting flooded, bank loans, ...)

Task also matters: it is incredible and safe for plotting and front-end work, but I found it slows me down on many pure modeling tasks. All anecdotal, obviously.

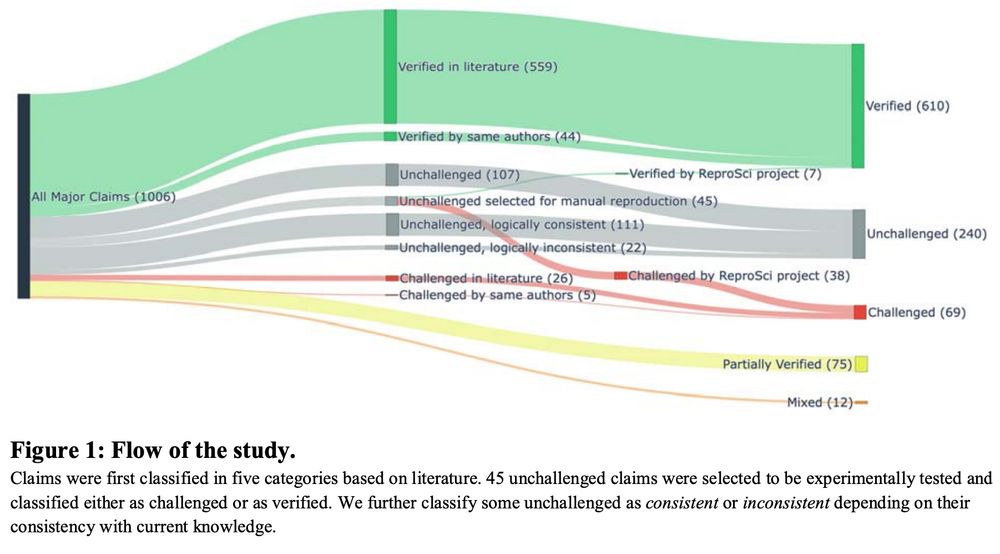

~80% of claims could be verified. Moreover, some challenged claims just reflect the field advancing its knowledge/tools.

The lesson: if the tools are good and the research largely exempt from direct translation pressures, science works.

3.5/n

But, many caveats (cont.)

4.3/n

Hope you get back the you get a list of the questions

Perhaps my notes are too scattered. Or my prompt is bad.

arxiv.org/pdf/2403.154... is also a good read (yay for independent researchers).

That's for non-coders. For coders, there is a before and after using Claude Code. A lot of the academic literature on LLMs brushes over code (like the big PNAS piece recently, I forget by whom).
