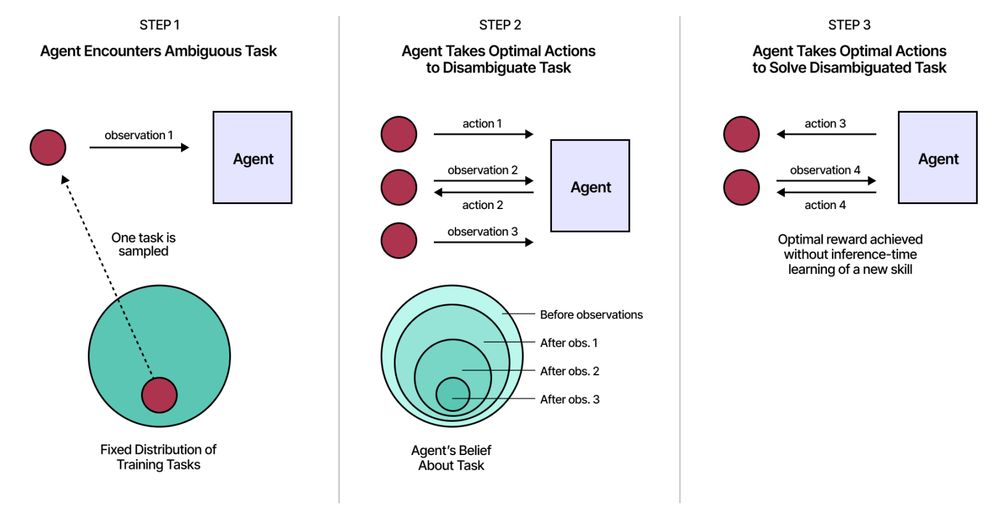

Related to @jeffclune's AI-GAs, @_rockt, @kenneth0stanley, @err_more, @MichaelD1729, @pyoudeyer

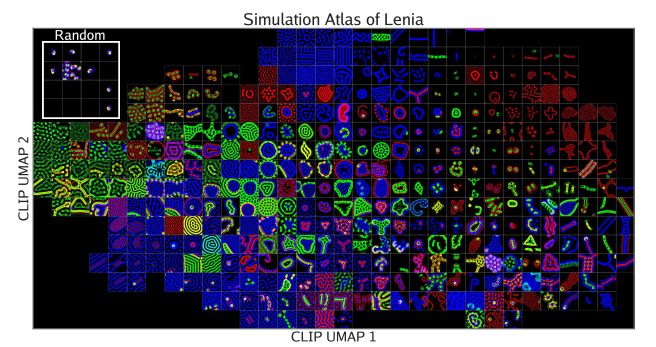

See work done by @risi1979 @drmichaellevin @hardmaru @BertChakovsky @sina_lana + many others

From "Let's Take the Con out of Econometrics" by Edward Leamer, >3k citations