https://jeremie-beucler.github.io/

We also re-analyzed existing base-rate stimuli from past research using our method. The results revealed a large, previously unnoticed variability in belief strength, which could be problematic in some cases.

This method allows us to create a massive database of over 100,000 base-rate items, each with an associated belief strength value.

Here is an example of all possible items for a single adjective out of 66 ("Arrogant")! Better to be a kindergarten teacher than a politician in this case. 🤭

And it works really well! LLM-generated ratings showed a very strong correlation with human judgments.

More importantly, our belief-strength measure robustly predicted participants' actual choices in a separate base-rate neglect experiment!

We argue that measuring “belief strength” is a major bottleneck in reasoning research, which mostly relies on conflict vs. no-conflict items.

It requires costly human ratings and is rarely done parametrically, limiting the development of theoretical & computational models of biased reasoning.

🚨 New preprint: Using Large Language Models to Estimate Belief Strength in Reasoning 🚨

When asked: "There are 995 politicians and 5 nurses. Person 'L' is kind. Is Person 'L' more likely to be a politician or a nurse?", most people will answer "nurse", neglecting the base-rate info.

A 🧵👇
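
To make the base-rate problem concrete, here is a minimal sketch of the Bayesian arithmetic behind the politician/nurse example. The likelihood values (how probable it is that a nurse or a politician is "kind") are hypothetical numbers picked for illustration, not estimates from the preprint, and `posterior_nurse` is just an illustrative helper name.

```python
def posterior_nurse(n_politicians, n_nurses,
                    p_kind_given_politician, p_kind_given_nurse):
    """Posterior probability that a 'kind' person is a nurse (Bayes' rule)."""
    total = n_politicians + n_nurses
    prior_nurse = n_nurses / total
    prior_politician = n_politicians / total
    # Joint probability of each group membership together with the trait.
    joint_nurse = prior_nurse * p_kind_given_nurse
    joint_politician = prior_politician * p_kind_given_politician
    return joint_nurse / (joint_nurse + joint_politician)


# Hypothetical likelihoods: 90% of nurses and 30% of politicians are "kind".
p = posterior_nurse(995, 5,
                    p_kind_given_politician=0.3,
                    p_kind_given_nurse=0.9)
print(f"P(nurse | kind) = {p:.3f}")
```

Under these assumed numbers, the stereotype favors "nurse" by a factor of 3, yet the posterior probability stays below 2%. With base rates of 995 to 5, the likelihood ratio would have to exceed 199 before "nurse" became the more probable answer.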

📍 Come see our poster at #CCN2025, Aug 12, 1:30–4:30pm

We show how a biased drift-diffusion model can explain choice, RT and confidence in a base-rate neglect task, revealing why more deliberation doesn’t always fix bias.
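
For readers unfamiliar with drift-diffusion models, here is a rough sketch of a generic simulation with a starting-point bias. This is not the model from the poster: treating the bias as a shifted starting point is an assumption, every name and parameter value is hypothetical, and confidence is not modeled at all.

```python
import random


def simulate_ddm(drift, start_bias, threshold=1.0, noise=1.0,
                 dt=0.005, max_time=5.0, rng=random):
    """Simulate one trial of a two-boundary drift-diffusion process.

    start_bias is the starting point as a fraction of the threshold
    (0 = unbiased, positive = closer to the upper boundary).
    Returns (choice, rt); choice is None if no boundary is hit in time.
    """
    x = start_bias * threshold
    t = 0.0
    step_sd = noise * dt ** 0.5  # standard deviation of the noise per step
    while t < max_time:
        x += drift * dt + rng.gauss(0.0, step_sd)
        t += dt
        if x >= threshold:
            return "upper", t   # assumed here: stereotype-based response
        if x <= -threshold:
            return "lower", t   # assumed here: base-rate response
    return None, t


def proportion_upper(drift, start_bias, n_trials=1000, seed=0):
    """Share of decided trials that end at the upper boundary."""
    rng = random.Random(seed)
    choices = [simulate_ddm(drift, start_bias, rng=rng)[0]
               for _ in range(n_trials)]
    decided = [c for c in choices if c is not None]
    return sum(c == "upper" for c in decided) / len(decided)


# Evidence drifts toward the base-rate response (negative drift) in both
# cases; only the starting point differs.
unbiased = proportion_upper(drift=-0.5, start_bias=0.0)
biased = proportion_upper(drift=-0.5, start_bias=0.5)
print(f"upper-boundary choices: unbiased={unbiased:.2f}, biased={biased:.2f}")
```

In this toy setup, starting closer to the upper boundary raises the share of upper-boundary choices even though the evidence itself points the other way.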

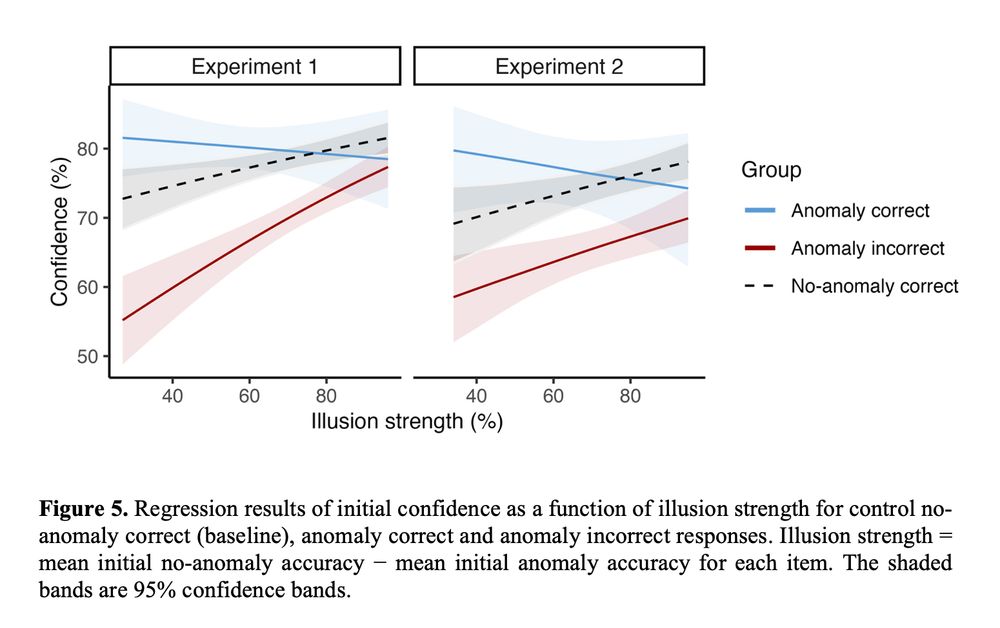

Finding #3: The strength of the illusion is key! As the semantic overlap gets stronger (e.g., "Moses" is closer to "Noah" than "Goliath" is), confidence in incorrect answers tended to increase, while confidence in correct answers tended to decrease. 📈📉

Finding #2: Even when participants got it wrong and fell for the illusion, they showed a significant error sensitivity (lower confidence). Interestingly, this effect was not affected by load and deadline, suggesting this error sensitivity is intuitive.

Finding #1: You don't always need to be slow to be right! 🐢 A significant number of participants intuitively spotted the anomaly from the start, without needing extra time and resources to deliberate. 🐇 Sound intuitive reasoning does happen.

To test this, we ran 4 experiments with over 500 participants! We used a two-response paradigm: first, a quick intuitive answer under time pressure & cognitive load. Then, a final, deliberated response with no constraints. Here are the main results:

These semantic illusions are often used to test for deliberate "System 2" thinking (e.g., in the verbal Cognitive Reflection Test). The classic theory? We intuitively fall for the illusion & need slow, effortful deliberation to correct the mistake. But is it really that simple?

New (and first) paper accepted at JEP:LMC 🎉

Ever fallen for this type of question: "How many animals of each kind did Moses take on the Ark?" Most say "Two," forgetting that it was Noah, not Moses, who took the animals on the Ark. But what’s really going on here? 🧵
