Read the full paper from the @Voxel51 team: arxiv.org/abs/2506.02359

- Annotating large visual datasets can be done 100,000x cheaper and 5,000x faster with public, off-the-shelf models while maintaining quality.

- Highly accurate models can be trained at a fraction of the time and cost of those trained from human labels.

Understanding this balance enables better tuning of auto-labeling pipelines, prioritizing overall model effectiveness over superficial label cleanliness.

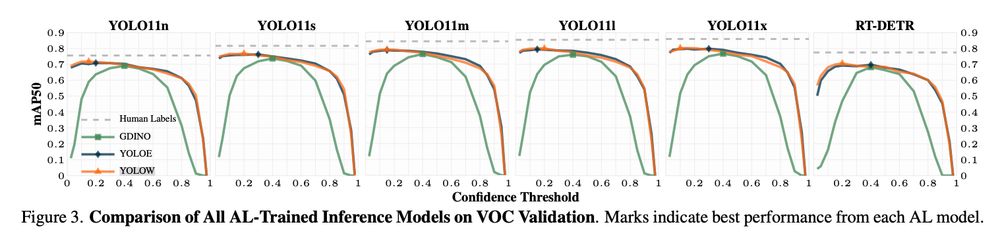

This is an interesting one. Somewhat counterintuitively, setting a relatively low confidence threshold (α ≈ 0.2) for auto labels maximized the precision and recall of downstream models trained from auto-labeled data.

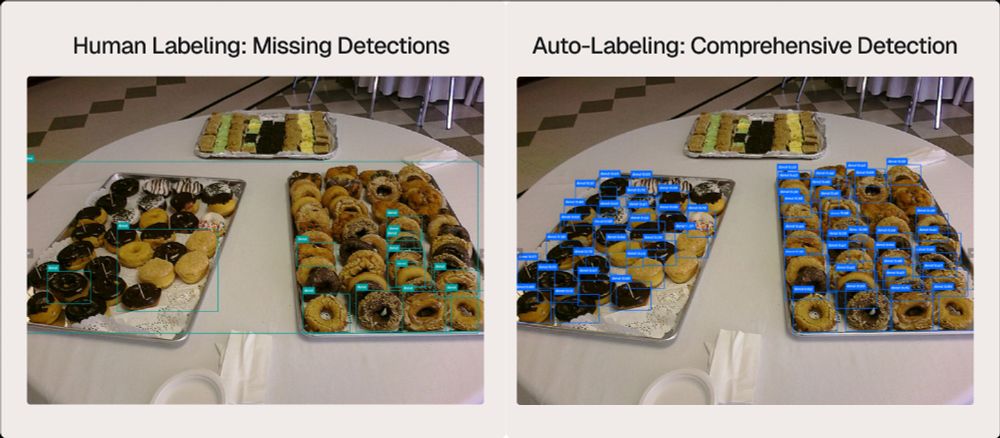
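To make the threshold idea concrete, here is a minimal sketch of filtering auto labels by confidence. The `Detection` class, the `filter_by_confidence` helper, and the sample predictions are all hypothetical and only illustrate the mechanism; they are not the tool's actual API or the paper's data.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical container for one auto-generated label."""
    label: str
    confidence: float
    box: tuple  # (x_min, y_min, x_max, y_max)


def filter_by_confidence(detections, threshold=0.2):
    """Keep detections whose confidence is at least `threshold`."""
    return [d for d in detections if d.confidence >= threshold]


# Made-up predictions for a single image.
raw = [
    Detection("dog", 0.91, (10, 20, 110, 180)),
    Detection("person", 0.35, (120, 15, 200, 190)),
    Detection("bicycle", 0.22, (30, 90, 160, 200)),
    Detection("cat", 0.08, (5, 5, 40, 40)),
]

kept_low = filter_by_confidence(raw, threshold=0.2)   # permissive threshold
kept_high = filter_by_confidence(raw, threshold=0.8)  # strict threshold

print([d.label for d in kept_low])
print([d.label for d in kept_high])
```

With a low threshold, more candidate labels survive, including some noisy ones; a strict threshold gives cleaner labels but discards many objects the downstream model could have learned from.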

The image below compares a human-labeled image (left) with an auto-labeled one (right). Humans are clearly, umm, lazy here :)

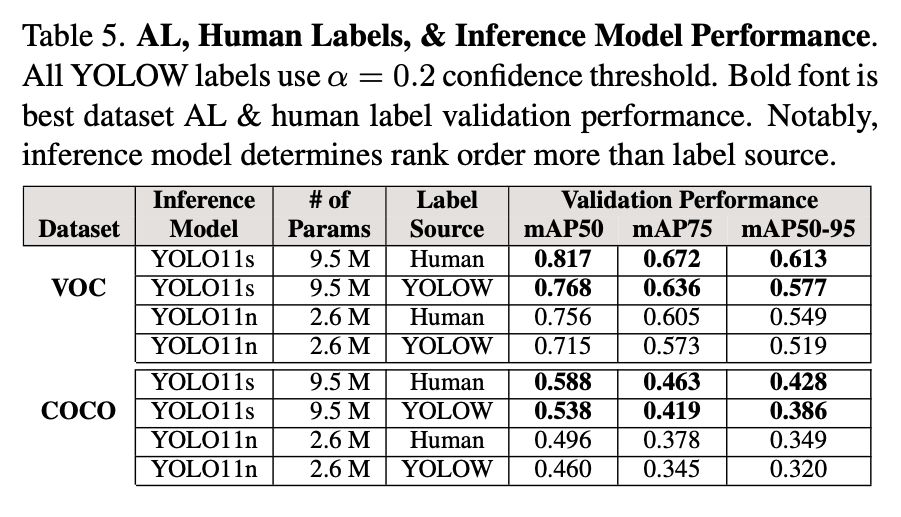

The mean average precision (mAP) of inference models trained from auto labels approached that of models trained from human labels.

On VOC, models trained from auto labels reached an mAP50 of 0.768, close to the 0.817 achieved with human-labeled data.

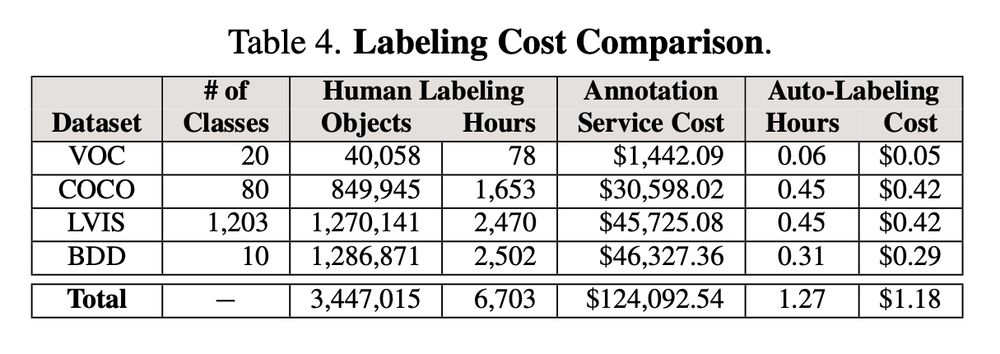

📊 Massive cost and time savings.

Verified Auto Labeling costs $1.18 and 1 hour of @NVIDIA L40S GPU time, vs. over $124,092 and 6,703 hours for human annotation.

Read our blog to dive deeper: link.voxel51.com/verified-auto-labeling-tw/

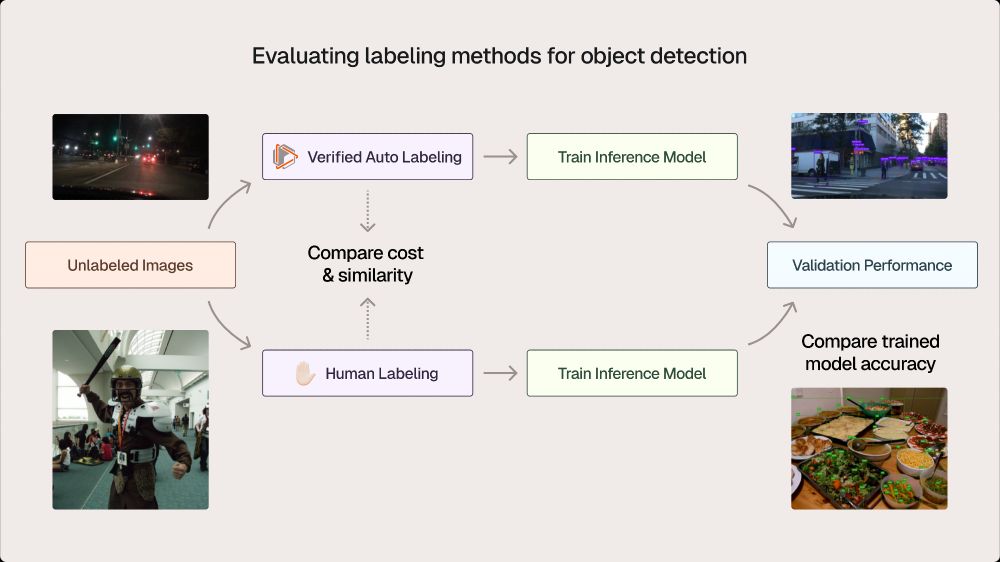
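As a quick sanity check on the savings, the ratios implied by the quoted figures can be worked out directly. The variable names are ours; the numbers are the ones stated in this thread, and since the human figures are given as "over", the ratios are lower bounds.

```python
# Figures quoted in the thread.
human_cost_usd = 124_092
human_hours = 6_703
auto_cost_usd = 1.18
auto_hours = 1

cost_ratio = human_cost_usd / auto_cost_usd
time_ratio = human_hours / auto_hours

print(f"cost: at least {cost_ratio:,.0f}x cheaper")
print(f"time: at least {time_ratio:,.0f}x faster")
```

Both ratios land in the same range as the headline claims of roughly 100,000x cheaper and 5,000x faster.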

- Translated our research into a new annotation tool called Verified Auto Labeling

- Used off-the-shelf foundation models to label several benchmark datasets

- Evaluated these labels relative to the human-annotated ground truth
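Evaluating auto labels against human ground truth can be sketched roughly as below: match each auto-labeled box to an unmatched ground-truth box of the same class by intersection over union (IoU), then compute precision and recall. This is a simplified illustration with made-up boxes and a greedy matcher, not the evaluation protocol used in the paper; `iou` and `precision_recall` are hypothetical helper names.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(auto, truth, iou_threshold=0.5):
    """Greedy one-to-one matching of auto labels to same-class ground truth."""
    matched = set()  # indices of ground-truth boxes already claimed
    true_positives = 0
    for label, box in auto:
        best_j, best_iou = None, iou_threshold
        for j, (t_label, t_box) in enumerate(truth):
            if j in matched or t_label != label:
                continue
            score = iou(box, t_box)
            if score >= best_iou:
                best_j, best_iou = j, score
        if best_j is not None:
            matched.add(best_j)
            true_positives += 1
    precision = true_positives / len(auto) if auto else 0.0
    recall = true_positives / len(truth) if truth else 0.0
    return precision, recall


# Made-up example: two human labels, three auto labels.
truth = [("dog", (0, 0, 10, 10)), ("cat", (20, 20, 30, 30))]
auto = [
    ("dog", (1, 1, 10, 10)),        # overlaps the human "dog" box
    ("cat", (50, 50, 60, 60)),      # misses the human "cat" box
    ("dog", (100, 100, 110, 110)),  # no corresponding human label
]

precision, recall = precision_recall(auto, truth)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Note that an auto label with no human counterpart counts as a false positive here even when the object is really in the image, so incomplete human annotations can make auto labels look worse than they are.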

Our goal with this experiment was to quantitatively evaluate how well zero-shot approaches perform and identify the parameters and configurations that unlock optimal results.
