prompt: “I’m trying to dial in this v60 of huatusco with my vario. temp / grind recommendations?”

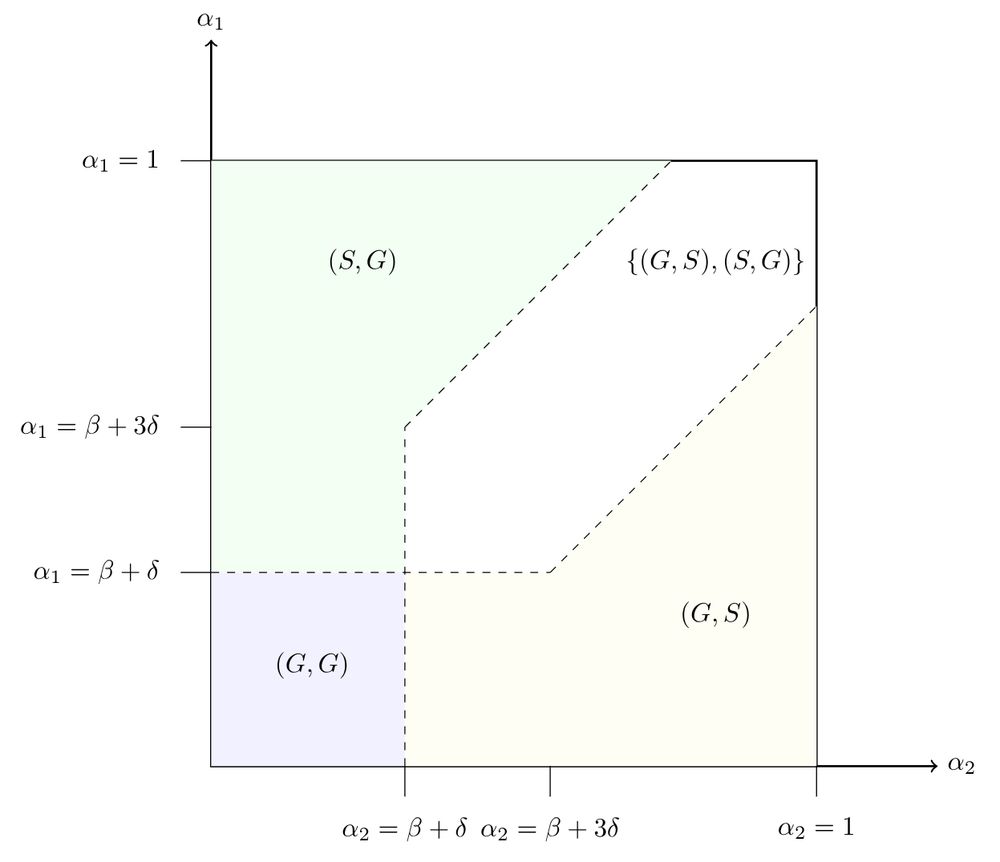

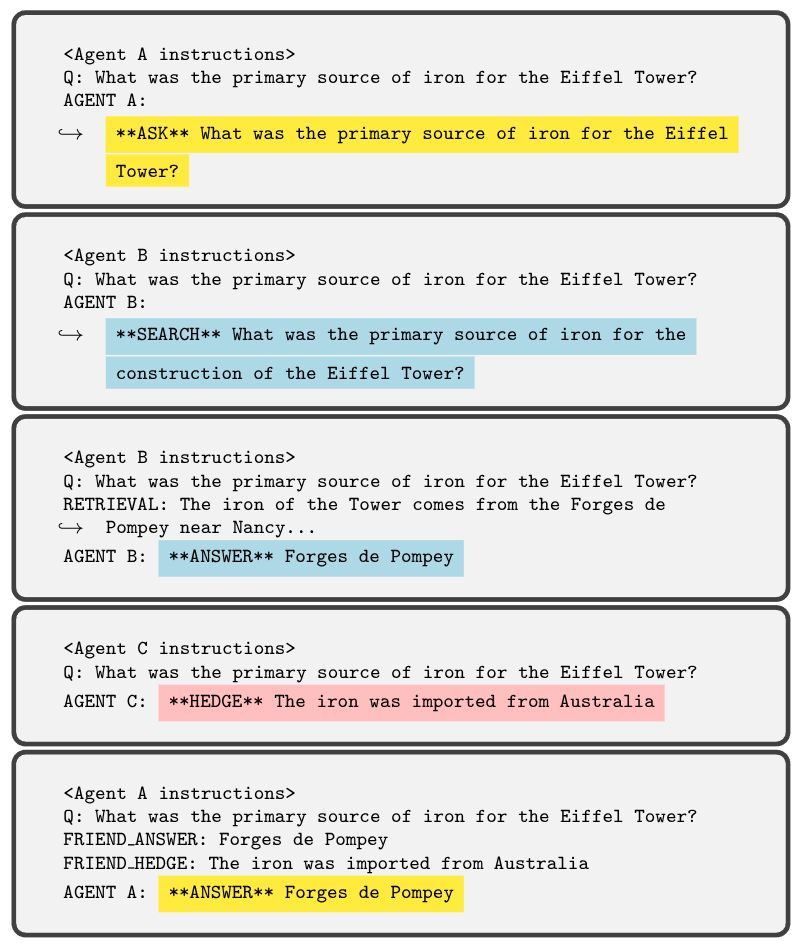

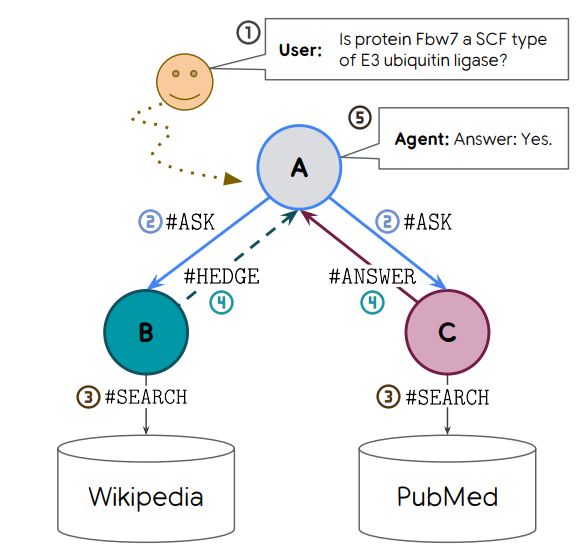

We use both synthetic and real data to show when active example selection helps and how much cost-optimal annotation can save.
