We recently had the great fortune to publish in @nature.com. We even made the cover of the issue, with a witty tagline that summarizes the paper: "Cheat Code: Delegating to AI can encourage dishonest behaviour"

🧵 1/n

Paper: www.nature.com/articles/s41...

If you're interested in studying with me, here's a new funding scheme just launched by @maxplanck.de: The Max Planck AI Network

ai.mpg.de

Application deadline 31 October

Built-in LLM safeguards are insufficient to prevent this kind of misuse. We tested various guardrail strategies and found that highly specific prohibitions on cheating inserted at the user level are the most effective. However, this solution is neither scalable nor practical.

People are more likely to request dishonest behaviour when they can delegate the action to an AI. This effect was especially pronounced when the interface allowed for ambiguity in the agent’s behaviour.

Our new paper in @nature.com, 5 years in the making, is out today.

www.nature.com/articles/s41...

We are organizing this in-person event in Berlin on 10 Oct 2025, with a 'School on Cross Cultural AI' on 9 Oct.

We have an amazing line-up of speakers (see link)

Registration is open, but places are limited: derdivan.org/event/sympos...

I am delighted to share that applications are now open for the MAXMINDS mentoring program.

Experimental Evidence for the Propagation and Preservation of Machine Discoveries in Human Populations

arxiv.org/abs/2506.17741

with team members @levinbrinkmann.bsky.social, @thomasfmueller.bsky.social, Ann-Marie Nussberger, @maximederex.bsky.social, Sara Bonati, and Valerii Chirkov

AI scares you, but can you scare it?

Check out our new Halloween AI project. Each AI has a different phobia. You can try to spook the machine by crafting a prompt to generate a spooky image.

We are giving away cash prizes for the best images.

spookthemachine.com

Mutual benefits of social learning and algorithmic mediation for cumulative culture

Read the paper here: arxiv.org/html/2410.00...

waymo.com/safety/impact/

If true, will humans adopt these cars now that they are safer?

Our work (with @azimshariff.bsky.social & @jfbonnefon.bsky.social) suggests not.

doi.org/10.1016/j.tr...

Friends, you are cordially invited to the opening of my 2nd solo exhibition, titled 'Portraits of the Artificial', and curated by Nadim Samman

Time: 7:00pm, Friday 28 June

Location: Schützallee 27, 14169 Berlin

RSVP link: www.eventbrite.de/e/eroffnung-...

machinebehavior.science

Delighted to share this Aesthetica Magazine interview about my oil paintings, in which I give faces to AI algorithms.

aestheticamagazine.com/exploratory-...

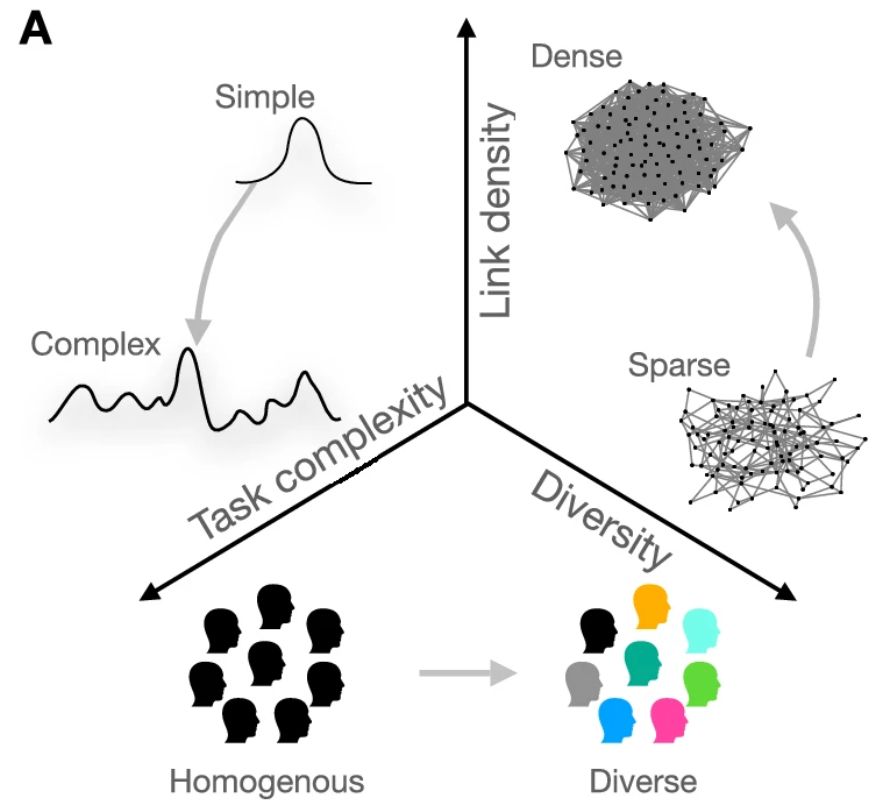

Network structure shapes the impact of diversity in collective learning

We study the interaction between

1. Task complexity (simple vs multi-peaked)

2. Social network density, and

3. Team's skill diversity

We find interesting & non-trivial interactions

www.nature.com/articles/s41...

Also, check out our stellar list of speakers.

machinebehavior.science

My co-chairs @azimshariff.bsky.social, @jfbonnefon.bsky.social and I look forward to seeing you in Berlin!

machinebehavior.science

Free access version: rdcu.be/drzoS
