#HCI #PeerReview #SciPub

#toolsforthought #ResearchSynthesis

#OpenScience #MetaSci #FoSci

🔎 Research: ethnography of peer review

🧑🏫 Teaching: Stats, DataViz

🐢 UMD: College of Info

🌐 PhD Candidate: Info Studies / HCI + Data

🏝️ OASISlab

Is this common to data mining conferences?

I like this sort of statement; it reminds me a bit of the 21-word solution. #metascience

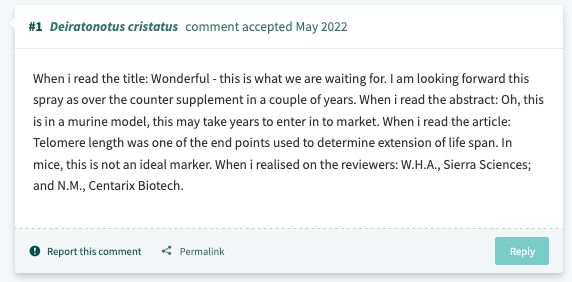

The story should be one of errors/potential fraud instead of a breakthrough, right?

pubpeer.com/publications...

Author reply in the thread:

The Google white paper that was published a while ago can be helpful: cloud.google.com/discover/wha...

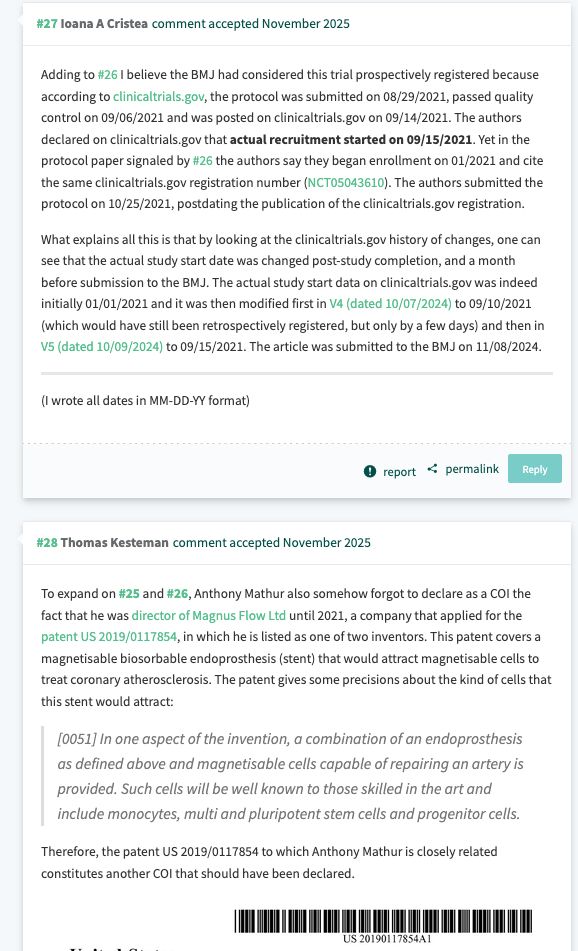

Section: Strategies for writing better prompts

apastyle.apa.org/jars/quant-t...

APA JARS-QUANT reporting guidelines mention diagnostics:

Another gem in the #peerreview literature, a joke paper, finds that it's Reviewer #3 who's the real problem.

The paper even has a credulous PubPeer comment!

Paper: doi:10.1111/ssqu.12824s

PubPeer: pubpeer.com/publications/80F9ACFE1DC2E6510A4CC3D2D841C1

Maybe try again?

The second screenshot is Liang et al. 2024: ai.nejm.org/doi/abs/10.1...

"Should Large Language Models" returns 73 hits.

Before asking "Can...?", ask "Should...?" In some cases, you'll save yourself a year's worth of research.

Those who use their tools will do so as they like.

Disclaimers won't matter much in the long run.

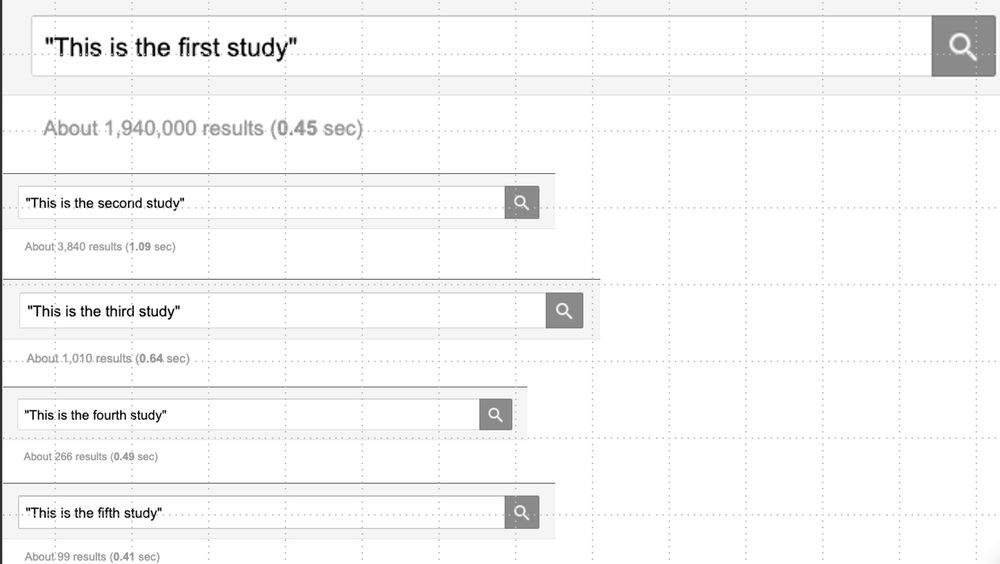

On Google Scholar, it returns almost 2 million hits.

"This is the second study..." returns 3,840 hits.

That's a difference of ~520X.
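A quick check of that ratio, using the rounded hit counts above (so the result is only approximate):

$$\frac{2{,}000{,}000}{3{,}840} \approx 520.8$$

Since the first count is "almost" 2 million rather than exactly 2 million, the true ratio is slightly lower, consistent with ~520X.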

I'm more likely to believe the latter claim.

📖 If you make a novelty claim, then back it up.

❌ Desk reject for failure to comply

Which other venues do this sort of thing? #metascience

"Festina lente"

(Latin; translation: "Make haste slowly")

This is the laziest and most honest method I've seen in my review so far:

"Whether this could have influenced the results remains currently unknown... Prompt designing is also time-consuming..."

A few thoughts from my recent use to consider:

1. Can I view a feed of papers/posts by sentiment category (e.g. only papers whose posts are more than 10% negative)? That'd be useful for finding problematic papers.
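A minimal sketch of the filter suggested in item 1, assuming each paper carries a list of per-post sentiment labels. The `Paper` class, its fields, the helper functions, and the sample data are all hypothetical illustrations, not any existing tool's API.

```python
from dataclasses import dataclass, field


@dataclass
class Paper:
    # Hypothetical record: a paper plus sentiment labels of the posts discussing it
    title: str
    post_sentiments: list[str] = field(default_factory=list)  # "positive" / "neutral" / "negative"


def negative_share(paper: Paper) -> float:
    """Fraction of a paper's posts labeled negative (0.0 if it has no posts)."""
    if not paper.post_sentiments:
        return 0.0
    negatives = sum(1 for s in paper.post_sentiments if s == "negative")
    return negatives / len(paper.post_sentiments)


def flag_papers(papers: list[Paper], threshold: float = 0.10) -> list[Paper]:
    """Keep papers whose negative share is strictly above the threshold, most negative first."""
    flagged = [p for p in papers if negative_share(p) > threshold]
    return sorted(flagged, key=negative_share, reverse=True)


# Hypothetical sample data for illustration only
papers = [
    Paper("A", ["positive"] * 9 + ["negative"]),      # exactly 10% negative: not flagged
    Paper("B", ["positive"] * 3 + ["negative"]),      # 25% negative: flagged
    Paper("C", ["neutral", "negative", "negative"]),  # ~67% negative: flagged
    Paper("D", []),                                    # no posts: not flagged
]

for p in flag_papers(papers):
    print(p.title, round(negative_share(p), 2))
```

Using a strict `>` means a paper sitting exactly at the threshold is left out of the feed; sorting by negative share puts the most contested papers at the top.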

❌ 7/10 contain shortcuts or impossible tasks.

❌ 7/10 fail outcome validity.

❌ 8/10 fail to disclose known issues.

preprint: arxiv.org/abs/2507.02825

blog: ddkang.substack.com/p/ai-agent-b...
