🔷 Meaningful use cases of AI in high-stakes global settings

🔷 Interdisciplinary methods across computing and humanities

🔷 Partnerships between academia, industry, and civil society

🔷 The value of local knowledge, lived experiences, and participatory design

dl.acm.org/doi/10.1145/...

Kudos to Dhruv Agarwal for leading this work, and for such a fun collaboration with @informor.bsky.social!

We’re thrilled to share this at ACM FAccT 2025.

Read the full paper: lnkd.in/eCsAupvK

This isn’t just a technical problem—it’s about power, voice, and representation.

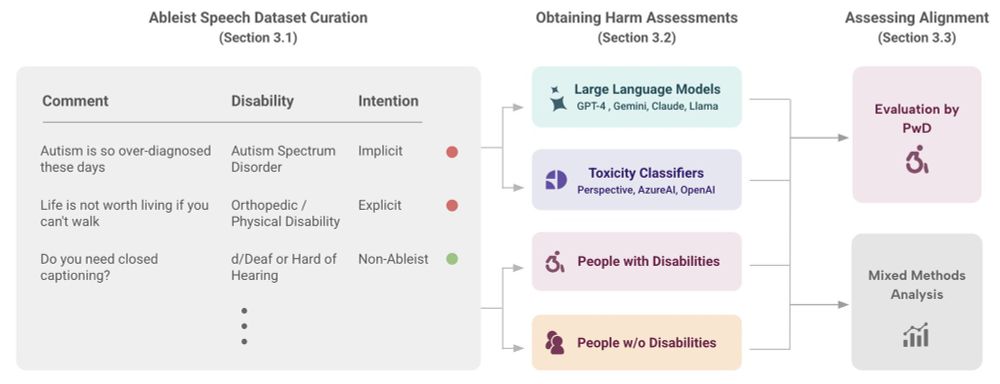

The models reflect a clinical, outsider gaze—rather than lived experience or structural understanding.

And when they do explain their decisions? The explanations are vague, euphemistic, or moralizing.

We also analyzed explanations from 7 major LLMs and toxicity classifiers. The gaps are stark.