Come see our poster at 4pm on Tuesday in East Exhibition Hall A-B, E-1208!

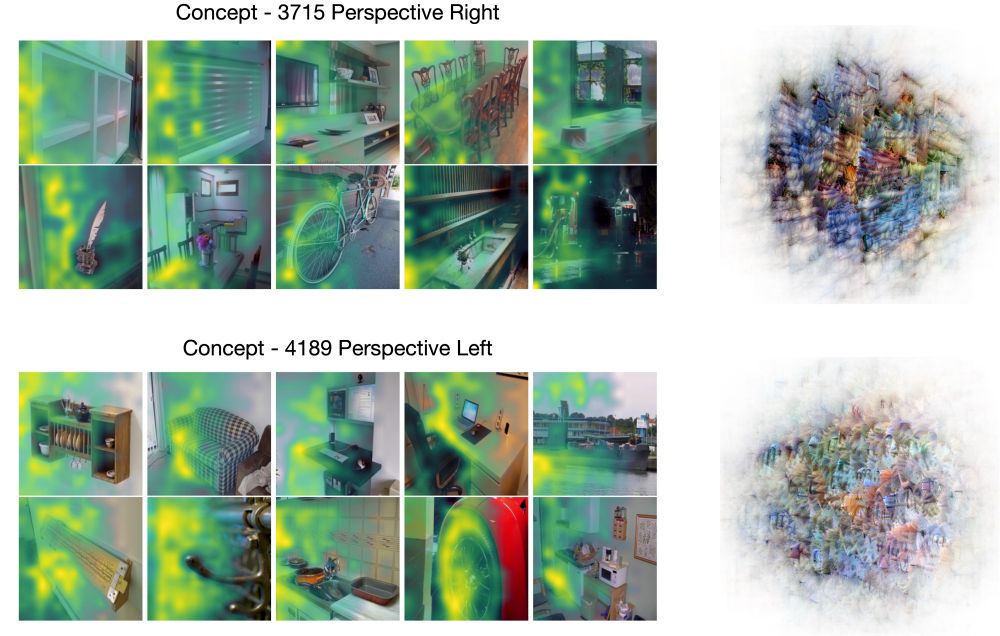

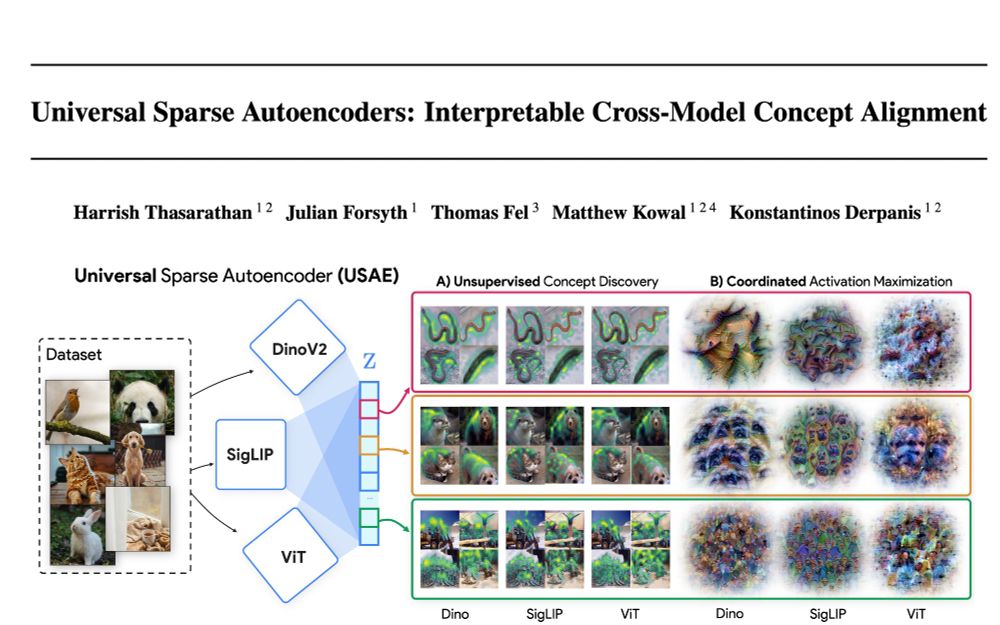

This opens new paths for understanding model differences!

(7/9)

(6/9)

(5/9)

(4/9)

(2/9)

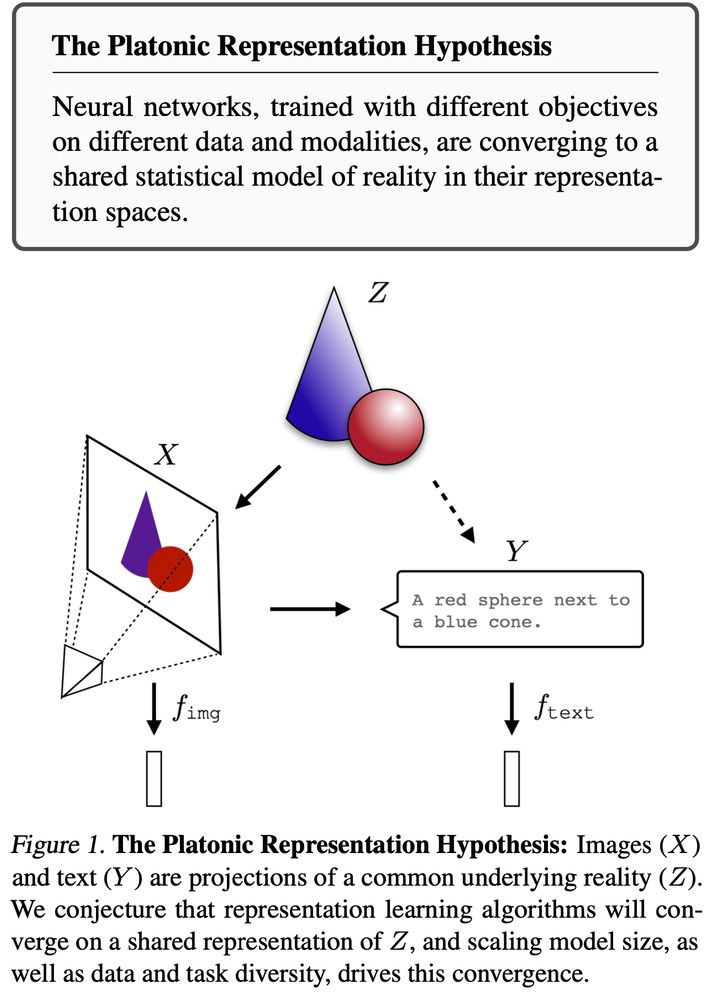

arxiv.org/abs/2502.03714

(1/9)
