computational cognitive neuroscience 🧠

postdoc princeton neuro 🍕

he/him 🇨🇦 harrisonritz.github.io

So polysemanticity might not be a property of architectures or learning rules, but of tasks and training data

www.science.org/doi/10.1126/...

IIUC a network trained on face and object classification tasks will develop specialized units for both.

Deadlines in decision making often truncate too-slow responses. Failing to account for these omissions can (severely) bias your DDM parameter estimates.

They offer a great solution to correct for this issue.

doi.org/10.31234/osf...
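A minimal sketch of why omissions matter, assuming a simple symmetric two-bound diffusion simulated with Euler steps. The function name `simulate_ddm`, the parameter values, and the 1 s deadline are illustrative assumptions, and this is not the correction method from the linked preprint. It only shows that dropping too-slow responses shifts the observed RT distribution that a naive fit would see.

```python
import numpy as np

def simulate_ddm(n_trials, drift, bound, dt=0.005, noise=1.0, max_t=10.0, seed=0):
    """Simulate first-passage times of a diffusion starting at 0 with bounds at +/- bound."""
    rng = np.random.default_rng(seed)
    n_steps = int(max_t / dt)
    rts = np.full(n_trials, np.nan)          # NaN marks trials that never hit a bound
    x = np.zeros(n_trials)                   # accumulated evidence per trial
    active = np.ones(n_trials, dtype=bool)   # trials still accumulating
    for step in range(1, n_steps + 1):
        n_active = int(active.sum())
        if n_active == 0:
            break
        # Euler-Maruyama update for the trials that have not yet responded
        x[active] += drift * dt + noise * np.sqrt(dt) * rng.standard_normal(n_active)
        hit = active & (np.abs(x) >= bound)
        rts[hit] = step * dt
        active &= ~hit
    return rts

# Hypothetical parameters, chosen only for illustration
rts = simulate_ddm(n_trials=2000, drift=1.0, bound=1.0)
rts = rts[~np.isnan(rts)]

deadline = 1.0                               # assumed response deadline in seconds
observed = rts[rts <= deadline]              # responses slower than the deadline are omitted
omission_rate = 1 - observed.size / rts.size

print(f"mean RT without deadline: {rts.mean():.3f} s")
print(f"mean RT of observed responses: {observed.mean():.3f} s")
print(f"omission rate: {omission_rate:.2%}")
```

Fitting a DDM to `observed` as if it were the full distribution treats the truncated right tail as real, which is the kind of bias the post describes.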

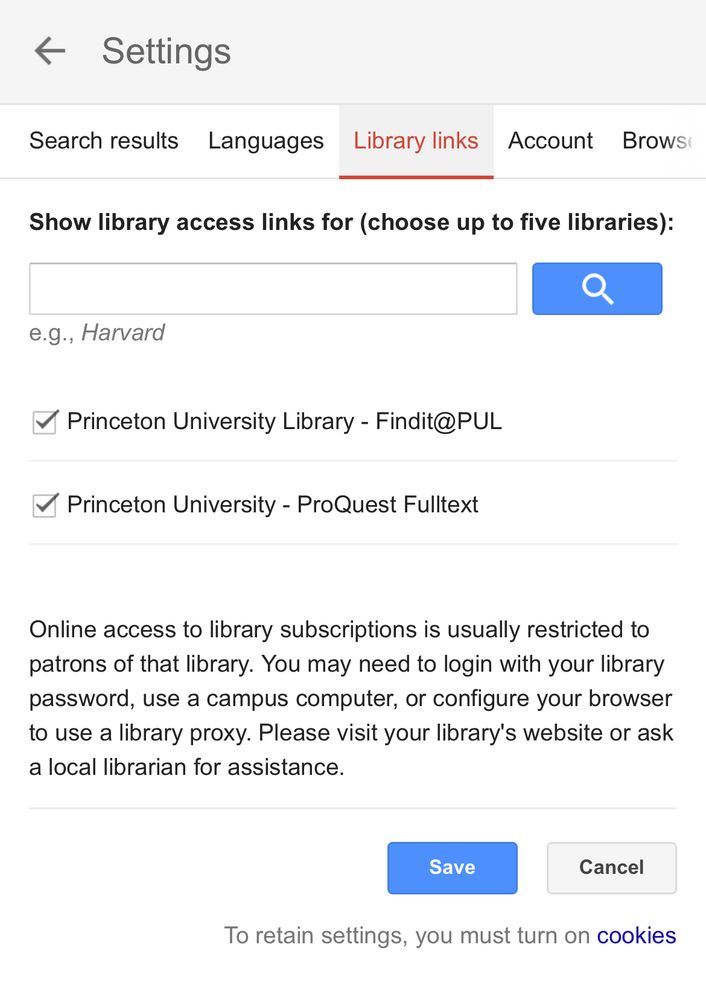

(1) disable iCloud relay

(2) enable one-off IP peeking

I definitely prefer (2) — quick once you’ve done it a few times. Annoying how Google tries to push us towards surveillance.

To be clear, explained variance was not our goal; confirmed good fit before interpreting params

Switch-dep control energy (‘gram contrast’) didn’t depend strongly on A.

We found that switch-trained RNNs had greater energy on switch trials, similar to what we found in EEG.

This shows that control occurs both before and after the task cue.

During the ITI, RNNs move into a neutral state, like a tennis player recovering to the center of the court. Short ITIs don’t give enough time.

In both switch-trained RNNs and EEG, the initial conditions (end of ITI) were near the midpoint between the task states.

This was also the case with EEG. Critically, just changing the ITI for RNNs reproduced the differences between these EEG datasets.

This just fell out of the modeling!

Like RNNs, these datasets had very different ITIs (900ms vs 2600ms).

High-d SSMs fit great here too, better than AR models or even EEG-trained RNNs.

*So what can SSMs tell us about RNNs’ apparent task-switching signatures?*

(1) RNNs have similar dynamics on switch and repeat trials

(2) RNNs converge to the center of the task space between trials

(3) RNNs have stronger dynamics when switching tasks

High-d SSMs (latent dim > obs dim) have great performance, and are interpretable through tools from dynamical systems and control theory.

To capture interference from previous trials (‘inertia’ theory), we varied the ITI between trials.

fwiw, we found that reliability-based stats did even better than encoding models under high noise

www.nature.com/articles/s41...

When you have more noise in your data (neuroimaging) than you do in your labels (face, house), encoding is better than decoding.

Not to mention that encoding models make it easier to control for covariates.
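A toy illustration of one standard reason for this, assuming the argument is about regression dilution (noise in the predictor attenuates the estimated slope). The binary labels, effect size, and noise level below are hypothetical, and the helper `ols_slope` is introduced only for this sketch.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000
true_beta = 1.0                                      # assumed true effect of the label on the signal

# Noise-free labels (e.g. face = 1, house = 0) and a noisy measurement driven by them
labels = rng.integers(0, 2, size=n).astype(float)
data = true_beta * labels + 3.0 * rng.standard_normal(n)

def ols_slope(x, y):
    """Ordinary least squares slope of y regressed on x (with an intercept)."""
    x_c = x - x.mean()
    y_c = y - y.mean()
    return (x_c @ y_c) / (x_c @ x_c)

# Encoding: predict the noisy data from the clean labels; noise sits in the outcome
encoding_slope = ols_slope(labels, data)

# Decoding: predict the clean labels from the noisy data; noise sits in the predictor
decoding_slope = ols_slope(data, labels)

print(f"encoding slope: {encoding_slope:.3f} (true effect {true_beta})")
print(f"decoding slope: {decoding_slope:.3f}")
```

In the encoding direction the noise only widens the error bars around the true effect, whereas in the decoding direction the same noise shrinks the slope toward zero.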
