We show how to exactly map recurrent spiking networks into recurrent rate networks, with the same number of neurons. No temporal or spatial averaging needed!

Presented at Gatsby Neural Dynamics Workshop, London.

For neuro fans: connectivity structure can be invisible in single neurons but shape population activity

For low-rank RNN fans: a theory of rank=O(N)

For physics fans: fluctuations around the DMFT saddle point ⇒ dimension of activity

TUESDAY 16:30 – 18:00

P1 62 “Measuring and controlling solution degeneracy across task-trained recurrent neural networks” by @flavioh.bsky.social

We study how humans explore a 61-state environment with a stochastic region that mimics a “noisy-TV.”

Results: Participants keep exploring the stochastic part even when it’s unhelpful, and novelty-seeking best explains this behavior.

#cogsci #neuroskyence

Feeling very grateful that reviewers and chairs appreciated concise mathematical explanations, in this age of big models.

www.biorxiv.org/content/10.1...

1/2

Our study proposes a bio-plausible meta-plasticity rule that shapes synapses over time, enabling selective recall based on context

Huge thanks to our two amazing reviewers who pushed us to make the paper much stronger. A truly joyful collaboration with @lucasgruaz.bsky.social, @sobeckerneuro.bsky.social, and Johanni Brea! 🥰

Tweeprint on an earlier version: bsky.app/profile/modi... 🧠🧪👩🔬

Come by our poster in the afternoon (4th floor, Poster 72) to talk about the sense of control, empowerment, and agency. 🧠🤖

We propose a unifying formulation of the sense of control and use it to empirically characterize the human subjective sense of control.

🧑🔬🧪🔬

We study curiosity using intrinsically motivated RL agents and develop an algorithm to generate diverse, targeted environments for comparing curiosity drives.

Preprint (accepted but not yet published): osf.io/preprints/ps...

www.biorxiv.org/content/10.1...

How to analyse a *high-dimensional* dynamical system? Find its fixed points, study their properties!

We do that for a chaotic neural network! Finally published: doi.org/10.1103/Phys...
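
A minimal sketch of that recipe, assuming the classic random-network model dx/dt = -x + J tanh(x) with Gaussian couplings of gain g > 1 (an assumption, not necessarily the exact model of the paper): damped Newton iterations locate fixed points from random starting states, and the eigenvalues of the Jacobian at each one tell you whether it is stable. All function names here are hypothetical.

```python
import numpy as np

def make_network(n, g, seed=0):
    # Hypothetical setup: i.i.d. couplings J_ij ~ N(0, g^2 / n); g > 1 gives chaotic dynamics.
    rng = np.random.default_rng(seed)
    return rng.normal(0.0, g / np.sqrt(n), size=(n, n))

def velocity(x, J):
    # Right-hand side of dx/dt = -x + J tanh(x).
    return -x + J @ np.tanh(x)

def jacobian(x, J):
    # d(velocity)/dx = -I + J diag(1 - tanh(x)^2); broadcasting scales column j by phi'(x_j).
    return -np.eye(len(x)) + J * (1.0 - np.tanh(x) ** 2)

def find_fixed_point(x0, J, tol=1e-10, max_iter=100):
    # Damped Newton iteration on velocity(x) = 0, started from x0.
    x = np.array(x0, dtype=float)
    for _ in range(max_iter):
        f = velocity(x, J)
        res = np.linalg.norm(f)
        if res < tol:
            return x, True
        step = np.linalg.solve(jacobian(x, J), -f)
        t = 1.0
        # Backtrack: halve the step until the residual decreases.
        while t > 1e-6 and np.linalg.norm(velocity(x + t * step, J)) >= res:
            t *= 0.5
        x = x + t * step
    return x, bool(np.linalg.norm(velocity(x, J)) < tol)

def max_real_eigenvalue(x, J):
    # Positive => the fixed point is linearly unstable.
    return float(np.max(np.linalg.eigvals(jacobian(x, J)).real))

if __name__ == "__main__":
    n = 200
    J = make_network(n, g=2.0)
    origin, _ = find_fixed_point(np.zeros(n), J)
    print("origin, max Re(lambda):", max_real_eigenvalue(origin, J))
    rng = np.random.default_rng(1)
    for trial in range(5):
        x_star, ok = find_fixed_point(rng.normal(0.0, 1.0, n), J)
        if ok:
            print(trial, "norm:", np.linalg.norm(x_star),
                  "max Re(lambda):", max_real_eigenvalue(x_star, J))
```

The origin is always a fixed point of this model and is unstable for g > 1; Newton runs from random states may or may not converge to nontrivial fixed points, which is why the sketch reports a convergence flag instead of assuming success.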

theoreticalneuroscience.no/thn22

John Hopfield received the 2024 Physics Nobel prize for his model published in 1982. What is the model all about? @icepfl.bsky.social

Super engaging text by science communicator Nik Papageorgiou.

actu.epfl.ch/news/brain-m...

Definitely more accessible than the original physics-style, 4.5-page letter 🤓

journals.aps.org/prl/abstract...
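
For the curious, a minimal sketch of the 1982 idea, assuming the textbook formulation (±1 neurons, Hebbian weights, asynchronous sign updates) rather than a line-by-line reproduction of the letter: store a few random patterns, corrupt one, and let the network dynamics clean it up. Function names are illustrative.

```python
import numpy as np

def store(patterns):
    # Hebbian rule: W = (1/N) * sum_mu xi^mu (xi^mu)^T, with no self-connections.
    n = patterns.shape[1]
    W = patterns.T @ patterns / n
    np.fill_diagonal(W, 0.0)
    return W

def recall(W, state, n_sweeps=10, seed=0):
    # Asynchronous dynamics: update one neuron at a time, s_i <- sign(sum_j W_ij s_j).
    rng = np.random.default_rng(seed)
    s = np.array(state, dtype=int)
    for _ in range(n_sweeps):
        for i in rng.permutation(len(s)):
            s[i] = 1 if W[i] @ s >= 0 else -1
    return s

def overlap(s, xi):
    # m = (1/N) s . xi; m = 1 means perfect recall.
    return float(s @ xi) / len(xi)

if __name__ == "__main__":
    rng = np.random.default_rng(42)
    n, p = 500, 10  # load p/n = 0.02, well below the ~0.14 capacity
    patterns = rng.choice([-1, 1], size=(p, n))
    W = store(patterns)
    cue = patterns[0].copy()
    flipped = rng.choice(n, size=n // 5, replace=False)  # corrupt 20% of the bits
    cue[flipped] *= -1
    print("overlap before:", overlap(cue, patterns[0]))
    print("overlap after: ", overlap(recall(W, cue), patterns[0]))
```

The stored memories act as attractors: starting from a corrupted cue, the asynchronous updates move the state back toward the nearest stored pattern, as long as the number of patterns stays small relative to the number of neurons.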

actu.epfl.ch/news/learnin...

P.S.: There's even a French version of the article! It feels so fancy! 😎 👨🎨 🇫🇷

actu.epfl.ch/news/apprend...

The concentration of measure phenomenon can explain the emergence of rate-based dynamics in networks of spiking neurons, even when no two neurons are the same.

This is what's shown in the last paper of my PhD, out today in Physical Review Letters 🎉 tinyurl.com/4rprwrw5
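
A toy illustration of concentration of measure in general, not the spiking model of the paper (the rectified-linear units, log-normal gains, and Gaussian thresholds are all assumptions): even when every neuron is different, the population-averaged output barely changes from one randomly drawn network to the next, and its spread shrinks roughly like 1/√n.

```python
import numpy as np

def population_rate(h, n, rng):
    # Mean output of n heterogeneous rectified-linear units sharing the input h.
    # Hypothetical heterogeneity: log-normal gains and Gaussian thresholds.
    gains = rng.lognormal(mean=0.0, sigma=0.5, size=n)
    thresholds = rng.normal(0.0, 1.0, size=n)
    return float(np.mean(gains * np.maximum(0.0, h - thresholds)))

def spread_across_networks(n, h=0.5, n_networks=200, seed=0):
    # Standard deviation of the population rate over independently drawn networks.
    rng = np.random.default_rng(seed)
    return float(np.std([population_rate(h, n, rng) for _ in range(n_networks)]))

if __name__ == "__main__":
    for n in (100, 1_000, 10_000):
        s = spread_across_networks(n)
        print(f"n={n:>6}  spread={s:.4f}  spread*sqrt(n)={s * np.sqrt(n):.3f}")
```

If concentration holds, the last column should stay roughly constant as n grows: individual neurons stay heterogeneous, but the population quantity becomes effectively deterministic.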

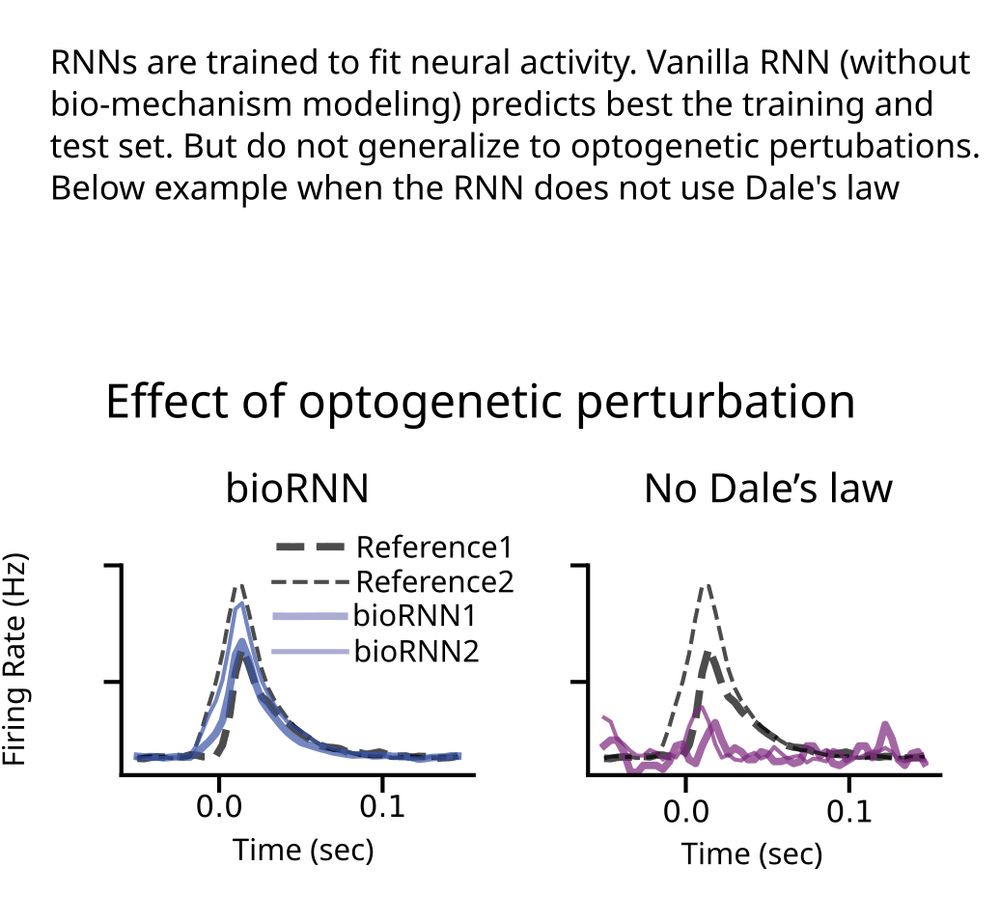

Is mechanism modeling dead in the AI era?

ML models trained to predict neural activity fail to generalize to unseen optogenetic perturbations. But mechanism modeling can solve that.

We argue that "perturbation testing" is the right way to evaluate mechanisms in data-constrained models

1/8
