Working on Self-supervised Cross-modal Geospatial Learning.

Personal WebPage: https://gastruc.github.io/

Check it out:

📄 Paper: arxiv.org/abs/2412.14123

🌐 Project: gastruc.github.io/anysat

📜 Paper: arxiv.org/abs/2412.14123

🌐 Project: gastruc.github.io/anysat

🤗 HuggingFace: huggingface.co/g-astruc/Any...

🐙 GitHub: github.com/gastruc/AnySat

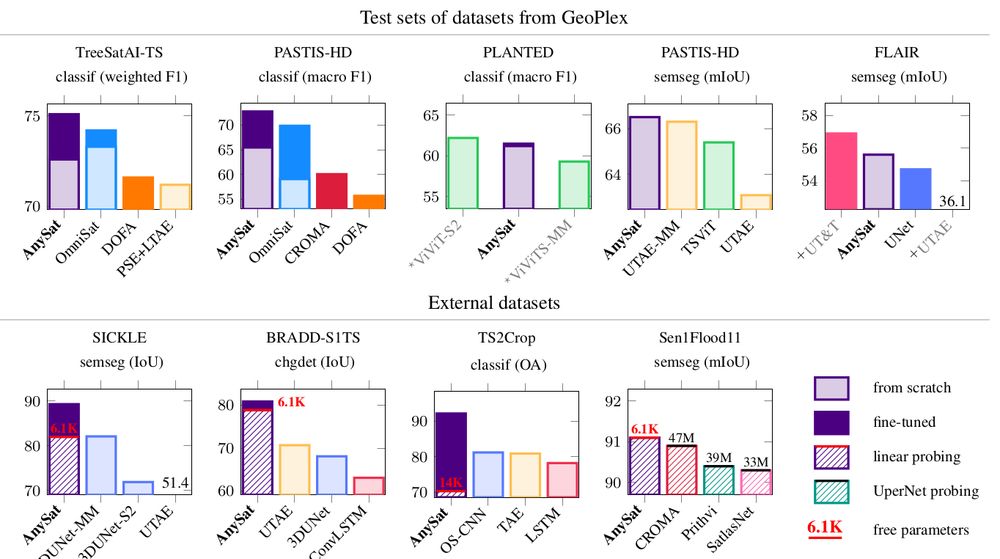

That means you can fine-tune just a few thousand parameters and achieve SOTA results on challenging tasks—all with minimal effort.
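To give a rough sense of what "a few thousand parameters" can look like, here is a minimal sketch that counts the trainable parameters of a single linear classification head placed on top of frozen features. The feature width (768) and class count (10) are hypothetical values chosen for illustration, not figures from the AnySat paper, and the heads actually used there may differ.

```python
def linear_probe_param_count(feature_dim: int, num_classes: int) -> int:
    """Trainable parameters of one linear layer: weight matrix plus bias vector."""
    return feature_dim * num_classes + num_classes


# Hypothetical sizes for illustration only (not taken from the AnySat paper).
feature_dim = 768   # assumed width of the frozen backbone's output features
num_classes = 10    # assumed number of target classes

print(linear_probe_param_count(feature_dim, num_classes))  # 7690
```

With the backbone frozen, only these weights and biases would be updated during fine-tuning.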

🌱 Land cover mapping

🌾 Crop type segmentation

🌳 Tree species classification

🌊 Flood detection

🌍 Change detection

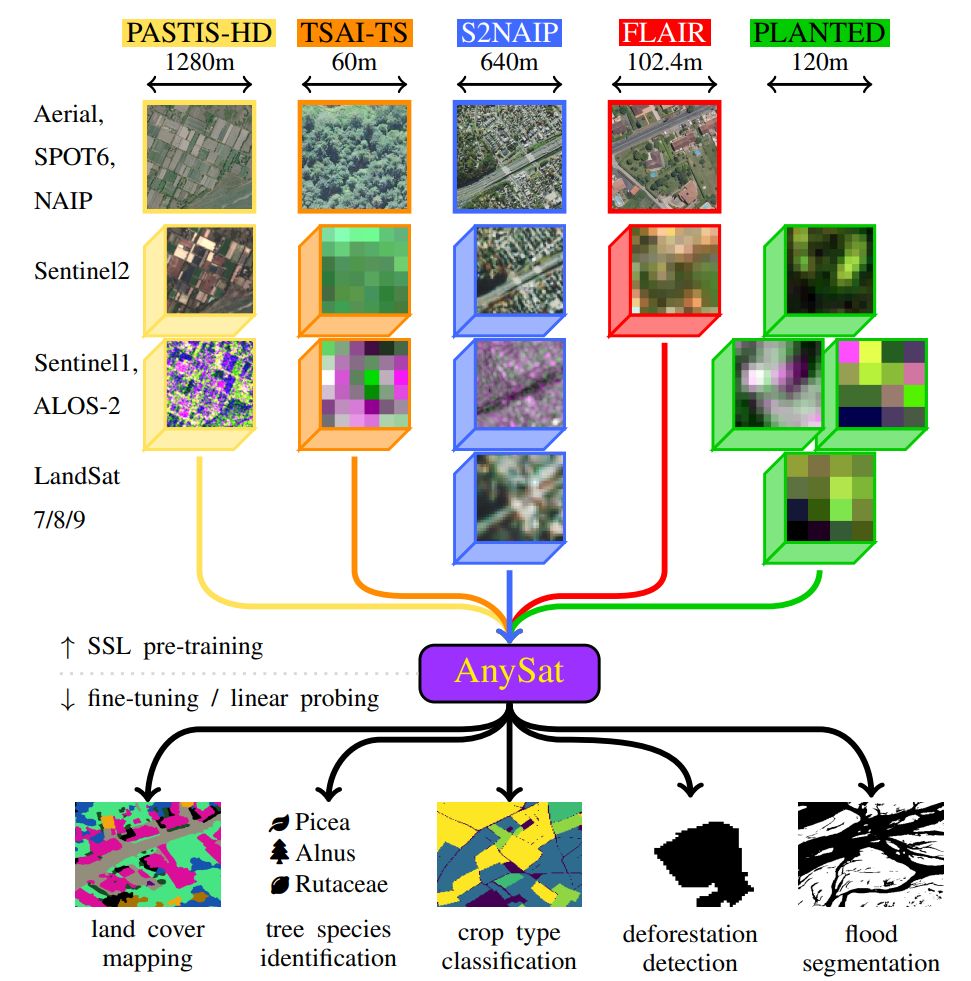

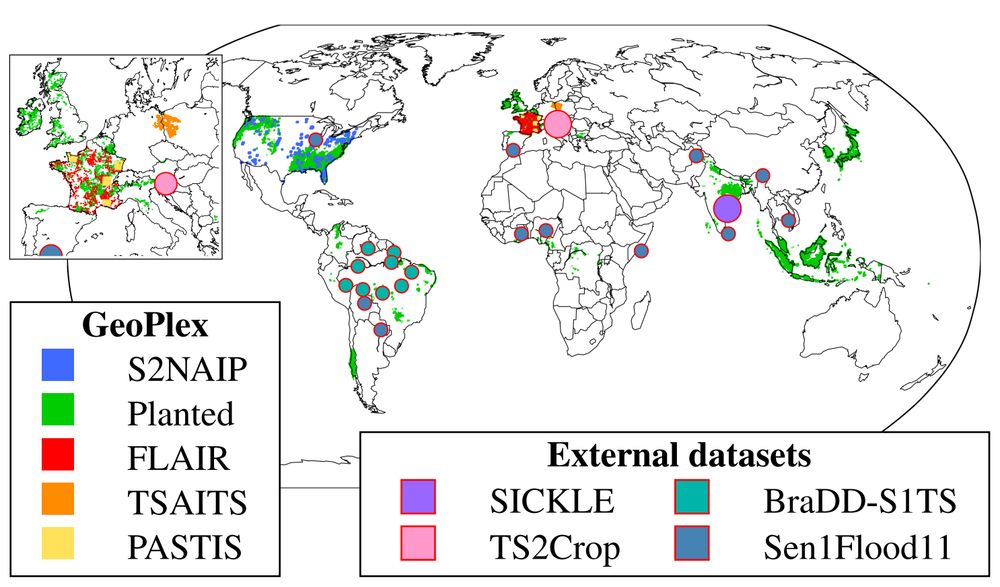

📡 11 distinct sensors

📏 Resolutions: 0.2m–500m

🔁 Revisit: single date to weekly

🏞️ Scales: 0.3–150 hectares

The pretrained model can adapt to truly diverse data, and probably yours too!

🧠 75% of its parameters are shared across all inputs, enabling unmatched flexibility.

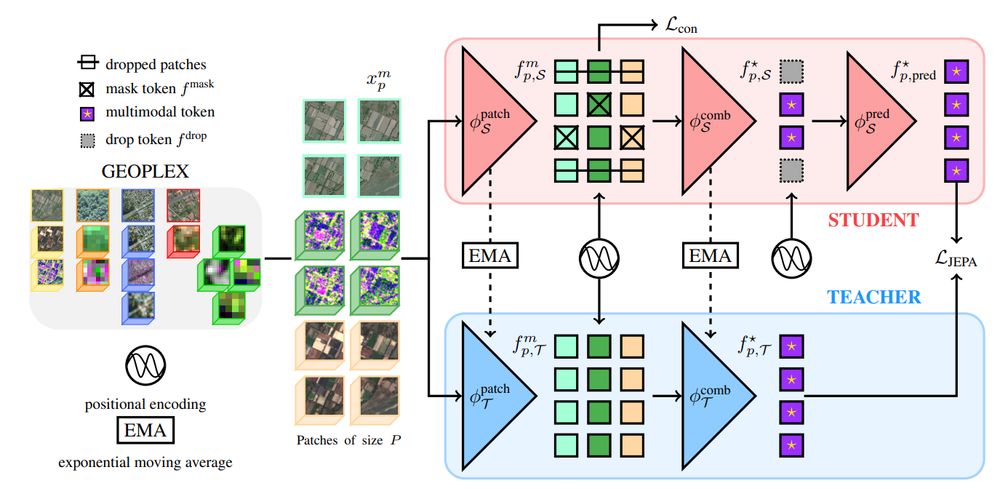

Introducing AnySat: one model for any resolution (0.2m–250m), scale (0.3–2600 hectares), and modalities (choose from 11 sensors & time series)!

Try it with just a few lines of code:
