https://fabian-sp.github.io/

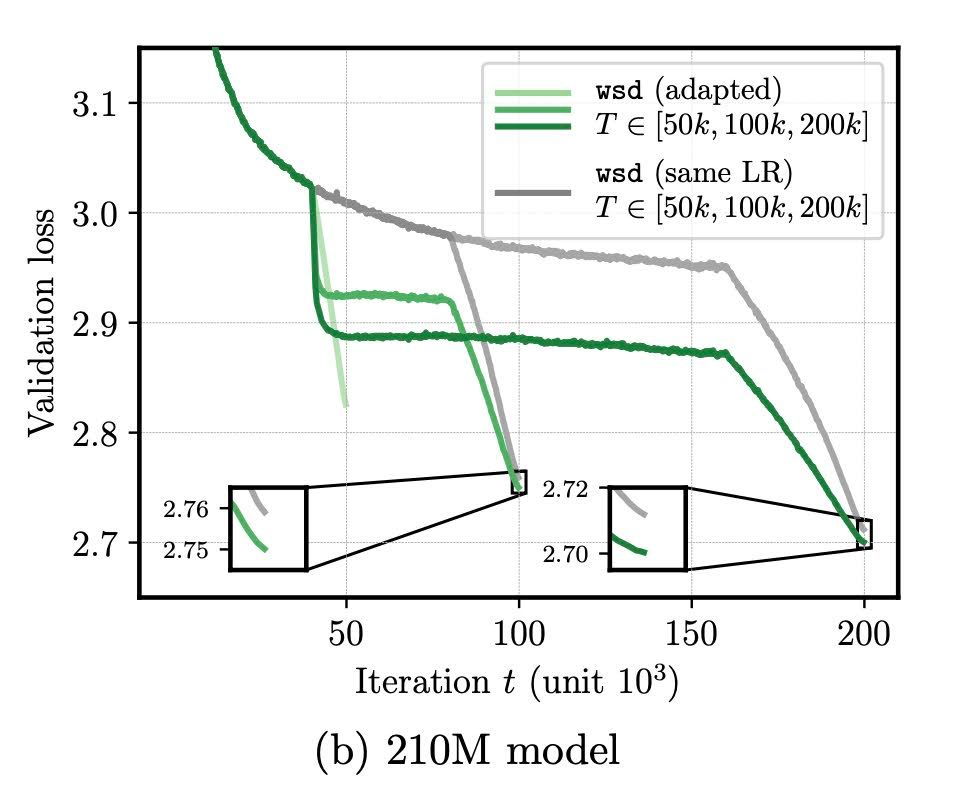

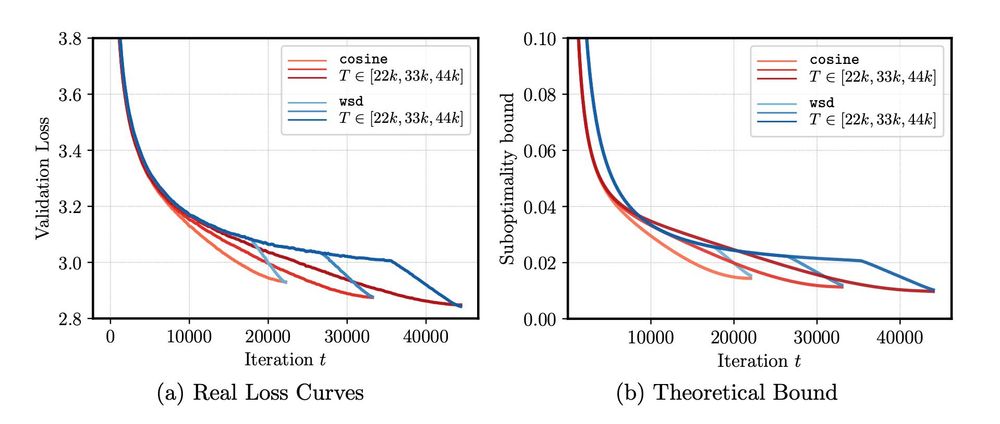

Using the theoretically optimal schedule (which can be computed for free), we obtain a noticeable improvement when training 124M and 210M models.

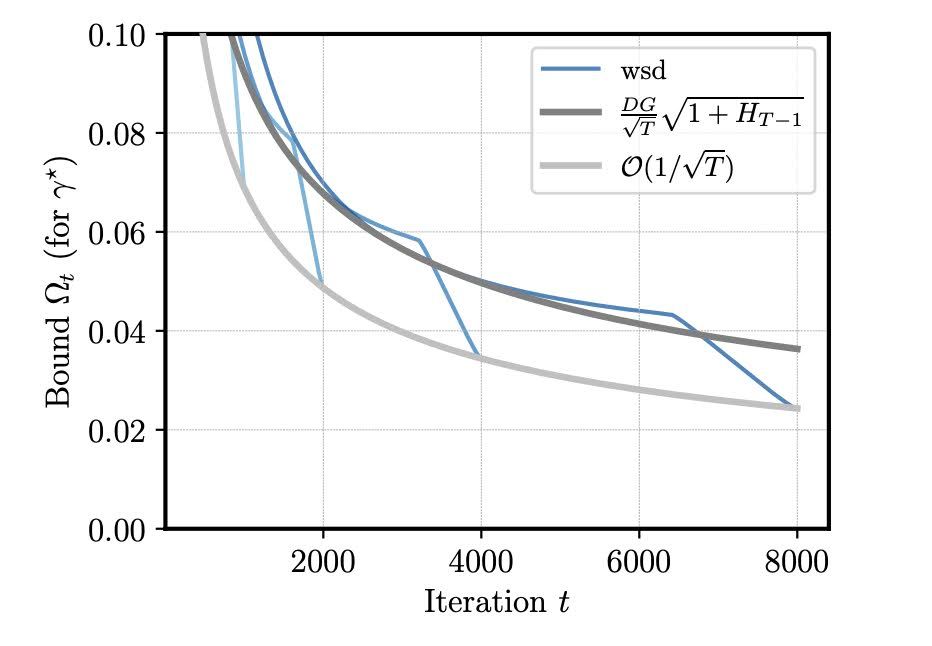

The second part suggests that the sudden drop in loss during cooldown happens when gradient norms do not go to zero.

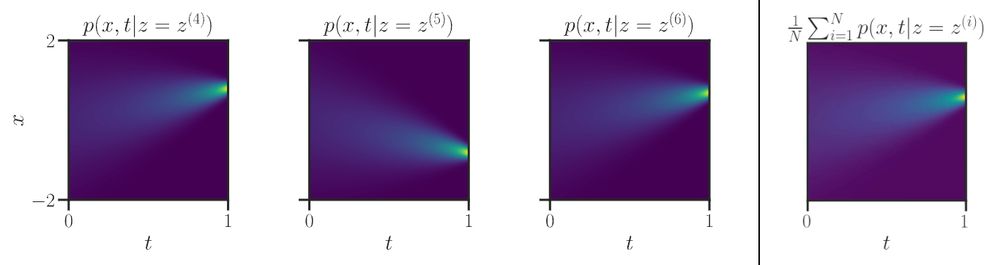

Figure 9 looks like a lighthouse guiding the way (towards the data distribution)
