That is typical of agentic coding tools. It’s on the Pareto frontier there.

Better than GPT-OSS 20B, cheaper and faster than Devstral Small 2.

Fits on a MacBook and performs phenomenally on agentic & coding benchmarks

huggingface.co/zai-org/GLM-...
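For reference, a model is on the cost vs. quality Pareto frontier when no other model is at least as cheap and at least as good while being strictly better on one of the two axes. Below is a minimal Python sketch of that dominance check. The model names and numbers are hypothetical, chosen only to illustrate the idea; they are not benchmark results.

```python
from typing import NamedTuple

class Model(NamedTuple):
    name: str
    cost: float     # lower is better (hypothetical unit, e.g. USD per eval run)
    quality: float  # higher is better (hypothetical benchmark score)

def pareto_frontier(models: list[Model]) -> list[Model]:
    """Return the models that no other model dominates, sorted by cost.

    A model is dominated if another model is at least as cheap and at least
    as good, and strictly better on at least one of the two axes.
    """
    frontier = []
    for m in models:
        dominated = any(
            o.cost <= m.cost and o.quality >= m.quality
            and (o.cost < m.cost or o.quality > m.quality)
            for o in models
        )
        if not dominated:
            frontier.append(m)
    return sorted(frontier, key=lambda m: m.cost)

# Hypothetical, purely illustrative numbers.
models = [
    Model("model_a", cost=1.0, quality=50.0),
    Model("model_b", cost=2.0, quality=65.0),
    Model("model_c", cost=3.0, quality=60.0),  # dominated by model_b
    Model("model_d", cost=5.0, quality=70.0),
]
print([m.name for m in pareto_frontier(models)])
# → ['model_a', 'model_b', 'model_d']
```

Being "on the frontier" says nothing about being the best overall: `model_a` stays on it only because nothing is cheaper.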

We found that if you simply delete them after pretraining and recalibrate for <1% of the original budget, you unlock massive context windows. Smarter, not harder.

We found that positional embeddings like RoPE aid training but bottleneck long-sequence generalization. Our solution is simple: treat them as a temporary training scaffold, not a permanent necessity.

arxiv.org/abs/2512.12167

pub.sakana.ai/DroPE
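To make "deleting" positional embeddings concrete, here is a minimal Python sketch of rotary position embedding (RoPE) applied to one query/key pair, with a hypothetical `use_rope` switch that turns it off. This is not the DroPE implementation: the recalibration step is not shown, and `apply_rope`, `attention_score`, and `use_rope` are illustrative names. It assumes the common RoPE convention of rotating consecutive dimension pairs by `pos * base ** (-2i/d)` with `base = 10000`.

```python
import math

def apply_rope(x: list[float], pos: int, base: float = 10000.0) -> list[float]:
    """Rotate each pair (x[2i], x[2i+1]) by the angle pos * base**(-2i/d).

    Assumes the common RoPE convention and an even vector dimension d.
    """
    d = len(x)
    if d % 2 != 0:
        raise ValueError("RoPE needs an even dimension")
    out = [0.0] * d
    for i in range(d // 2):
        angle = pos * base ** (-2 * i / d)
        c, s = math.cos(angle), math.sin(angle)
        x0, x1 = x[2 * i], x[2 * i + 1]
        out[2 * i] = x0 * c - x1 * s
        out[2 * i + 1] = x0 * s + x1 * c
    return out

def attention_score(q: list[float], k: list[float], q_pos: int, k_pos: int,
                    use_rope: bool = True) -> float:
    """Unscaled dot-product logit between one query and one key.

    use_rope is a hypothetical switch: False mimics a model whose
    positional embeddings have been removed.
    """
    if use_rope:
        q, k = apply_rope(q, q_pos), apply_rope(k, k_pos)
    return sum(a * b for a, b in zip(q, k))

q = [1.0, 0.5, -0.3, 0.8]
k = [0.2, -1.0, 0.7, 0.4]

# With RoPE, only the offset q_pos - k_pos (2 in both calls) matters.
print(math.isclose(attention_score(q, k, 3, 1), attention_score(q, k, 10, 8)))  # True
# Without RoPE, the positions do not enter the score at all.
print(attention_score(q, k, 3, 1, use_rope=False)
      == attention_score(q, k, 100, 0, use_rope=False))  # True
```

With RoPE on, the score encodes the relative distance between tokens through fixed rotation frequencies; with it off, the positions drop out of the dot product entirely.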

A bit overshadowed by DeepSeek, whose DeepSeek Sparse Attention (DSA) mechanism achieves large cost reductions.

Initial benchmarks from the announcement imply a drop below Gemini 3 Flash in agentic coding. In fact, the performance seems close to DeepSeek V3.2 at 50x the price.

At its highest setting, it beats the recently released GPT-5.2 on both cost and quality.

With Gemini 3 Flash ⚡️, we are seeing reasoning capabilities previously reserved for our largest models. This opens up entirely new categories of near real-time applications that require complex thought.

① what an agentic coding model can do, with no reasoning!

As expected, there’s a quality gap compared to reasoning models. On the positive side, it is cheaper, potentially even cheaper in practice than this chart indicates.

The original one topped open models of that size. For the first time, a local model felt usable on consumer hardware.

Not only is the latest Ministral 8B on the Pareto frontier for knowledge vs. cost (and for search, math, agentic uses)…

New model, new benchmarks!

The biggest jump for DeepSeek V3.2 is on agentic coding, where it seems poised to push many models off the Pareto frontier, including Sonnet 4.5, MiniMax M2, and K2 Thinking.

Its intrinsic knowledge is unmatched, surpassing 2.5 and GPT-5.1.

bsky.app/profile/espa...

Why?

Company C1 releases model M1 and discloses benchmarks B1.

Company C2 releases M2, showing off a distinct set of benchmarks, B2.

Comparing those models is hard since they don't share benchmarks!
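The comparison problem can be made concrete by intersecting what each company reports: two models are directly comparable only on benchmarks both of them disclose. A minimal Python sketch follows; `M3` and all benchmark names are hypothetical placeholders.

```python
from itertools import combinations

# Hypothetical disclosures: each release reports its own benchmark set.
reported = {
    "M1": {"bench_x", "bench_y"},
    "M2": {"bench_z", "bench_w"},
    "M3": {"bench_y", "bench_z"},
}

def comparable_pairs(reported: dict[str, set[str]]) -> dict[tuple[str, str], set[str]]:
    """Map each pair of models to the benchmarks both of them report."""
    return {
        (a, b): reported[a] & reported[b]
        for a, b in combinations(sorted(reported), 2)
    }

for pair, shared in comparable_pairs(reported).items():
    print(pair, sorted(shared) or "no shared benchmark")
```

M1 and M2 share nothing, so any head-to-head claim between them needs a third party to run both models on a common benchmark.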

gemini-embedding-exp-03-07 is the only embedding model on the market that I can’t benchmark because of it.

The quota in the Console says I'm at 0.33% usage…

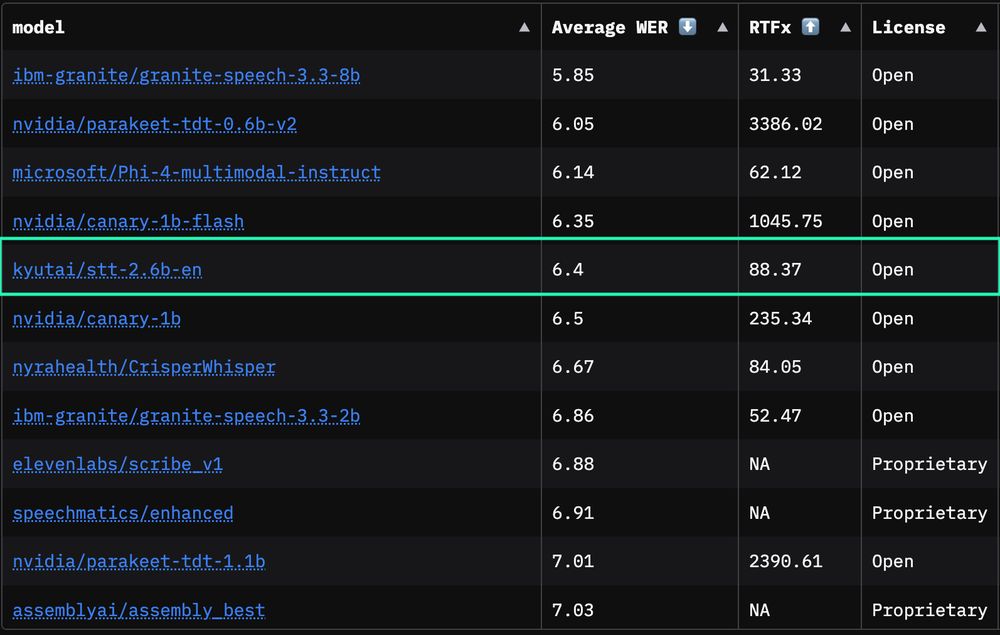

While all other models need the complete audio, ours delivers top-tier accuracy on streaming content.

Open, fast, and ready for production!

I find more interesting, high-signal things by querying for what I like than by going linearly through a feed that learnt from my navigation.

Generally, giving users the ability to send reliable signals beats extracting signals from their background noise.

The @lmarena.bsky.social has become the go-to evaluation for AI progress.

Our release today demonstrates the difficulty in maintaining fair evaluations on the Arena, despite best intentions.

people.csail.mit.edu/rrw/time-vs-...

It's still hard for me to believe it myself, but I seem to have shown that TIME[t] is contained in SPACE[sqrt{t log t}].

To appear in STOC. Comments are very welcome!

With Mr Musk in government, doesn’t that make every X suspension or shadow ban censorship?

Is there a shred of reason behind Ekrem İmamoğlu's jailing?

apnews.com/article/turk...

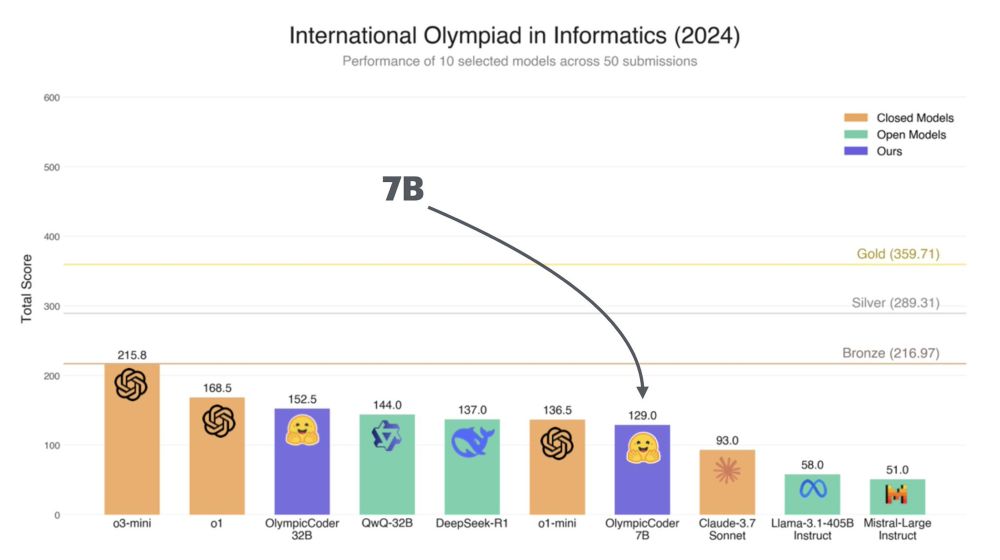

And even we were mind-blown by the results we got with this latest model we're releasing: ⚡️OlympicCoder

[1/3]
