Neoliberalism what!? Donald who!? Modal Logic? Incompleteness Theorems? Large Language Models? Hitler? Oh yes, he's a fringe politician in Munich.

Come on, let's get you up. We have a lot of work to do unifying science and eliminating metaphysics.

Take this sunrise over water. It's an amazing scene, but sadly Monet never had time to finish the painting. As a result the lines and colors are so drab and blurry.

With AI we can finally bring his dream to life!

link.springer.com/article/10.1...

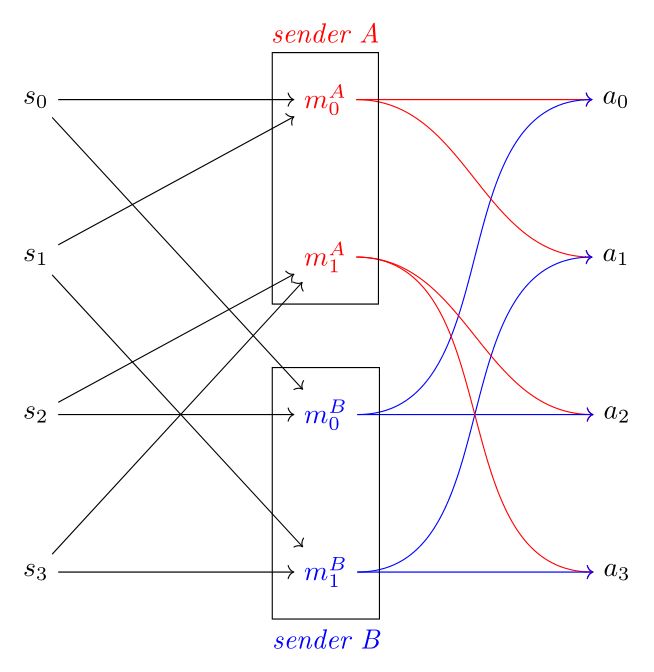

I tackle an ongoing problem with the learning of compositional communication in conventional signaling games.

I build two new models to show that structured receivers can learn and retain compositional information.

philpapers.org/rec/FRECUI-2

www.nytimes.com/interactive/...

I'm equally impressed and horrified by the result. In a sense, we might consider this to be ChatGPT's self-portrait.

One fun test I have been trying with all the models: can they generate Sudano-Sahelian architecture? For some reason, older models struggled with this.

Comparison with DALL-E via Bing:

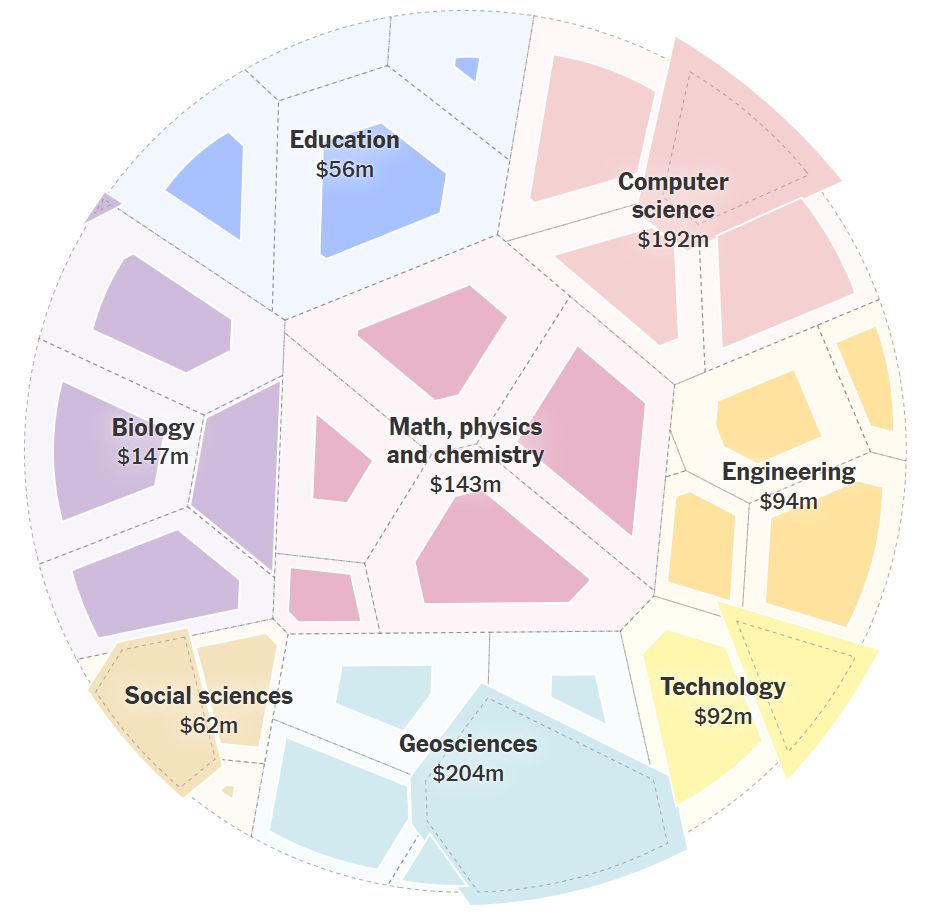

This paper analyzes industrial distraction, a common technique where industry actors fund and share research that is accurate, often high-quality, but nonetheless misleading on important matters of fact.

www.cambridge.org/core/journal...

Observe: the chain of thought doesn't seem to "see" the previous Tiananmen Square question.

Improved effectiveness if you ritually sacrifice an expired Microsoft 365 license at the same time.
