Details, examples, and more issues in the paper! (7/7)

arxiv.org/abs/2506.00723

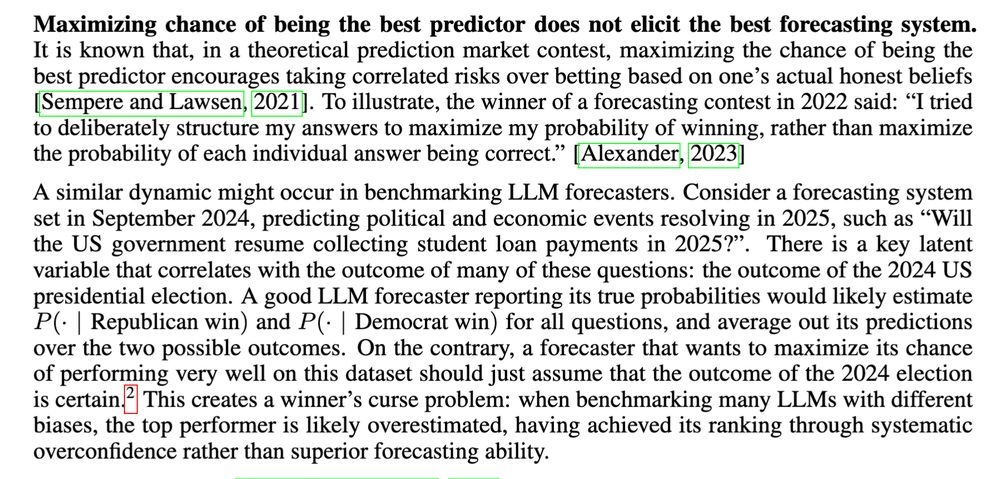

"Bet everything" on one scenario beats careful probability estimation for maximizing the chance of ranking #1 on the leaderboard. (6/7)

We find that backtesting in existing papers often has similar logical issues that leak information about answers. (3/7)

Instead, researchers use "backtesting": questions where we can evaluate predictions now, but the model has no information about the outcome ... or so we think (2/7)

gist.github.com/dpaleka/7b4...
