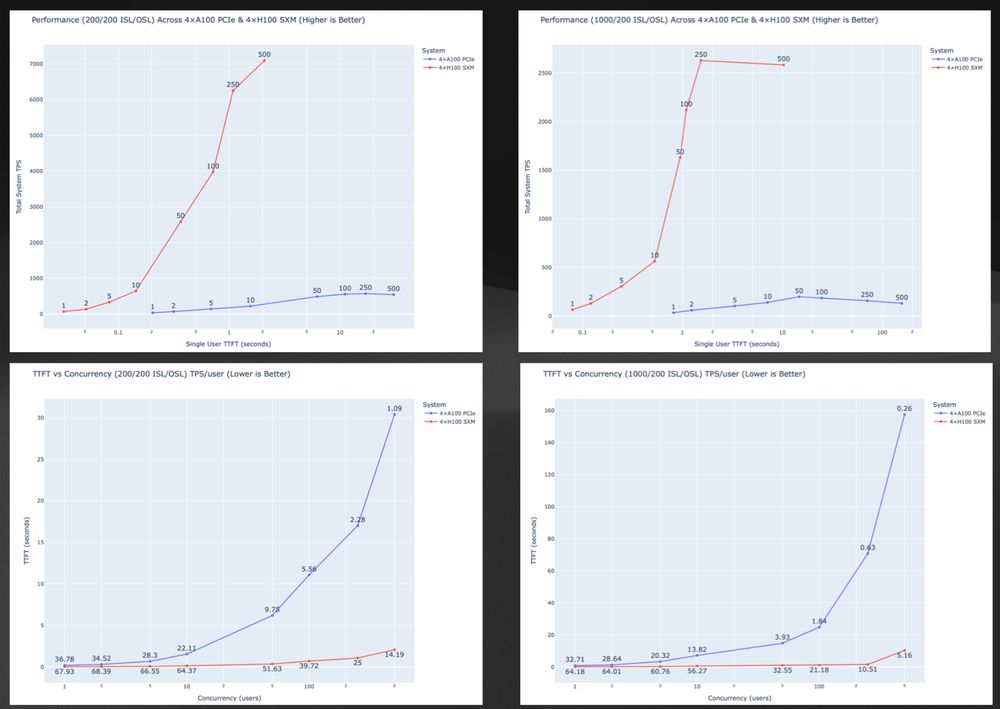

H100 (SXM5) delivered up to 14× the throughput of A100 (PCIe), with far lower latency.

Full benchmarks + thoughts:

dlewis.io/evaluating-l...