https://papail.io

In "A Mathematical Theory of Communication", almost as an afterthought, Shannon suggests using N-grams to generate English, and notes that word-level tokenization works better than character-level tokenization.
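
Here is a minimal sketch of N-gram generation in that spirit. It assumes a toy corpus made up for illustration. Each context of n-1 tokens maps to the tokens observed right after it, and sampling uniformly from that list reproduces the empirical conditional frequencies. The same code runs at character level or word level, and only the tokenization changes. `build_ngram_model` and `generate` are hypothetical helper names.

```python
import random
from collections import defaultdict

def build_ngram_model(tokens, n):
    """Map each context of n-1 tokens to every token observed right after it (n >= 2)."""
    model = defaultdict(list)
    for i in range(len(tokens) - n + 1):
        context = tuple(tokens[i : i + n - 1])
        model[context].append(tokens[i + n - 1])
    return model

def generate(model, seed, length, rng):
    """Extend `seed` (which must hold exactly n-1 tokens) by sampling up to `length` tokens.

    Choosing uniformly from the follower list samples each next token with its
    empirical conditional frequency in the corpus.
    """
    out = list(seed)
    k = len(seed)
    for _ in range(length):
        followers = model.get(tuple(out[-k:]))
        if not followers:  # context never seen in the corpus: stop early
            break
        out.append(rng.choice(followers))
    return out

# Toy corpus, invented for this example.
corpus = "the cat sat on the mat and the dog sat on the log"
rng = random.Random(0)

# Character level: every character (including spaces) is a token; trigram model.
char_model = build_ngram_model(list(corpus), n=3)
print("".join(generate(char_model, seed="th", length=30, rng=rng)))

# Word level: whitespace-separated words are tokens; bigram model.
words = corpus.split()
word_model = build_ngram_model(words, n=2)
print(" ".join(generate(word_model, seed=["the"], length=8, rng=rng)))
```

On any realistic corpus, the word-level output tends to look far more like English than the character-level output at a comparable context size. This matches the observation above.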

For self-improvement to work, we need to:

1) generate problems of appropriate hardness, and

2) be able to filter out negative examples using a cheap verifier.

Otherwise the benefit of self-improvement collapses.
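
What counts as a cheap verifier depends on the task. The following is our own illustration and not necessarily the check used in this work. For shortest-path mazes, it is cheap to confirm that a self-generated path is at least valid: it must start and end at the right nodes and use only existing edges. `is_valid_path`, `filter_self_labels`, and the toy graph are hypothetical.

```python
def is_valid_path(adj, path, start, goal):
    """Cheap check: path starts at `start`, ends at `goal`, and every step is an edge."""
    if not path or path[0] != start or path[-1] != goal:
        return False
    return all(b in adj.get(a, ()) for a, b in zip(path, path[1:]))

def filter_self_labels(examples, adj):
    """Keep only (start, goal, path) triples whose model-proposed path passes the check."""
    return [(s, g, p) for s, g, p in examples if is_valid_path(adj, p, s, g)]

# Toy undirected line graph 0 - 1 - 2 - 3, stored as adjacency sets.
adj = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
proposals = [
    (0, 3, [0, 1, 2, 3]),  # valid
    (0, 3, [0, 2, 3]),     # rejected: 0-2 is not an edge
    (1, 3, [1, 2]),        # rejected: does not reach the goal
]
print(filter_self_labels(proposals, adj))  # [(0, 3, [0, 1, 2, 3])]
```

The check runs in time linear in the path length. It rejects invalid paths but does not certify that a path is the shortest one, so it filters out negatives rather than proving correctness.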

- Arithmetic: Reverse addition, forward (yes forward!) addition, multiplication (with CoT)

- String Manipulation: Copying, reversing

- Maze Solving: Finding shortest paths in graphs.

It always works.
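
To make the first task concrete: "reverse" addition usually means writing the answer least-significant digit first. Each output digit then comes after the lower-order digits that determine its carry. The sketch below shows the two training formats and assumes that only the answer is reversed. Conventions vary across papers, and `format_addition` is a hypothetical helper.

```python
def format_addition(a: int, b: int, reverse: bool) -> str:
    """Render a + b as one training string.

    reverse=False: answer in the usual order (most significant digit first).
    reverse=True:  answer written least significant digit first, so each digit
                   is emitted after the lower digits whose carries it depends on.
    """
    answer = str(a + b)
    if reverse:
        answer = answer[::-1]
    return f"{a}+{b}={answer}"

print(format_addition(128, 367, reverse=False))  # 128+367=495
print(format_addition(128, 367, reverse=True))   # 128+367=594
```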

What if we let the model label slightly harder data… and then train on them?

Our key idea is to use Self-Improving Transformers, where a model iteratively labels its own training data and learns from progressively harder examples (inspired by methods like STaR and ReST).
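
Here is a minimal sketch of that loop, built on deliberately toy assumptions. `ToyModel` is a hypothetical stand-in for a transformer. By construction, it answers correctly up to one digit beyond its training data (the one-step generalization described in this thread) and fails past that. `sample_problems` and the `verify` callback are also hypothetical. Each round does four things:

1. Train on the current data.
2. Let the model label problems one digit longer.
3. Filter those labels.
4. Add the survivors to the training data.

```python
import random

def sample_problems(n_digits, count, rng):
    """Hypothetical sampler: `count` random pairs of n-digit operands."""
    lo, hi = 10 ** (n_digits - 1), 10 ** n_digits - 1
    return [(rng.randint(lo, hi), rng.randint(lo, hi)) for _ in range(count)]

class ToyModel:
    """Hypothetical stand-in for a transformer: correct up to one digit past its
    longest training operands, wrong (returns -1) beyond that."""

    def __init__(self):
        self.max_trained = 0

    def train(self, dataset):
        self.max_trained = max(len(str(a)) for (a, _b), _y in dataset)

    def predict(self, a, b):
        return a + b if len(str(a)) <= self.max_trained + 1 else -1

def self_improve(model, seed_digits, rounds, per_round, verify, rng):
    # Round 0: ground-truth labels, but only for easy (seed_digits-long) problems.
    dataset = [((a, b), a + b) for a, b in sample_problems(seed_digits, per_round, rng)]
    for r in range(1, rounds + 1):
        model.train(dataset)
        # The model labels problems one digit harder than anything it has seen...
        harder = sample_problems(seed_digits + r, per_round, rng)
        labeled = [((a, b), model.predict(a, b)) for a, b in harder]
        # ...and a cheap filter drops rejected labels before they become training data.
        dataset += [ex for ex in labeled if verify(ex)]
    return dataset

rng = random.Random(0)
data = self_improve(ToyModel(), seed_digits=3, rounds=4, per_round=50,
                    verify=lambda ex: ex[1] >= 0, rng=rng)
print(max(len(str(a)) for (a, _b), _y in data))  # 7
```

With a real model the self-generated labels are noisy, which is why the filtering step matters. In this toy every label is correct, so the sketch only illustrates the schedule: starting from 3-digit problems, four rounds reach 7 digits.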

I noticed that there is a bit of transcendence, i.e., a model trained on n-digit addition can solve slightly harder problems, e.g., (n+1)-digit addition, but not much more.

(cf. transcendence in chess: arxiv.org/html/2406.11741v1)
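
One way to measure this kind of transcendence is to evaluate accuracy separately at each operand length. In the minimal sketch below, `accuracy_by_length` is hypothetical, and so is the toy predictor. By assumption, it was trained on up to 5 digits, also handles 6, and fails beyond that.

```python
import random

def accuracy_by_length(predict, max_digits, samples, rng):
    """Accuracy of `predict` on random n-digit additions, for n = 1..max_digits."""
    results = {}
    for n in range(1, max_digits + 1):
        lo, hi = 10 ** (n - 1), 10 ** n - 1
        correct = 0
        for _ in range(samples):
            a, b = rng.randint(lo, hi), rng.randint(lo, hi)
            correct += predict(a, b) == a + b
        results[n] = correct / samples
    return results

def toy_predict(a, b, trained_digits=5):
    """Hypothetical model trained on up to 5-digit addition that, by assumption,
    also handles 6 digits and fails beyond that."""
    return a + b if len(str(a)) <= trained_digits + 1 else None

print(accuracy_by_length(toy_predict, max_digits=8, samples=100, rng=random.Random(0)))
# {1: 1.0, 2: 1.0, 3: 1.0, 4: 1.0, 5: 1.0, 6: 1.0, 7: 0.0, 8: 0.0}
```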

(Figure from: Cho et al., arxiv.org/abs/2405.20671)

But I think multiplication, addition, maze solving, and easy-to-hard generalization are actually solvable on standard transformers... with recursive self-improvement.

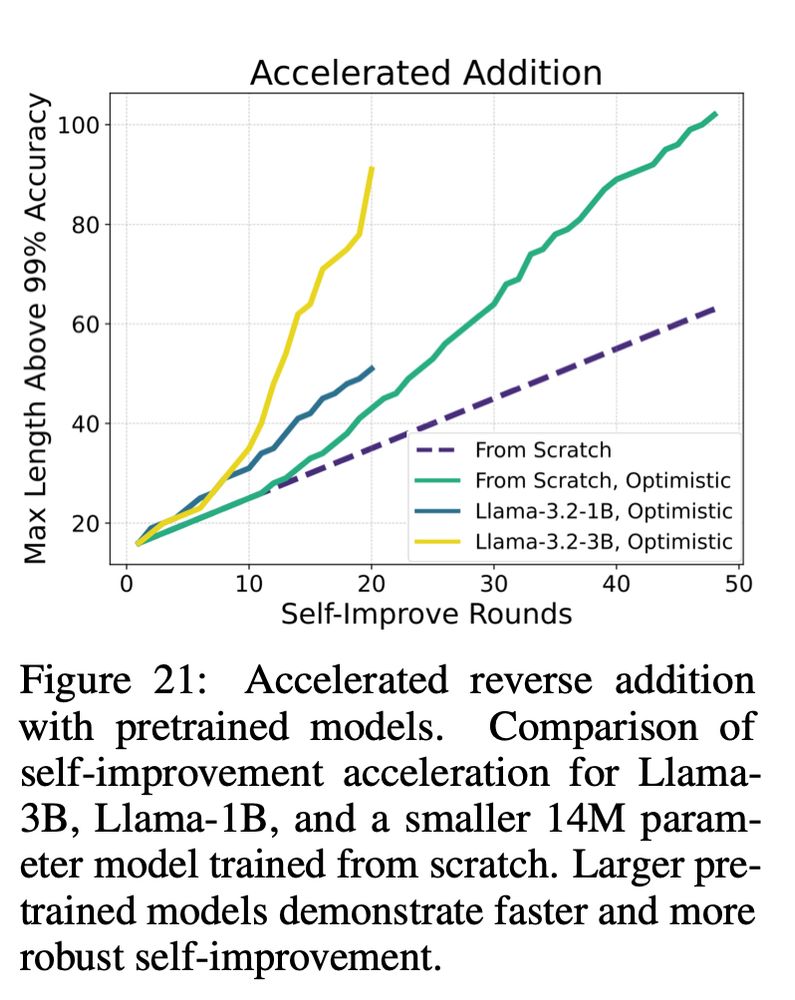

Below is the acc of a tiny model teaching itself how to add and multiply
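
Multiplication is trained with chain of thought (CoT). The thread does not specify the CoT format, so the following is a hypothetical one of our own. It writes one partial product per digit of the second operand, keeps a running total, and ends with the final answer. `multiplication_cot` is an illustrative helper, not necessarily the format used in this work.

```python
def multiplication_cot(a: int, b: int) -> str:
    """Hypothetical CoT target: partial products of `a` with each digit of `b`
    (least significant first), each followed by the running sum."""
    steps = []
    total = 0
    for place, digit in enumerate(reversed(str(b))):
        partial = a * int(digit) * 10 ** place
        total += partial
        steps.append(f"{a}*{digit}*10^{place}={partial}, running={total}")
    return f"{a}*{b}: " + "; ".join(steps) + f" => {total}"

print(multiplication_cot(123, 45))
# 123*45: 123*5*10^0=615, running=615; 123*4*10^1=4920, running=5535 => 5535
```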

Paper on arXiv coming on Monday.

Link to a talk I gave on this below 👇

Super excited about this work!

Talk: youtube.com/watch?v=szhE...

Slides: tinyurl.com/SelfImprovem...

Here's a new one for me: drawing with Logo (yes, the turtle)!

To be fair, drawing with Logo is hard. But... here are 8 examples with Sonnet 3.6 vs. o1.

Example 1/8: Draw the letter G
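
In Logo you draw by emitting turtle commands rather than pixels. That is presumably part of why it is hard: the program has to keep track of position and heading implicitly. The sketch below is a minimal interpreter for a small Logo subset (`FD`, `BK`, `RT`, `LT`, `REPEAT n [ ... ]`), written for this note. It returns the points the turtle visits, so a generated program can be checked without a graphics window. `run_logo` is hypothetical, and real Logo dialects support far more.

```python
import math

def run_logo(program):
    """Interpret a tiny Logo subset and return the points the turtle visits.
    The turtle starts at the origin facing up; RT turns clockwise, LT counterclockwise."""
    tokens = program.replace("[", " [ ").replace("]", " ] ").split()
    state = {"x": 0.0, "y": 0.0, "heading": 90.0}  # heading in degrees, 90 = up
    points = [(0.0, 0.0)]

    def block_end(start):
        """Index of the ']' matching the '[' at tokens[start]."""
        depth = 0
        for j in range(start, len(tokens)):
            if tokens[j] == "[":
                depth += 1
            elif tokens[j] == "]":
                depth -= 1
                if depth == 0:
                    return j
        raise ValueError("unbalanced brackets")

    def execute(i, end):
        while i < end:
            cmd = tokens[i].upper()
            if cmd == "REPEAT":
                count, open_idx = int(tokens[i + 1]), i + 2
                close_idx = block_end(open_idx)
                for _ in range(count):
                    execute(open_idx + 1, close_idx)  # run the bracketed body
                i = close_idx + 1
                continue
            arg = float(tokens[i + 1])
            if cmd in ("FD", "BK"):
                step = arg if cmd == "FD" else -arg
                rad = math.radians(state["heading"])
                state["x"] += step * math.cos(rad)
                state["y"] += step * math.sin(rad)
                points.append((round(state["x"], 6), round(state["y"], 6)))
            elif cmd == "RT":
                state["heading"] -= arg
            elif cmd == "LT":
                state["heading"] += arg
            else:
                raise ValueError(f"unsupported command: {cmd}")
            i += 2

    execute(0, len(tokens))
    return points

square = run_logo("REPEAT 4 [FD 100 RT 90]")
print(square)
```

A drawing of the letter G would be a longer sequence of the same commands. Comparing the resulting points against the intended shape is one way to grade model outputs automatically.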

arxiv.org/pdf/2501.09240

Kangwook Lee's group

x.com/Kangwook_Lee...

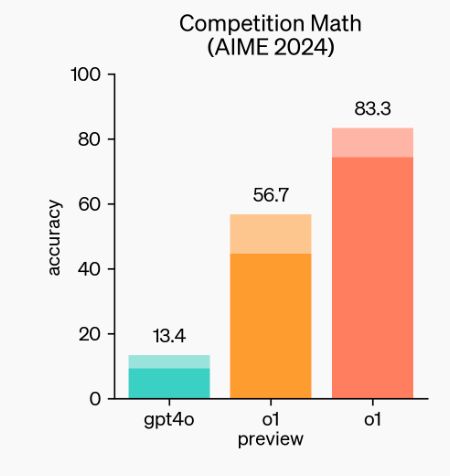

It scored 93.3%.

It got 14 out of 15 questions on both the I and II versions.
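
The two figures are consistent. Getting 14 of 15 on each version is 28 of 30 overall, which is the same fraction:

$$\frac{14 + 14}{15 + 15} = \frac{28}{30} = \frac{14}{15} \approx 93.3\%$$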

Uhm, wow.
