By Xianghui Xie, Jan Eric Lenssen, Gerard Pons-Moll

Project: virtualhumans.mpi-inf.mpg.de/MVGBench/

By Ada Görgün, Bernt Schiele, Jonas Fischer

Project: adagorgun.github.io/VITAL-Project/

By Eyad Alshami, Shashank Agnihotri, Bernt Schiele, Margret Keuper

By O. Dünkel, T. Wimmer, C. Theobalt, C. Rupprecht, A. Kortylewski

Page: genintel.github.io/DIY-SC

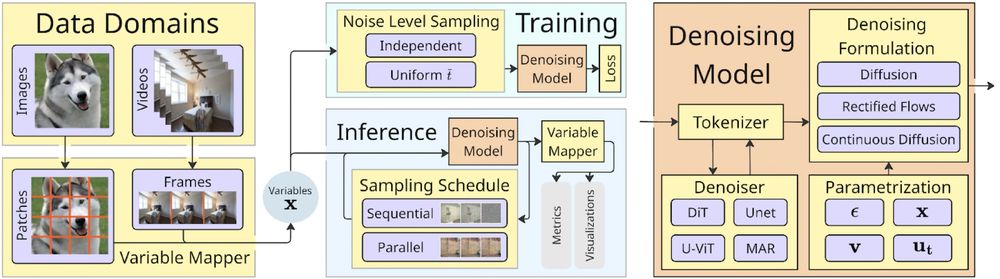

🔍 Software framework for training Spatial Reasoning Models in any domain

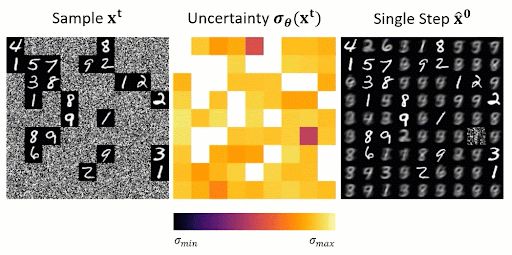

🔍 Can image generators solve visual Sudoku? Naively, no. But with sequentialization and the correct order, they can!

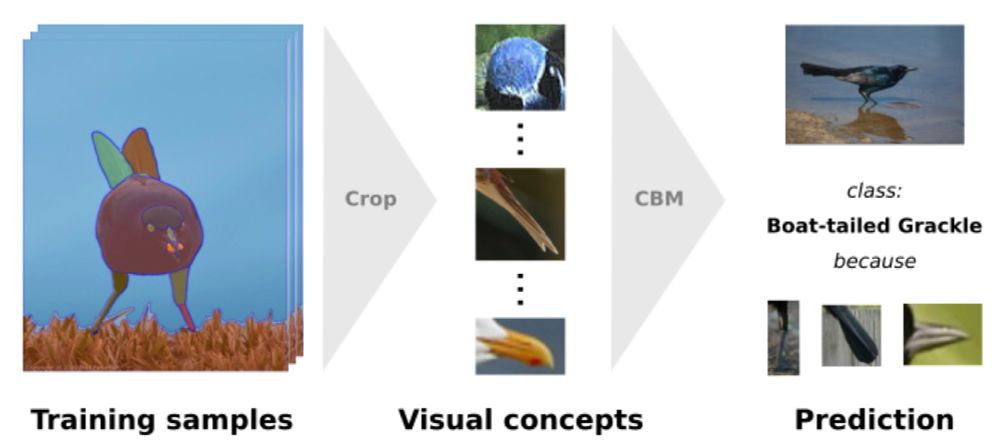

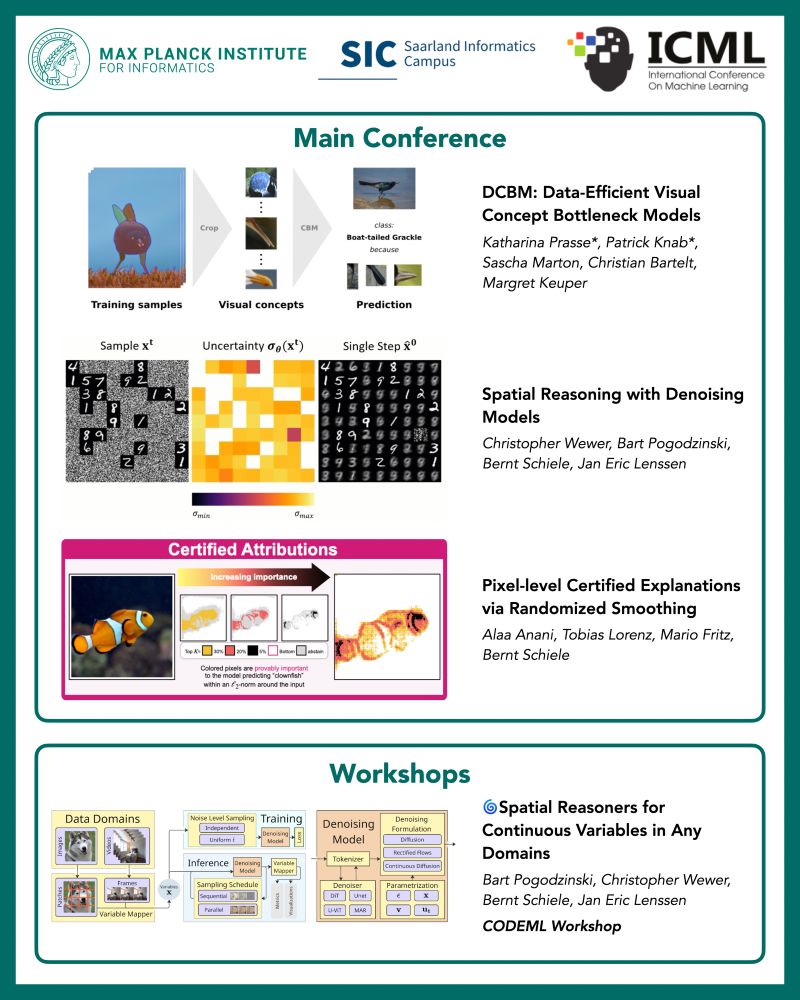

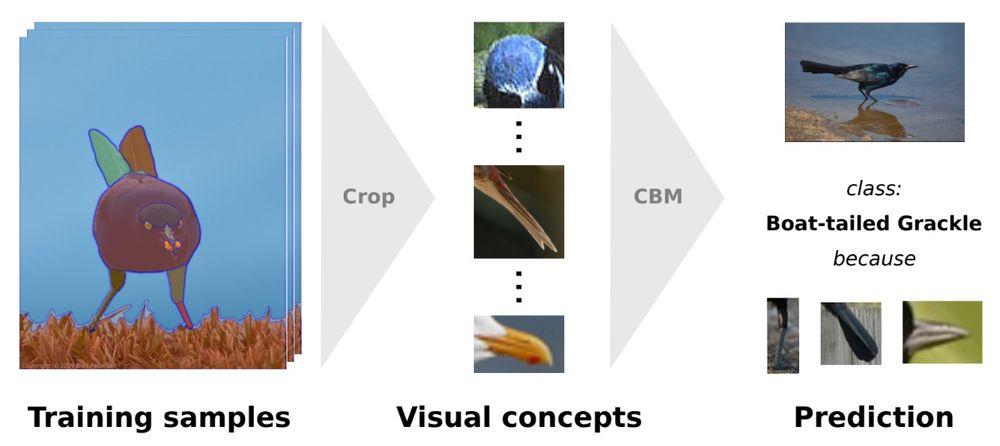

🔍 Data-efficient CBMs (DCBMs) generate concepts from image regions detected by segmentation or detection models

Congratulations to all the authors! To learn more, visit us at the poster sessions!

A 🧵with more details:

@icmlconf.bsky.social @mpi-inf.mpg.de

He is now at Genmo.ai as a Research Engineer working on video generation! 🚀

More: yue-fan.github.io

All the best!

His thesis is titled: Learning to Track: From Limited Supervision to Long-range Sequence Modeling

Check out his webpage to learn more about his work: mattiasegu.github.io

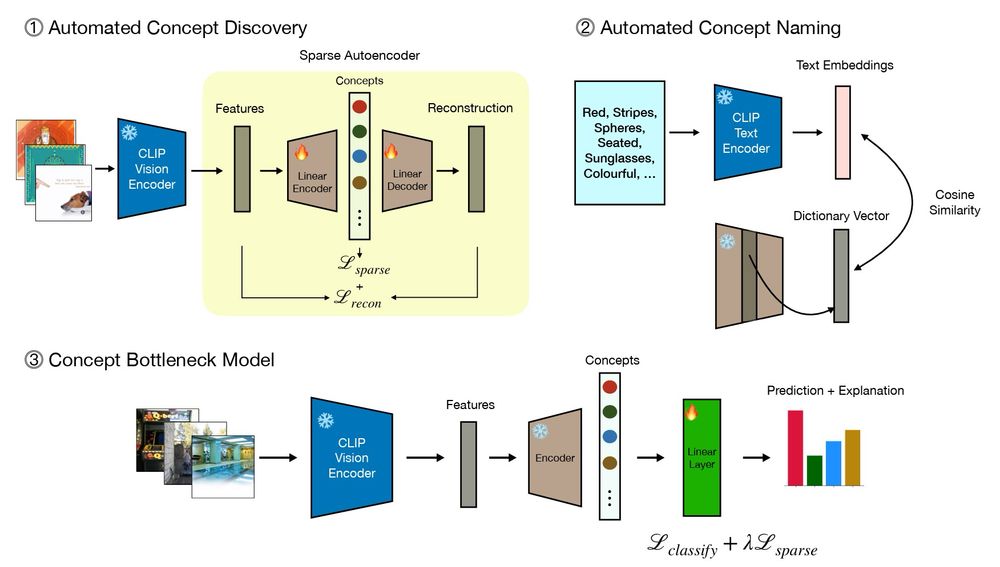

Authors: S. Rao, S. Mahajan, M. Böhle, B. Schiele

🔍 Explore sparse autoencoders to automatically extract and name concepts, enabling performance improvements on downstream tasks.

📚 arxiv.org/abs/2407.14499

Authors: T. Medi*, A. Rampini, P. Reddy, P. K. Jayaraman, M. Keuper

🔍 3D-WAG introduces wavelet-guided autoregressive generation for 3D shapes, aiming for better geometry modeling.

📚 arxiv.org/abs/2411.19037

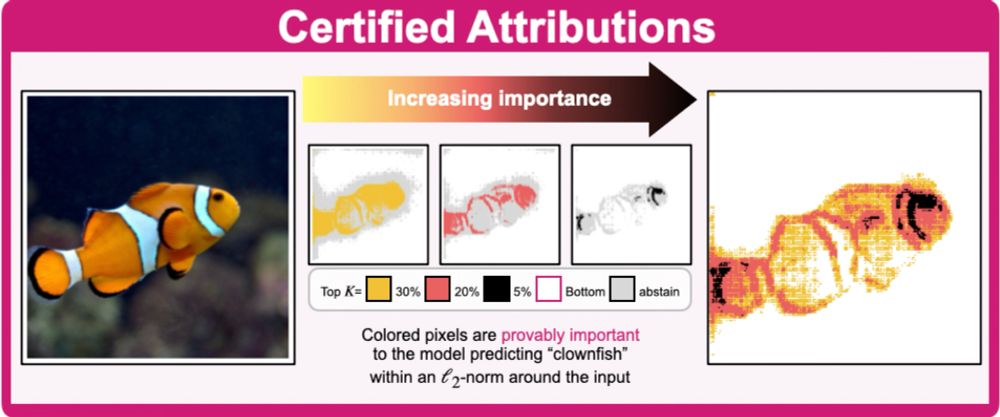

Authors: K. Prasse, P. Knab, S. Marton, C. Bartelt, M. Keuper

🔍 Introducing data-efficient visual concept bottleneck models for improved explainability in CV.

📚 arxiv.org/abs/2412.11576

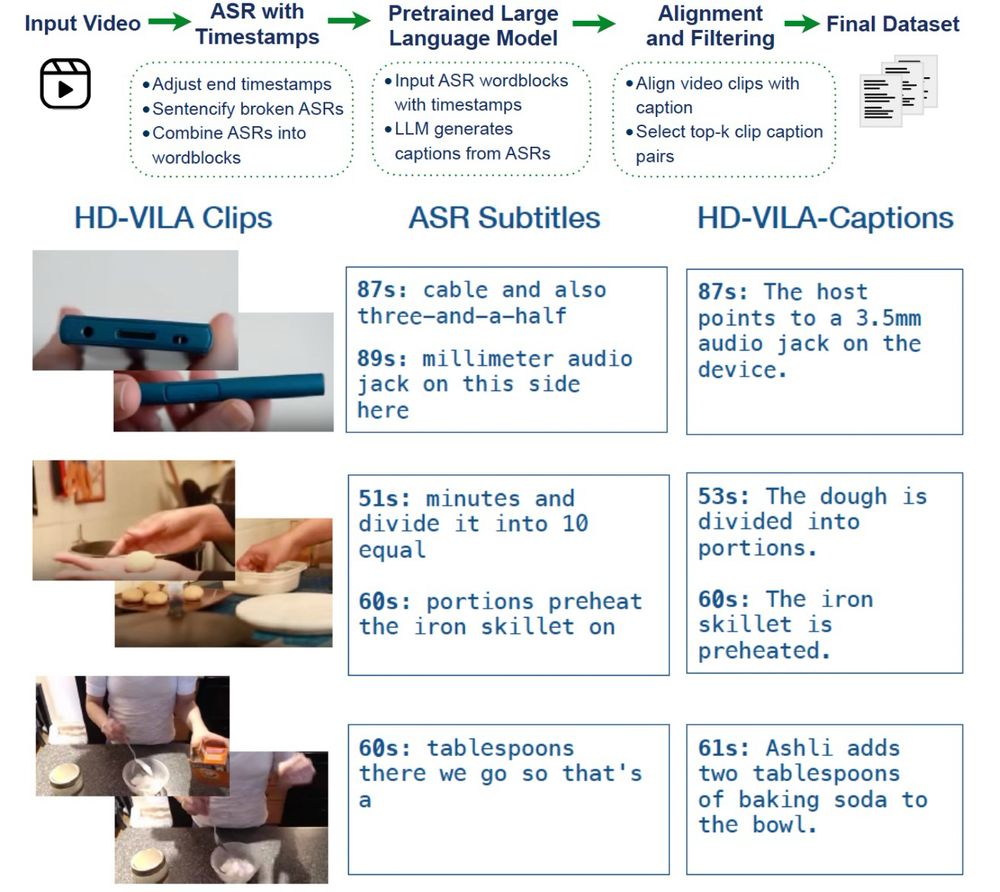

By: M. Saleh, N. Shvetsova, A. Kukleva, H. Kuehne, B. Schiele

🔍 HD-VILA-Caption is a large-scale, diverse video-text dataset with 10M high-quality captions, built from ASR subtitles for video-language pretraining.

By: M. Fatima, S. Jung, M. Keuper

🔍 Exploring how object size & position affect ImageNet-trained models and lead to performance issues.

📚 openreview.net/forum?id=B6l...

By: S. Agnihotri, D. Schader, N. Sharei, M.E. Kaçar, M. Keuper

🔍 Investigating if synthetic corruptions can be a reliable proxy for real-world ones, and understanding their limitations.

📚 https://www.arxiv.org/abs/2505.04835

By: S. Agnihotri, A. Ansari, A. Dackermann, F. Rösch, M. Keuper

🔍 A benchmark & tool for testing disparity estimation methods against synthetic corruptions & attacks.

📚 arxiv.org/abs/2505.050...

👏 Huge congrats to our members on these workshop paper acceptances! Excited to see their work at #CVPR2025 🌟

#MPI-INF #D2 #Workshop #AI #ComputerVision #PhD

@mpi-inf.mpg.de

Authors: Y. Wu*, X. Hu*, Y. Sun, Y. Zhou, W. Zhu, F. Rao, B. Schiele, X. Yang

🔍 NumPro enhances Video-LLMs in temporal grounding with red number markers, like flipping manga!

📚 arxiv.org/abs/2411.10332

By: X. Hu*, H. Wang*, J.E. Lenssen, B. Schiele

🔍 PersonaHOI is the first training-free framework to generate human-object interactions with personalized faces.

📚 arxiv.org/abs/2501.05823

By: M. Asim, C. Wewer, T. Wimmer, B. Schiele, J.E. Lenssen

🔍 MEt3R is a metric for multi-view consistency in generated image sequences & videos.

📚 arxiv.org/abs/2501.06336

🔗 geometric-rl.mpi-inf.mpg.de/met3r/

By: N. Shvetsova, A. Nagrani, B. Schiele, H. Kuehne, C. Rupprecht

🔍 UTD uses VLMs/LLMs to mitigate representation bias in video benchmarks, ensuring fairer evaluations.

📚 arxiv.org/abs/2503.18637

📱 @ninashv.bsky.social @hildekuehne.bsky.social

By: J. Xie, A. Tonioni, N. Rauschmayr, F. Tombari, B. Schiele

🔍 VICT adapts VICL models with a single test sample, enhancing generalizability for unseen tasks.

📚 arxiv.org/abs/2503.21777

🔗 github.com/Jiahao000/VICT
