prev: DeepMind, UMich, MSR

https://cogscikid.com/

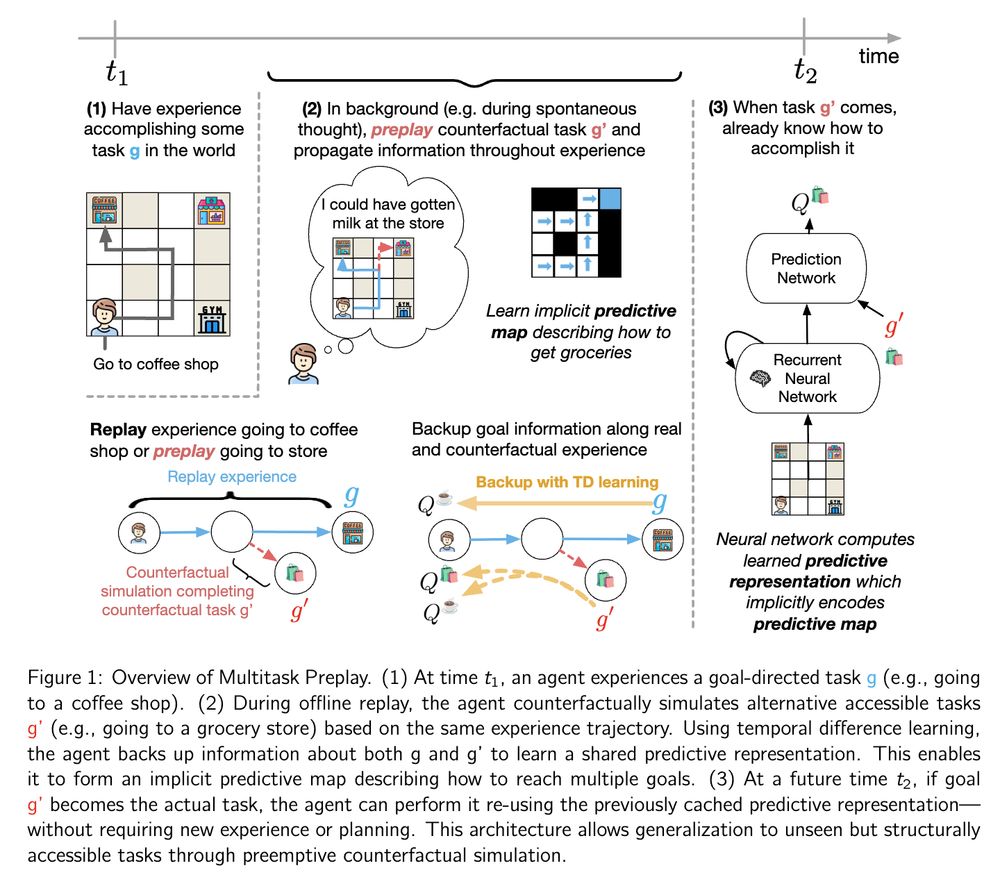

Basic idea: when learning one task, "preplay" other tasks to learn implicit goal-oriented maps that support fast performance later.

This improves AI and predicts human behavior in naturalistic experiments!
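To make the "preplay" idea concrete, here is a toy, tabular sketch. It is not the Multitask Preplay implementation from the paper. It assumes a hypothetical 5x5 gridworld whose known, deterministic transition function (`step`) stands in for a learned world model. While the agent gathers real experience for its current goal, it also simulates short rollouts toward other accessible goals and updates a separate goal-conditioned Q-table for each one. All names (`train`, `step`, `act`, `q_update`) and hyperparameters are illustrative assumptions.

```python
import random

# Hypothetical 5x5 gridworld: states are (row, col); moves are clipped at the border.
SIZE = 5
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def step(state, action):
    """Deterministic transition; stands in for a learned world model (assumption)."""
    r = min(max(state[0] + ACTIONS[action][0], 0), SIZE - 1)
    c = min(max(state[1] + ACTIONS[action][1], 0), SIZE - 1)
    return (r, c)


def new_q():
    """Tabular Q-values for one goal: q[state][action], initialised to zero."""
    return {(r, c): [0.0] * len(ACTIONS) for r in range(SIZE) for c in range(SIZE)}


def act(q, state, eps, rng):
    """Epsilon-greedy action selection with random tie-breaking."""
    if rng.random() < eps:
        return rng.randrange(len(ACTIONS))
    best = max(q[state])
    return rng.choice([a for a, v in enumerate(q[state]) if v == best])


def q_update(q, s, a, s2, goal, lr, gamma):
    """One Q-learning update; reaching the goal gives reward 1 and terminates."""
    done = s2 == goal
    target = 1.0 if done else gamma * max(q[s2])
    q[s][a] += lr * (target - q[s][a])
    return done


def train(task_goal, other_goals, preplay, episodes=200, horizon=50,
          rollout_len=20, eps=0.2, lr=0.5, gamma=0.95, seed=0):
    rng = random.Random(seed)
    qs = {g: new_q() for g in [task_goal, *other_goals]}
    for _ in range(episodes):
        s = (0, 0)
        for _ in range(horizon):
            # Real experience: only the current task's goal is pursued and rewarded.
            a = act(qs[task_goal], s, eps, rng)
            s2 = step(s, a)
            done = q_update(qs[task_goal], s, a, s2, task_goal, lr, gamma)
            if preplay:
                # Preplay: from the current real state, simulate pursuing each other
                # accessible goal and update that goal's Q-values offline.
                for g in other_goals:
                    sim = s
                    for _ in range(rollout_len):
                        sa = act(qs[g], sim, eps, rng)
                        sim2 = step(sim, sa)
                        if q_update(qs[g], sim, sa, sim2, g, lr, gamma):
                            break
                        sim = sim2
            if done:
                break
            s = s2
    return qs
```

For example, `train((4, 4), [(0, 4)], preplay=True)` trains only on reaching `(4, 4)`, yet the Q-table for the unpursued goal `(0, 4)` also acquires value; with `preplay=False` that table stays all zeros. Using separate per-goal tables keeps the sketch simple; a function-approximation version would instead condition one network on the goal.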

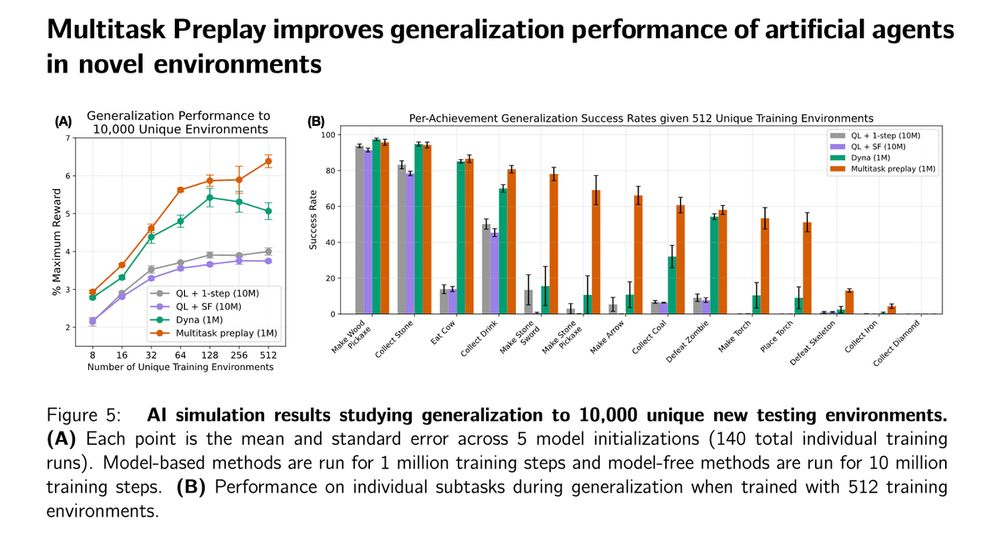

We present AI simulations where Multitask Preplay improves generalization of complex, long-horizon behaviors to 10,000 unique new environments when they share subtask co-occurrence structure.

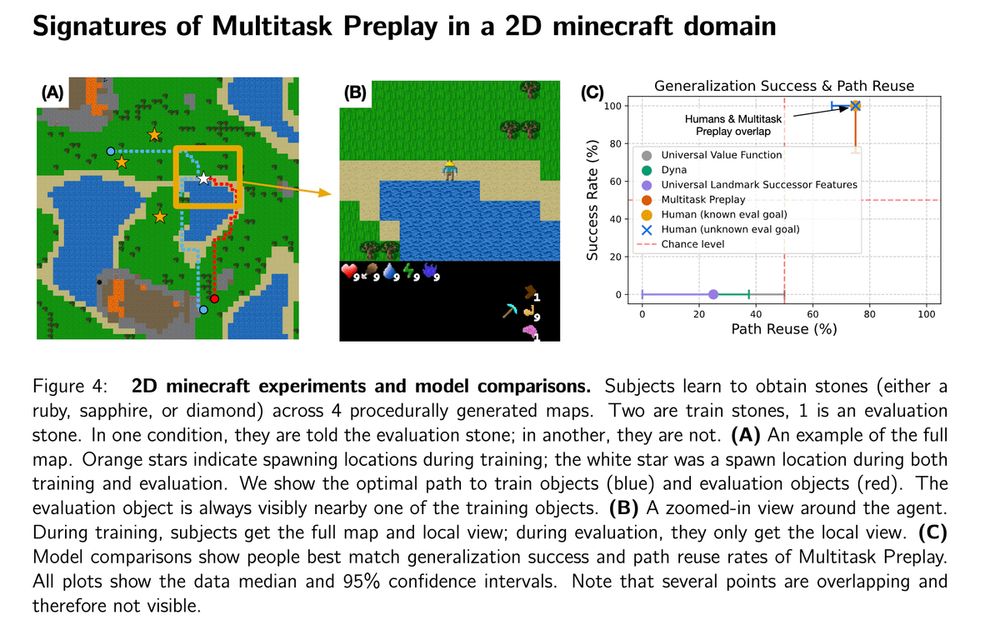

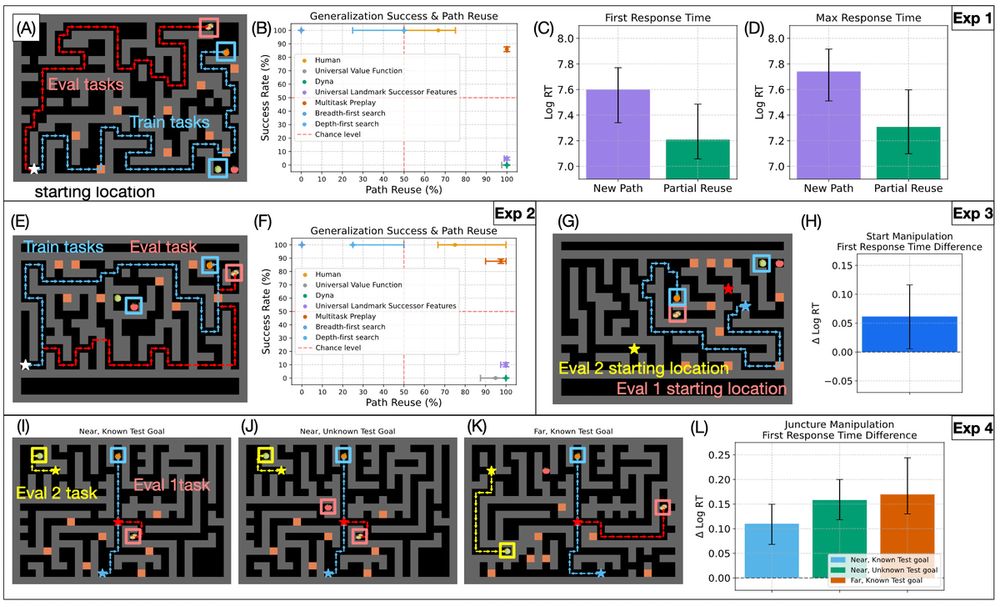

Here, we once again find evidence that people preplay completion of tasks that were accessible but unpursued, but now in a much larger world where generalization to new tasks is much harder.

arxiv.org/abs/2507.05561

In particular, we emphasize frictionless reproducibility, generalizability-focused model development, and the utility of hypothesis-driven benchmarks.

To help readers, we provide an outline of our complete argument as our first figure.

arxiv.org/abs/2502.20349

We're calling this direction "Naturalistic Computational Cognitive Science"

ece.engin.umich.edu/event/invite...

Importantly, this supports the popular JaxMARL (multi-agent RL) codebase and all of its environments.

Are there any examples the community would like to see?

The first example we have allows a human to control an agent in "Crafter", a 2D Minecraft-like environment.

To make it easier for researchers spanning cognitive science, multi-agent RL, and HCI to do this, I've made nicewebrl

github.com/wcarvalho/ni...