Ignacio Castaño🍉

@castano.mastodon.gamedev.place.ap.brid.gy

Master swimmer, mountain climber, and stand up coder.

I work on texture processing tools at Ludicon.

Formerly at Roblox, Thekla (The Witness), NVIDIA […]

🌉 bridged from https://mastodon.gamedev.place/@castano on the fediverse by https://fed.brid.gy/

Reposted by Ignacio Castaño🍉

Many years ago I threw together a simple command-line tool, SDFGen, to compute an approximate, grid-based signed distance field from triangle meshes, wrapping some code my PhD supervisor had originally written.

I was contacted today by someone who heavily rewrote it for GPU support, and cleaned […]

Original post on mastodon.acm.org

mastodon.acm.org

November 6, 2025 at 7:36 PM

Ghost of Yōtei looks pretty, but I suspect the developers have not spent much time walking on deep snow.

November 2, 2025 at 6:15 AM

My solution is very simple. I use the NOAPI target with some local changes, since that's not officially supported on Android. Then I simply create the VkInstance in sokol_main, and the device in the init() callback.

I only had to expose a sapp_android_get_native_window() entrypoint in order to […]

Original post on mastodon.gamedev.place

mastodon.gamedev.place

October 24, 2025 at 4:27 PM

I’ve always felt a bit bitter about not getting much recognition for NVTT. I know it’s been used in tons of games, but most of them don’t give me any credit.

That’s why it was such a pleasant surprise the other day when a developer personally thanked me for it. He was working on a remake of an […]

Original post on mastodon.gamedev.place

mastodon.gamedev.place

October 22, 2025 at 4:53 AM

Oh, Firefox, what have you done... 😞

October 14, 2025 at 6:57 AM

“Trump tried to privately message Attorney General Pam Bondi on social media to demand she prosecute his political opponents, but instead he posted it publicly”

I thought that only happened on Mastodon 😂

https://mastodon.social/@wtfjht/115346798588736029

WTF Just Happened Today? (@wtfjht@mastodon.social)

WTF just happened today? ⚡️ Day 1724: "Simply unreliable." https://whatthefuckjusthappenedtoday.com/2025/10/09/day-1724/

mastodon.social

October 10, 2025 at 12:48 AM

For some reason I thought WebGL did not support pixel buffer objects. Turns out I was wrong. It supports PBOs with some restrictions that do not really affect my use case. So, today this happened:

October 8, 2025 at 11:11 PM

Ludicon turns 3 today.

A year ago I wrote a blog post that I never published. In it I celebrated a few achievements, but also lamented my failure to build a sustainable business.

Looking back now, I see how far I’ve come. After some tough setbacks, I felt gloomy about my prospects and was […]

Original post on mastodon.gamedev.place

mastodon.gamedev.place

October 4, 2025 at 2:13 AM

Weird to see no mention of Oklab and Oklch considering their recent popularity.

https://mastoxiv.page/@arXiv_csCV_bot/115304103352555964

arXiv cs.CV bot (@arXiv_csCV_bot@mastoxiv.page)

Color Models in Image Processing: A Review and Experimental Comparison Muragul Muratbekova, Nuray Toganas, Ayan Igali, Maksat Shagyrov, Elnara Kadyrgali, Adilet Yerkin, Pakizar Shamoi https://arxiv.org/abs/2510.00584 https://arxiv.org/pdf/2510.00584 https://arxiv.org/html/2510.00584 arXiv:2510.00584v1 Announce Type: new Abstract: Color representation is essential in computer vision and human-computer interaction. There are multiple color models available. The choice of a suitable color model is critical for various applications. This paper presents a review of color models and spaces, analyzing their theoretical foundations, computational properties, and practical applications. We explore traditional models such as RGB, CMYK, and YUV, perceptually uniform spaces like CIELAB and CIELUV, and fuzzy-based approaches as well. Additionally, we conduct a series of experiments to evaluate color models from various perspectives, like device dependency, chromatic consistency, and computational complexity. Our experimental results reveal gaps in existing color models and show that the HS* family is the most aligned with human perception. The review also identifies key strengths and limitations of different models and outlines open challenges and future directions. This study provides a reference for researchers in image processing, perceptual computing, digital media, and any other color-related field.

mastoxiv.page

October 2, 2025 at 11:32 PM

The spark gltf demo works great on the Quest Browser, but I thought it would be cool to port it to VR.

Unfortunately it looks like three.js doesn't support WebXR on the WebGPURenderer:

https://github.com/mrdoob/three.js/issues/28968

Is that right? Does anyone know the status of #webxr with […]

Original post on mastodon.gamedev.place

mastodon.gamedev.place

September 29, 2025 at 7:39 PM

Is there a website that I can direct people to for instructions on how to enable WebGPU on their systems?

For example, in some browsers it just requires enabling a configuration option. In others you have to start the browser with a launch argument, and in some cases the only solution is […]

Original post on mastodon.gamedev.place

mastodon.gamedev.place

September 24, 2025 at 5:40 PM

@ezivkovic This is really unfortunate. WebGPU already works great under Sequoia using either Safari 18 or Safari Technology Preview. The decision to disable it in Safari 26 under Sequoia does not seem technically motivated.

The main obstacle to WebGPU’s adoption is limited browser support, and […]

Original post on mastodon.gamedev.place

mastodon.gamedev.place

September 21, 2025 at 11:12 PM

I got some feedback about poor behavior on slow connections and lack of feedback. Apparently my server is pretty slow from hotel rooms in Japan.

I've addressed these by adding a progress bar and disabling the UI while the models are loading.

I'm new […]

[Original post on mastodon.gamedev.place]

September 17, 2025 at 4:55 AM

The demo I’ve been working on is finally online:

https://ludicon.com/sparkjs/gltf-demo/

It demonstrates the benefits of real-time texture compression in 3D web apps. It allows you to compare models using traditional KTX2 textures (UASTC and ETC1S) with the same assets compressed as AVIF + […]

Original post on mastodon.gamedev.place

mastodon.gamedev.place

September 15, 2025 at 11:00 PM

Upcoming example is looking good! Adding a user interface with HTML was pretty easy.

September 13, 2025 at 12:24 AM

New blog post about my experiences using spark.js with three.js:

https://www.ludicon.com/castano/blog/2025/09/three-js-spark-js/

Would appreciate boosts and comments!

three.js + spark.js

three.js r180 was released last week and among the many improvements, the one that I’m most excited about is a series of changes by Don McCurdy that enable the use of textures encoded with spark.js in three.js:

* Support `ExternalTexture` with `GPUTexture` #31653

* `ExternalTexture`: Support `copy()`, `clone()`. #31731

Support for `ExternalTexture` objects in the WebGPU backend allows you to wrap a `GPUTexture` object, such as the one produced by `Spark.encodeTexture`, and use it directly in three.js. This makes it straightforward to work with Spark-encoded textures.

Here’s a brief example:

// Load and encode texture using spark:

const gpuTexture = await spark.encodeTexture(textureUrl, { srgb: true, flipY: true });

// Wrap the GPUTexture for three.js

const externalTex = new THREE.ExternalTexture(gpuTexture);

// Use as any other texture:

const material = new THREE.MeshBasicMaterial({ map: externalTex });

With this feature in place, I began testing spark.js in more complex scenarios. The first thing I wanted was a GLTF viewer example. With some help from Don McCurdy I was able to get something running quickly, and after a bit of polish, I’m very happy with the results. It requires only minimal changes to existing code.

To simplify integration, the spark.js node package includes an addon that you import explicitly:

import { Spark } from "@ludicon/spark.js";

import { registerSparkLoader } from "@ludicon/spark.js/three-gltf";

Then use the imported function to register Spark with an existing `GLTFLoader` instance:

const loader = new GLTFLoader()

registerSparkLoader(loader, spark)

And that’s it! After registration, the loader will automatically encode textures with Spark whenever applicable.

This exercise was very useful in identifying some issues in the initial spark.js implementation, as well as some limitations in three.js.

## Concurrent Image Decoding

The initial performance results of the Spark texture loader were not surprising. I expected it to be a bit slower than the default loader, since Spark needs to dispatch its codecs to the GPU, and the first measurements seemed to confirm this.

After profiling, however, I discovered the reason why the Spark texture loader was slower: the input textures were being decoded sequentially in the main thread instead of being offloaded to a separate thread.

Fixing this was surprisingly simple. To get the image data I was using a DOM Image object and the solution was to simply set the `decoding` attribute to `"async"`:

img.decoding = "async"

While digging into this, I also learned that the recommended approach is to use the createImageBitmap API. Both approaches worked well, but the latter appeared to be slightly faster in practice, while producing the same results. The only difference I found is that the `Image` object supported rendering of SVG files while the `createImageBitmap` function did not, so to maintain feature parity I use `Image` objects for SVG files, and `createImageBitmap` for other image types.
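The split between the two decoding paths could look roughly like the sketch below. This is not the spark.js implementation: `isSvg` and `decodeImage` are hypothetical names, and detecting SVG files from the URL extension or content type is an assumption made for illustration. `decodeImage` relies on browser APIs (`Image`, `fetch`, `createImageBitmap`) and is only meant to run in a page.

```javascript
// Hypothetical helper: decide whether a resource should take the SVG path.
function isSvg(url, contentType = "") {
  if (contentType.toLowerCase().startsWith("image/svg+xml")) return true;
  // Ignore the query string and fragment when checking the extension.
  const path = url.split(/[?#]/)[0];
  return path.toLowerCase().endsWith(".svg");
}

// Browser-only sketch: decode an image without blocking the main thread.
async function decodeImage(url) {
  if (isSvg(url)) {
    // createImageBitmap did not handle SVG in the author's tests,
    // so fall back to an Image element with asynchronous decoding.
    const img = new Image();
    img.decoding = "async";
    img.src = url;
    await img.decode(); // resolves once the image is decoded
    return img;
  }
  // Every other image type goes through createImageBitmap.
  const response = await fetch(url);
  return createImageBitmap(await response.blob());
}
```

Cross-origin handling and error reporting are left out to keep the sketch short.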

With these changes I was pleased to see that the performance of the Spark texture loader was as good as the regular texture loader and in some cases even faster! Texture encoding happens asynchronously on the GPU, which is usually idle during load time, so the extra work doesn’t add noticeable overhead to the main thread.

Both three.js and spark.js need to generate mipmaps for these textures. Spark does this using compute shaders immediately after decoding, while three.js uses fragment shaders and does so at a later stage when the scene is evaluated. These differences may explain why Spark sometimes has a performance advantage, though I’m not familiar enough with the three.js internals to say for certain.

## RG Normal Map Support

Another surprise was that three.js did not support normal maps stored in two-channel textures. This is a very common practice: most engines use two-channel compressed textures for normals, but in three.js the material shaders expect RGB values representing the packed XYZ coordinates.

In practice, it’s more efficient to store only the XY coordinates and reconstruct the Z by projecting it onto the hemisphere:

normal.z = sqrt(saturate(1.0 - dot(normal.xy, normal.xy)));
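The same reconstruction can be tried on the CPU. The sketch below mirrors the shader expression above in plain JavaScript; the function names are made up for illustration, and the `[0, 1]` to `[-1, 1]` unpacking assumes the usual unsigned normal map encoding.

```javascript
// Clamp a value to the [0, 1] range, like saturate() in shading languages.
function saturate(v) {
  return Math.min(Math.max(v, 0), 1);
}

// Rebuild a unit normal from its X and Y components, assuming Z >= 0.
function reconstructNormal(x, y) {
  const z = Math.sqrt(saturate(1 - (x * x + y * y)));
  return [x, y, z];
}

// Assumption: texel values are stored as unsigned values in [0, 1].
function unpackNormalRG(r, g) {
  return reconstructNormal(r * 2 - 1, g * 2 - 1);
}
```

The `saturate` call matters for texels where compression error pushes `x² + y²` slightly above 1, which would otherwise produce a NaN.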

The XY normal map coordinates are usually not correlated, so block compression using a single plane doesn’t work very well. Early implementations used DXT5 textures using the Alpha block and the Green channel of the color block to encode the X and Y coordinates independently. Jan Paul van Waveren and I analyzed this scheme in our Real-Time Normal Map DXT Compression paper back in 2008.

Today, we have specialized two-channel compression formats that are ideal for encoding normal maps: BC5 on PC and EAC_RG11 on mobile. Even with more general formats like BC7 and ASTC, it’s beneficial to use two-channel encodings. For example, when using ASTC, the Arm ASTC Encoder guidelines recommend packing normals as XXXY in order to use the Luminance-Alpha endpoint mode.
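To make the packing schemes concrete, here is a small sketch of how the X and Y components could be laid out in an RGBA texel for each of them. The layout names and functions are hypothetical, and the DXT5 variant assumes the common convention of X in alpha and Y in green; it is not taken from three.js or spark.js.

```javascript
// Pack X and Y (each in [-1, 1]) into an RGBA texel with values in [0, 1].
function packNormalXY(x, y, layout) {
  const px = x * 0.5 + 0.5;
  const py = y * 0.5 + 0.5;
  switch (layout) {
    case "rg":     return [px, py, 0, 1];   // BC5 / EAC RG: X in red, Y in green
    case "dxt5nm": return [0, py, 0, px];   // DXT5: X in alpha, Y in green (assumed)
    case "xxxy":   return [px, px, px, py]; // ASTC: luminance = X, alpha = Y
    default: throw new Error(`Unknown layout: ${layout}`);
  }
}

// Recover X and Y from a texel packed with the same layout.
function unpackNormalXY(rgba, layout) {
  const [r, g, , a] = rgba;
  switch (layout) {
    case "rg":     return [r * 2 - 1, g * 2 - 1];
    case "dxt5nm": return [a * 2 - 1, g * 2 - 1];
    case "xxxy":   return [r * 2 - 1, a * 2 - 1];
    default: throw new Error(`Unknown layout: ${layout}`);
  }
}
```

In every layout the two components end up in channels that the format compresses independently, which is the point of these schemes.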

To support these packing schemes I’ve submitted a PR to three.js, which is currently being reviewed and which I hope will land in the upcoming r181 release.

This feature benefits all three.js developers working with compressed textures, but to improve the quality of existing assets with offline-compressed textures it’s necessary to recompress them. In contrast, spark.js will leverage this feature as soon as it becomes available, automatically improving the quality of existing normal maps without any additional work.

## Performance Results

Measuring performance on web applications is tricky. Execution is very asynchronous, so when timing a piece of code it’s hard to be sure of what you are timing exactly. You could be timing extraneous tasks that happen to be scheduled in between your timing measurements, and code that you think you are measuring may actually be evaluated at a later point.

The Chrome profiler has proven very useful to understand unexpected timing results. I wish the GPU timeline displayed the GPU debug group annotations, but in my tests the GPU usage is so sparse that it’s easy to infer what’s executing on it.

To keep things simple I decided to load a single GLTF file and measure the FCP (first contentful paint), that is, the time to the first rendered frame. In this particular test, though, the first frame is not rendered until _all_ the contents have finished loading. To estimate how much of that time can be attributed to the GLTF loading, I also measured the FCP of the same scene without the GLTF file and subtracted it. Results are somewhat noisy, especially on mobile, so I ran each test at least 3 times and picked the best. I also let the devices cool down between tests to prevent throttling.
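The bookkeeping described here is simple enough to sketch. The function name is hypothetical, "best" is interpreted as the lowest time, and the sample numbers are made up for illustration; they are not measurements from this post.

```javascript
// Estimate the load time attributable to the model: best run with the model
// minus best run of the same scene without it. All values in milliseconds.
function attributedLoadTime(runsWithModel, runsWithoutModel) {
  if (runsWithModel.length === 0 || runsWithoutModel.length === 0) {
    throw new Error("At least one run of each kind is required");
  }
  return Math.min(...runsWithModel) - Math.min(...runsWithoutModel);
}

// Illustrative values only.
const estimate = attributedLoadTime([300, 250, 275], [50, 60, 55]);
console.log(`${estimate} ms`); // 250 - 50
```

Taking the minimum rather than the mean reduces the influence of unrelated tasks that happen to be scheduled during a run.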

I’ve tested a bunch of different models, but the numbers here correspond to the SciFiHelmet GLTF example from the Khronos GLTF Sample Models. The original textures are PNGs and to produce the other variants I’ve used `gltf-transform` with the default texture compression settings, bundling the results in a single GLB file.

SciFiHelmet contains a single material with four 2048×2048 textures for albedo, normal, roughness-metalness (RM), and occlusion. When using Spark, the albedo, normal, and RM maps are encoded using a 16-byte-per-block format, and the occlusion map using an 8-byte-per-block format. Format selection is done automatically based on the channel usage and the formats available on the device. It is possible to target lower-quality (8 bytes per block) formats, but I’m not doing that in this test.
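As a sanity check, the VRAM figures for the uncompressed and Spark rows in the table below can be reproduced with a few lines of arithmetic. The sketch assumes 2048×2048 textures (the size at which the numbers work out), RGBA8 storage for uncompressed images, 4×4 texel blocks, a full mip chain, and sizes reported in MiB. The helper names are made up.

```javascript
// Total bytes of a full mip chain, given a function that sizes one level.
function mipChainBytes(width, height, levelBytes) {
  let total = 0;
  let w = width;
  let h = height;
  for (;;) {
    total += levelBytes(w, h);
    if (w === 1 && h === 1) break;
    w = Math.max(1, w >> 1);
    h = Math.max(1, h >> 1);
  }
  return total;
}

// Uncompressed RGBA8: 4 bytes per texel.
const rgba8 = (w, h) => w * h * 4;
// Block-compressed formats: one block per 4x4 texels, rounded up.
const blockFormat = (bytesPerBlock) => (w, h) =>
  Math.ceil(w / 4) * Math.ceil(h / 4) * bytesPerBlock;

const MiB = 1024 * 1024;
const size = 2048;

// Four uncompressed textures.
const uncompressed = 4 * mipChainBytes(size, size, rgba8);
// Three textures at 16 bytes per block plus one at 8 bytes per block.
const spark =
  3 * mipChainBytes(size, size, blockFormat(16)) +
  mipChainBytes(size, size, blockFormat(8));

console.log((uncompressed / MiB).toFixed(2)); // 85.33
console.log((spark / MiB).toFixed(2));        // 18.67
```

Both values agree with the table to within rounding.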

I gathered these results on a MacBook Pro M4:

SciFiHelmet| Time (ms)| Texture Size (MB)| VRAM (MB)
---|---|---|---
PNG| 121| 26.64| 85.33
PNG+Spark| 101| 26.64| 18.66
JPEG| 118| 26.69| 85.33
JPEG+Spark| 109| 26.69| 18.66
WebP| 68| 1.44| 85.33
WebP+Spark| 55| 1.44| 18.66
AVIF| 60| 0.92| 85.33
AVIF+Spark| 53| 0.92| 18.66
Basis UASTC| 170| 12.96| 22.36
Basis ETC1S| 113| 1.91| 11.2

As noted before, on this machine the Spark texture loader appears to be faster than the default texture loader.

I also ran this test on some mobile devices, with the following results (all timings in milliseconds):

SciFiHelmet| Galaxy S23| Pixel 8| Pixel 7
---|---|---|---
AVIF| 673| 690| 688
AVIF+Spark| 736| 653| 811
Basis UASTC| 1607| 2920| crash!
Basis ETC1S| 1120| 1120| 1077

I was curious to see how the Spark texture loaders compared to the KTX loader using textures encoded with Basis. Basis uses an intermediate representation that can be transcoded into any GPU texture format, but the transcoding runs on the CPU. While it’s much simpler than a full encoder, it appears to add a very significant overhead. Another issue is that Basis textures don’t compress nearly as well, and the cost of loading them from disk is much higher than I anticipated.

Here’s the cost of the Basis transcoder on some devices using 4 worker threads with nearly 100% utilization:

| MacBook Pro M4| Galaxy S23| Pixel 8
---|---|---|---
Basis UASTC| 123 ms| 358 ms| 325 ms
Basis ETC1S| 83 ms| 170 ms| 175 ms

## Other Performance Observations

It’s fairly common to use a single GLB file that embeds geometry and textures in a single file. While this is convenient for distribution and it may be appealing to reduce the number of connections, there’s one major disadvantage: A GLB file needs to be downloaded completely before transcoding can start.

I think it may be beneficial to use GLTF/GLB files that reference external texture assets. Loading these requires additional connections and an indirection that may add latency, but as soon as the first image file is received we can start processing it in parallel with the remaining downloads. Maybe this will be the subject of an upcoming benchmark.
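The overlap between downloads and processing can be expressed with plain promises. The sketch below is only an illustration of the idea: `loadTexturesPipelined` is a hypothetical name, and `download` and `encode` are caller-supplied async functions standing in for the network fetch and the texture encoding step.

```javascript
// Start every download immediately and encode each texture as soon as its own
// download completes, without waiting for the others.
async function loadTexturesPipelined(urls, download, encode) {
  return Promise.all(
    urls.map(async (url) => {
      const data = await download(url); // all downloads are in flight at once
      return encode(data);              // runs as soon as this one arrives
    })
  );
}
```

The results come back in the same order as `urls`, regardless of which download finishes first. With a single GLB file there is nothing to overlap, since no texture is available until the whole file has arrived.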

Compression ratios make a huge difference when downloading the files over the network, but I did not expect the loading times to make as big of a difference in my tests, because I’m running them locally and the assets are always cached on disk by the browser. I thought the reduced asset sizes would mainly have a secondary effect. With higher compression ratios, you can hold more assets in a fixed size cache, making cache hits more likely.

However, the impact of asset sizes is much more direct. Disk loading times on mobile appear to be much longer than I expected, or perhaps the browser is adding some other overheads I’m not aware of.

| GLTF Size| MacBook Pro M4| Galaxy S23| Pixel 8
---|---|---|---|---
SciFiHelmet-avif.glb| 4.4 MB| 26.5 ms| 191 ms| 192 ms
SciFiHelmet-uastc.glb| 16 MB| 41 ms| 795 ms| 629 ms

## Feedback and Acknowledgments

If you’d like to try this out, the spark.js GitHub repository now includes two three.js examples. They should be easy to run, and I’d really appreciate your feedback.

Getting this up and running so quickly wouldn’t have been possible without the work from Don McCurdy, the patience from my college friend Tulo, and the incredible work of all the volunteers advancing web frameworks and standards. In recognition of this, I’ll be donating 10% of the spark.js sales to developers working on adjacent projects that push 3D graphics forward on the Web.

www.ludicon.com

September 9, 2025 at 7:18 AM

UVPackmaster 3 looks very good!

https://bird.makeup/users/jpowersartist/statuses/1963630773333893470

bird.makeup - Tweet

My OCD approves of UVPackmaster3. I'm old enough to remember when packing UVs got you about 30-50% of the way there. It was all by hand after that.

bird.makeup

September 7, 2025 at 7:17 AM

My daughter wanted an epic and I think I delivered.

September 2, 2025 at 8:06 PM

The Gamecraft podcast is great. Highly recommend. https://gamecraftpod.com/

Gamecraft

Gamecraft is a limited series about the modern history of the video game business.

gamecraftpod.com

August 24, 2025 at 5:37 AM

Dragon Peak was awesome. I could not summit because thunderstorms started rolling in. I was 300 feet away from the summit, but I’m glad I turned around since it started hailing and raining hard just as I was exiting the technical section. Unfortunately […]

[Original post on mastodon.gamedev.place]

August 24, 2025 at 12:12 AM

Just dropped off my son at the dorm for his first year of college at CSULB. It’s such a strange experience to part ways so young. I did not leave my parents’ home until I got my degree and got a job abroad.

To process my feelings I’m taking a detour through the Eastern Sierra on my way back home […]

Original post on mastodon.gamedev.place

mastodon.gamedev.place

August 23, 2025 at 3:22 AM

Reposted by Ignacio Castaño🍉

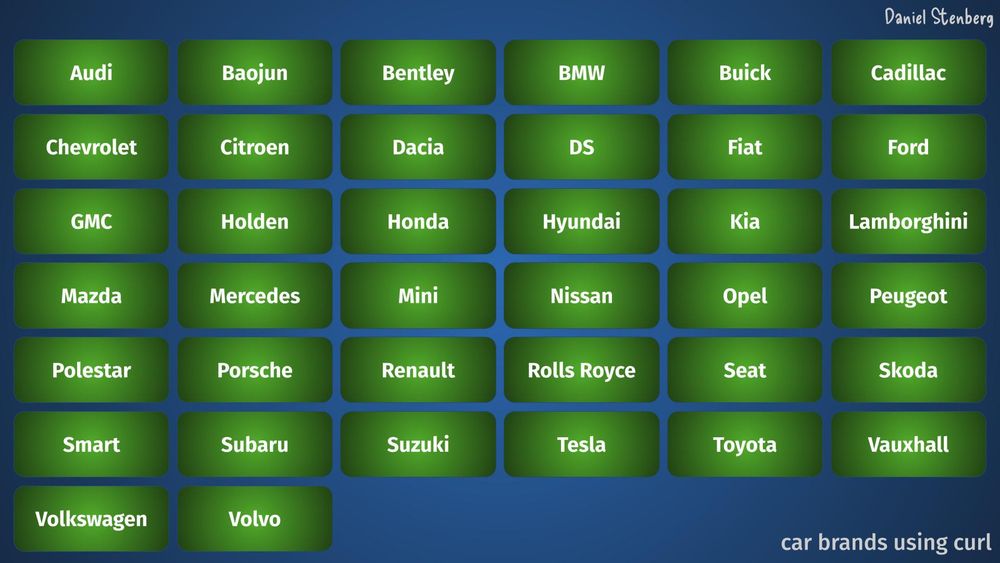

An Open Source sustainability story in two slides. (for a coming talk of mine)

Slide 1: car brands using #curl

Slide 2: car brands sponsoring or paying for #curl support

August 14, 2025 at 6:35 AM

Putting together an update and releasing a new product at the same time was an insane amount of work, and as a result neither of them was as polished as I would have liked.

I don't know if it would have made more sense to release them independently, but at least I can now hide in a hole and get […]

Original post on mastodon.gamedev.place

mastodon.gamedev.place

August 6, 2025 at 11:17 PM

Finally, I'm thrilled to welcome Infinite Flight and Netflix as new Spark licensees, and also deeply grateful to HypeHype for their continued support.

August 6, 2025 at 7:52 PM

Spark 1.3 also brings new codecs, quality improvements, and even more performance optimizations. For more details, see our blog post:

https://www.ludicon.com/castano/blog/2025/08/spark-1-3-and-spark-js/

Spark 1.3 and spark.js

I’m excited to announce the release of _Spark_ 1.3 and the launch of _spark.js_, our new JavaScript API for real-time GPU texture compression on the web.

The highlight of this release is full WebGPU support. _Spark_’s real-time codecs are now available as WGSL compute shaders that run natively in modern browsers. To make it easy to use _Spark_ on the web, we’re also introducing _spark.js_, a separate JavaScript package that wraps a subset of the codecs with a simple API. _spark.js_ is free for non-commercial use and available under an affordable license for commercial projects.

**What’s new in Spark 1.3:**

* **New RGBA codecs**: Introduced a new BC7 RGBA codec and improved the quality of our ASTC RGBA codec.

* **Performance and quality improvements** across the board, in particular a faster EAC codec, a faster ASTC RGBA codec and improvements to BC1 quality.

* **Expanded platform support** including: WebGPU, OpenGL 4.1 (macOS), GLES 3.0 (iOS) and broader AndroidTV support.

This release also includes new examples, bug fixes, and continued improvements based on user feedback.

I’m thrilled to welcome Infinite Flight and Netflix as new _Spark_ licensees, and deeply grateful to HypeHype for their continued support.

## WebGPU

HypeHype has already been using _Spark_ in their WebGPU client ahead of this release, but now it’s fully supported and tested across all browsers using both `f32` and `f16` floating point variants.

The codecs work reliably across all WebGPU-enabled browsers and devices we’ve tested.
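For readers unfamiliar with the two floating point variants: half-precision arithmetic in WGSL depends on the optional `shader-f16` WebGPU feature, so an application has to check for it before requesting a device. The sketch below shows one way to do that with the standard WebGPU API. The function names are hypothetical, and how _Spark_ itself selects between its `f32` and `f16` shaders is not described here.

```javascript
// Pick a shader variant from an adapter's feature set (any object with has()).
function pickFloatVariant(features) {
  return features.has("shader-f16") ? "f16" : "f32";
}

// Browser-only sketch: request a device with f16 enabled when available.
async function requestDeviceWithBestFloatSupport() {
  if (typeof navigator === "undefined" || !navigator.gpu) {
    throw new Error("WebGPU is not available");
  }
  const adapter = await navigator.gpu.requestAdapter();
  if (!adapter) throw new Error("No suitable GPU adapter found");
  const variant = pickFloatVariant(adapter.features);
  const device = await adapter.requestDevice({
    requiredFeatures: variant === "f16" ? ["shader-f16"] : [],
  });
  return { device, variant };
}
```

Shaders compiled for the `f16` variant also need an `enable f16;` directive at the top of the WGSL source.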

## _spark.js_

In addition to WebGPU support, we’re introducing _spark.js_, a standalone JavaScript package that exposes a subset of the Spark codecs through a simple and lightweight API:

texture = await spark.encodeTexture("image.avif", {mips: true, srgb: true})

This makes it easy to load standard image formats in the browser and transcode them to native GPU formats optimized for rendering.

Modern image formats like AVIF and JXL provide excellent quality across a wide range of compression ratios, making them ideal for storage and transmission. In contrast, native GPU formats offer compact in-memory representations but compress poorly. While rate-distortion optimization (RDO) can help, the quality of RDO-based encoders degrades significantly at lower bitrates.

The chart below compares the SSIMULACRA2 score of multiple encoders under various bit rates on an image that is representative of typical textures:

Scores above 80 are considered very high quality (distortion not noticeable by an average observer under normal viewing conditions). While RDO codecs perform well at high bitrates, their quality drops sharply as compression increases. In contrast, AVIF images transcoded with _Spark_ maintain much higher quality, even at aggressive compression levels.

You can find more details, examples, and licensing information on the spark.js website and the GitHub repository. To evaluate the codecs directly in a WebGPU-enabled browser, try the spark.js viewer.

I am excited to bring the _Spark_ codecs to the web and can’t wait to see what you build with them!

## BC7 RGBA Codec

The lack of support for BC7 with alpha was an outstanding gap in our codec lineup. This release closes that gap with a new BC7 RGBA codec and also improves the quality of all other RGBA formats.

The mode selection heuristic in BC7 RGBA is an improvement over the heuristic I employed in the ASTC codec. However, it’s tailored to the modes available in the BC7 format and is somewhat cheaper to evaluate. On opaque textures, it produces the same results as the BC7 RGB codec, but it’s also able to handle varying alpha values.

In addition, the codec is optimized for images where alpha is used for transparency. By default, alpha is treated as a separate independent channel. However, when alpha is known to be used for transparency, we can weight the RGB error by the alpha channel, giving more importance to opaque texels than transparent ones.

As far as I know, this alpha-weighted optimization is only supported by the squish codec I co-wrote with Simon Brown and the NVTT and ICBC codecs derived from it, but is absent from most other offline codecs. It comes with some tradeoffs: In rare cases, it can introduce interpolation artifacts such as bleeding due to bilinear filtering. In practice, this is often not an issue, and the results compare favorably with offline codecs that do not account for transparency usage.
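To make the idea of alpha weighting concrete, here is a minimal sketch of a block error metric with and without the weighting. It assumes a plain sum of squared differences and uses the original alpha as the weight; the actual metric and weighting used by the _Spark_ codecs may differ, and `blockError` is a hypothetical name.

```javascript
// Sum of squared errors between an original block and a candidate decoding.
// Both arguments are flat arrays of RGBA values in [0, 1], four per texel.
// When alphaWeighted is true, the RGB error of each texel is scaled by the
// original alpha, so fully transparent texels contribute no color error.
// This is an illustrative metric, not Spark's actual implementation.
function blockError(original, decoded, alphaWeighted) {
  let error = 0;
  for (let i = 0; i < original.length; i += 4) {
    const dr = original[i] - decoded[i];
    const dg = original[i + 1] - decoded[i + 1];
    const db = original[i + 2] - decoded[i + 2];
    const da = original[i + 3] - decoded[i + 3];
    const weight = alphaWeighted ? original[i + 3] : 1;
    error += weight * (dr * dr + dg * dg + db * db) + da * da;
  }
  return error;
}
```

With this metric an encoder is free to spend its precision on the visible texels, which is also why colors under transparent texels can drift and occasionally bleed when filtered.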

The quality of our alpha-weighted BC7 codec is competitive with the best offline solutions (SSIMULACRA2 scores, higher is better):

Some of these changes have also translated into improvements in the ASTC RGBA codec, where in some cases the quality has improved noticeably:

## Faster EAC Compression

Our previous _Spark_ 1.2 release saw some impressive performance improvements in the EAC Q0 codecs. This release brings even more performance gains and extends these improvements to the remaining quality levels.

The focus this time was on low-end Adreno devices. Some of the Adreno 5xx devices would time out when compressing large normal map textures at the highest quality level. This could be solved by dispatching the codec kernels multiple times, each compressing a portion of the image, but the issue made me take a closer look at the performance on these devices.
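For reference, splitting the work into several dispatches could be planned along these lines. This is only a sketch: `planDispatches` and its `maxBlocksPerDispatch` budget are hypothetical, and it assumes the image is divided into horizontal bands of 4x4 blocks.

```javascript
// Split a texture into horizontal bands of 4x4 blocks so that each compute
// dispatch processes at most roughly maxBlocksPerDispatch blocks.
// maxBlocksPerDispatch is a hypothetical tuning parameter for this sketch.
function planDispatches(width, height, maxBlocksPerDispatch) {
  const blocksX = Math.ceil(width / 4);
  const blocksY = Math.ceil(height / 4);
  // Always process at least one full row of blocks per dispatch.
  const rowsPerDispatch = Math.max(1, Math.floor(maxBlocksPerDispatch / blocksX));
  const bands = [];
  for (let y = 0; y < blocksY; y += rowsPerDispatch) {
    bands.push({
      firstBlockRow: y,
      blockRows: Math.min(rowsPerDispatch, blocksY - y),
    });
  }
  return { blocksX, bands };
}
```

Each band would then be submitted as its own dispatch, with the first block row passed to the kernel as an offset.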

An EAC_RG block is composed of two identical EAC_R sub-blocks that can be encoded independently. Most of our codecs are limited by bandwidth, and reducing register pressure is critical to maintain good occupancy and maximize throughput. In this case, however, most compilers chose to pre-load all the texels and interleave the instructions that encode each of the sub-blocks, possibly in a misguided effort to improve ILP. This doubled the number of required registers and often resulted in spilling. To prevent that from happening, I place the code inside loops that run only once but that the compiler is unable to eliminate. This prevents code motion and ensures the sub-blocks are encoded sequentially.

Another problem was that the EAC codec relied on lookup tables to compute the optimal index assignments. This is much faster than the typical brute force approach, but these loads are divergent and have a severe performance impact on some devices. To avoid them, we pack these tables into just a couple of literals and decode the original values with some cheap math, which avoids the divergent loads.
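The general idea of replacing a small table with a packed literal can be sketched as follows. The table contents here are made up for illustration and are not the EAC tables; the sketch assumes eight 4-bit entries that fit in one 32-bit word.

```javascript
// Hypothetical table of eight 4-bit values (not the actual EAC tables).
const TABLE = [0, 1, 3, 6, 10, 12, 14, 15];

// Pack up to eight 4-bit values into one unsigned 32-bit integer,
// with entry i stored in bits [4*i, 4*i + 3].
function packNibbles(values) {
  let packed = 0;
  values.forEach((value, i) => {
    packed |= (value & 0xF) << (4 * i);
  });
  return packed >>> 0; // reinterpret as unsigned
}

// In a shader this would be a compile-time literal rather than a computed value.
const PACKED = packNibbles(TABLE);

// Decode entry i with a shift and a mask instead of a memory load.
function lookupPacked(packed, i) {
  return (packed >>> (4 * i)) & 0xF;
}
```

The shift and mask depend only on the index, so every lane executes the same instructions regardless of which entry it needs.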

Getting these changes to not regress on any device was particularly difficult, as improvements on Adreno would often cause regressions on Mali and vice versa. The throughput of integer operations on Mali is much lower than that of float operations, and in some cases that resulted in lower performance than the lookup tables. To avoid that, I had to carefully express the integer operations using int16 vectorization and work around compiler bugs that resulted in sub-optimal code.

In the end I found a solution that works great across almost all Mali devices:

while also providing a big performance boost on Adrenos:

This not only improved the throughput, but also allowed us to encode large textures with all the codecs on the lowest end devices without running into compute time-outs. If you wonder why some of the Adreno 505 bars are missing, that’s why. Previously, the Q1 and Q2 codecs would run into resource limits and timeouts on that device.

## ASTC RGBA Optimizations

The recent quality improvements in the ASTC RGBA codec introduced minor performance regressions, particularly noticeable on low-end devices. To address this, I’ve done a new round of optimizations targeted at mobile GPUs.

Endpoint encoding in the ASTC RGBA kernel is more expensive than in other codecs due to the need to handle endpoints in different quantization modes. The encoder relied on lookup tables to facilitate the encoding, but these have been eliminated in favor of vectorized int16 operations, the bread and butter of Android performance optimizations.

This change provides a moderate speedup on the most sensitive lower-end devices without sacrificing quality.

## Quality Improvements

I’ve made several small quality improvements across the board, many of them informed by the SSIMULACRA2 perceptual error metric. One notable improvement is in the BC1 codec.

An oversight in my BC1 encoder was the lack of special handling for single color blocks. I was reluctant to add this code path due to concerns about introducing divergence and the need for a lookup table. At the time I thought the quality gains were too minor to justify the overhead. While the RMSE improvements are indeed small, the perceptual gains are very significant and make this worthwhile at higher quality settings.

The chart below shows the SSIMULACRA2 scores of the new codec compared to the previous version. Images containing smooth, uniform regions benefit significantly from this optimization.

Instead of adopting the standard tables used in other encoders, I’ve generated new tables that take into account the way the BC1 format is actually decoded by the hardware and result in slightly higher quality.
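For readers unfamiliar with single color tables, the sketch below builds one for a 5-bit channel by brute force. It assumes ideal interpolation, `(2 * e0 + e1) / 3`, and bit-replicated endpoint expansion; it does not model any particular hardware decoder and is not the table generation used in _Spark_.

```javascript
// Expand a 5-bit channel value to 8 bits by bit replication.
function expand5(c) {
  return (c << 3) | (c >> 2);
}

// For every 8-bit target value, find the pair of 5-bit endpoints whose
// 1/3 interpolant is closest to it. Assumes ideal interpolation
// (2 * e0 + e1) / 3; real hardware decoders round differently, which is
// the kind of detail a production table would need to account for.
function buildSingleColorTable5() {
  const table = [];
  for (let target = 0; target < 256; target++) {
    let best = { e0: 0, e1: 0, error: Infinity };
    for (let e0 = 0; e0 < 32; e0++) {
      for (let e1 = 0; e1 < 32; e1++) {
        const interpolated = (2 * expand5(e0) + expand5(e1)) / 3;
        const error = Math.abs(interpolated - target);
        if (error < best.error) best = { e0, e1, error };
      }
    }
    table.push(best);
  }
  return table;
}
```

A single color block is then encoded by looking up the endpoint pair for each channel and pointing every index at the interpolated color, which reaches values that a single quantized endpoint cannot represent.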

_Spark_ 1.3 also has a new experimental mode that produces higher quality results on non-photographic images and illustrations. This currently has a small performance impact, but can produce much higher quality results in some cases.

## Expanded Platform Support

This release extends support to earlier OpenGL versions that lack compute shaders and even the copy image extension. This is possible using pixel buffer objects.

Additionally, some current Amazon FireTV devices come with PowerVR drivers that have not seen an update in the last 7 years and have numerous bugs. However, I was able to find workarounds and get many of the codecs running on them.

www.ludicon.com

August 6, 2025 at 7:50 PM

Spark 1.3 also brings new codecs, quality improvements, and even more performance optimizations. For more details, see our blog post:

https://www.ludicon.com/castano/blog/2025/08/spark-1-3-and-spark-js/
