Robotics, Artificial Intelligence, Humanoids, Tactile Sensing.

https://sferrazza.cc

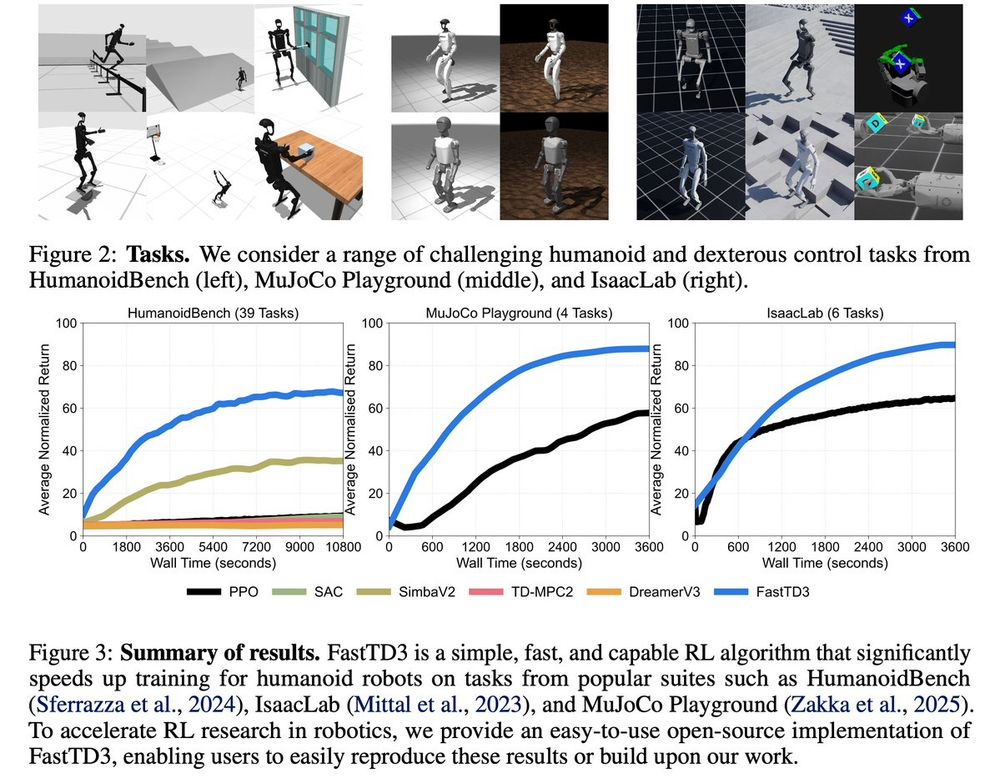

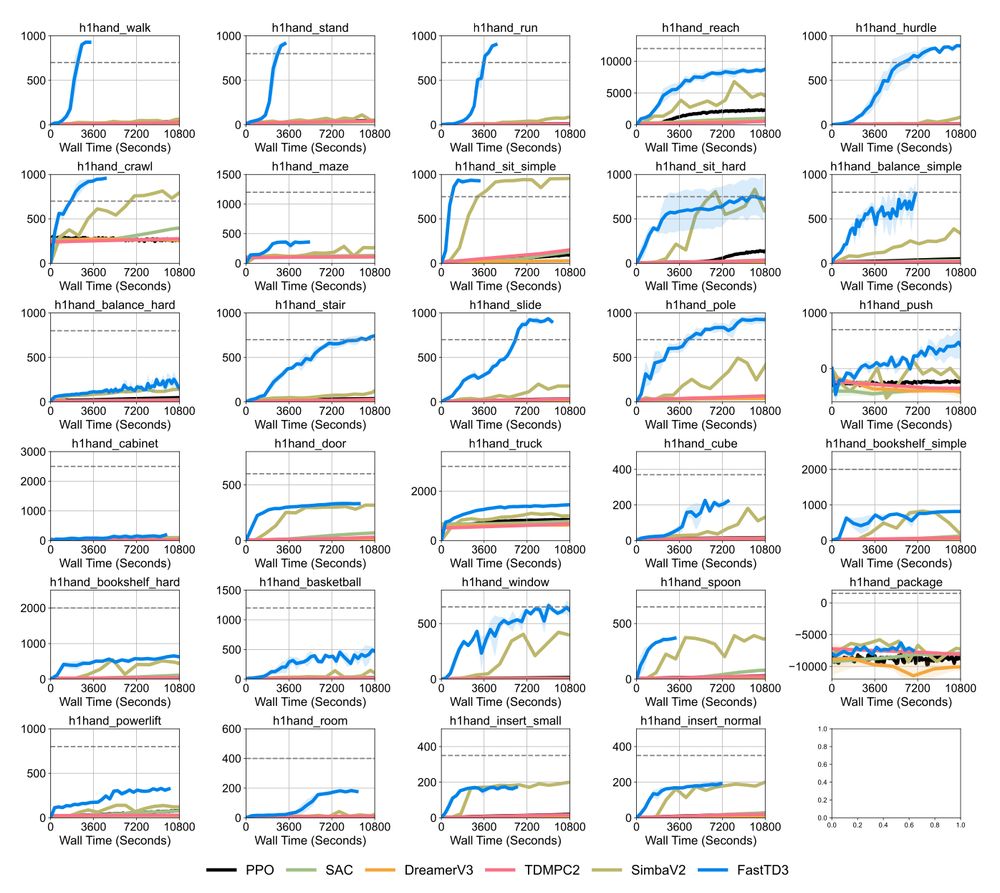

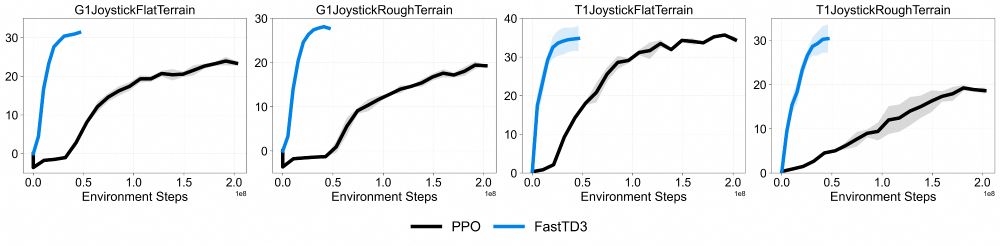

younggyo.me/fast_td3

Excited for my first in-person RoboSoft after the 2020 edition went virtual mid-pandemic.

Reach out if you'd like to chat!

Join us to discuss this at our exciting workshop at @icmlconf.bsky.social 2025: EXAIT!

exait-workshop.github.io

#ICML2025

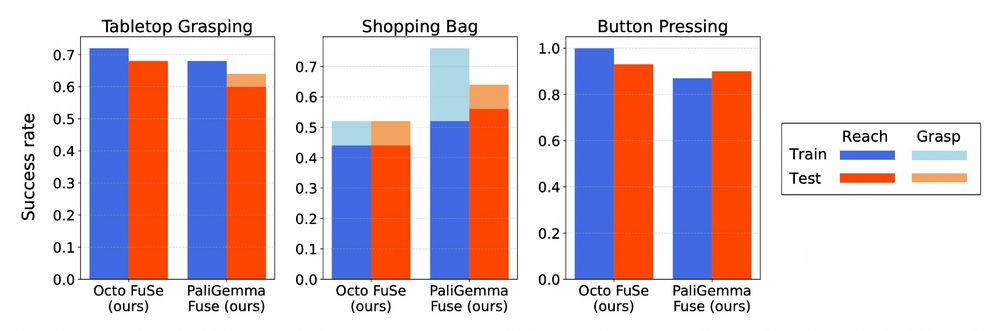

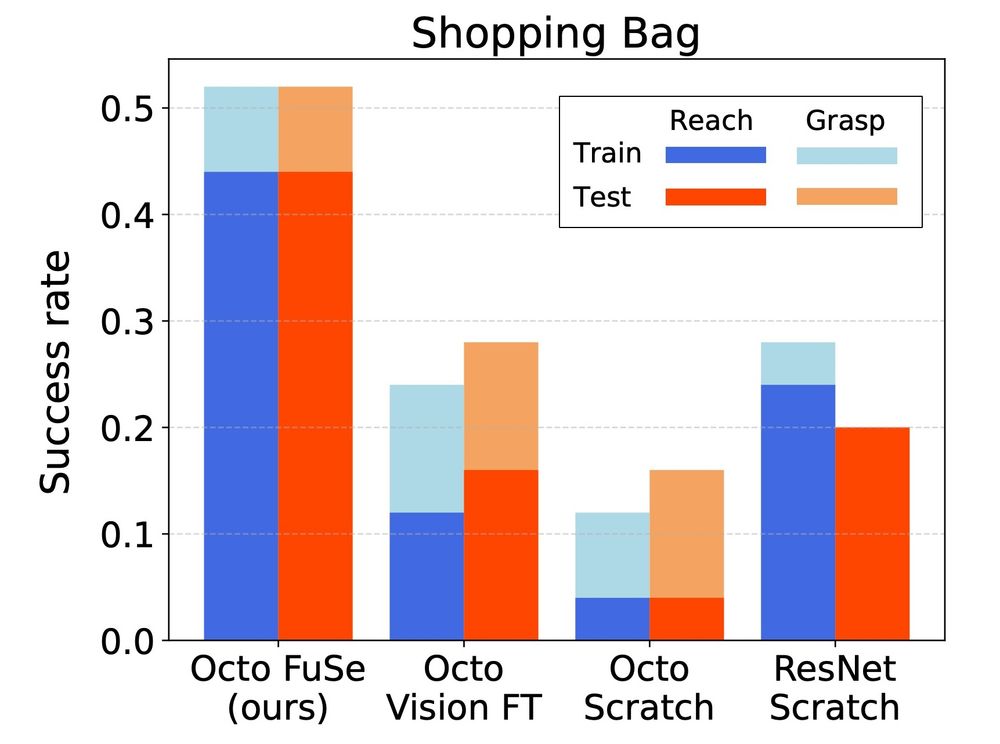

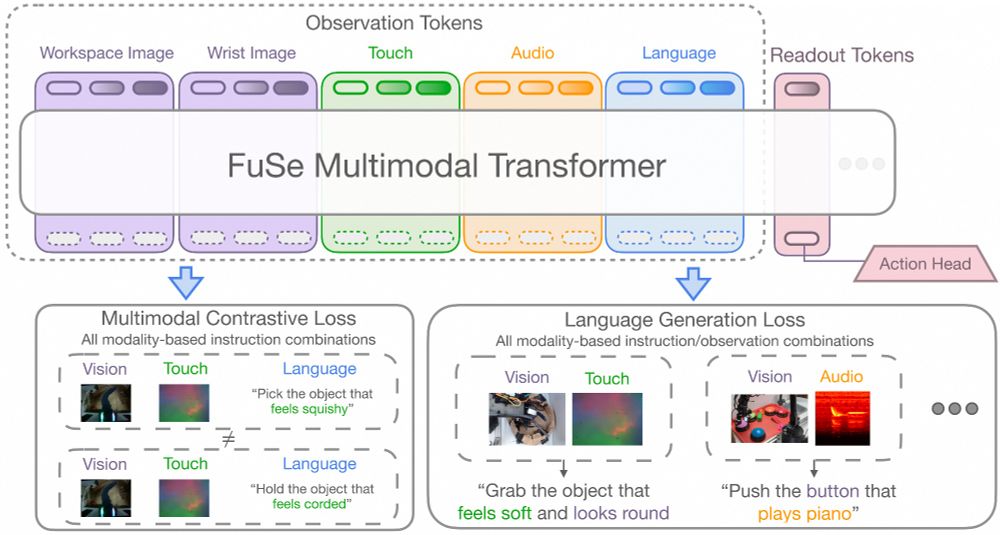

Introducing FuSe: a recipe for finetuning large vision-language-action (VLA) models with heterogeneous sensory data, such as vision, touch, sound, and more.

Details in the thread 👇

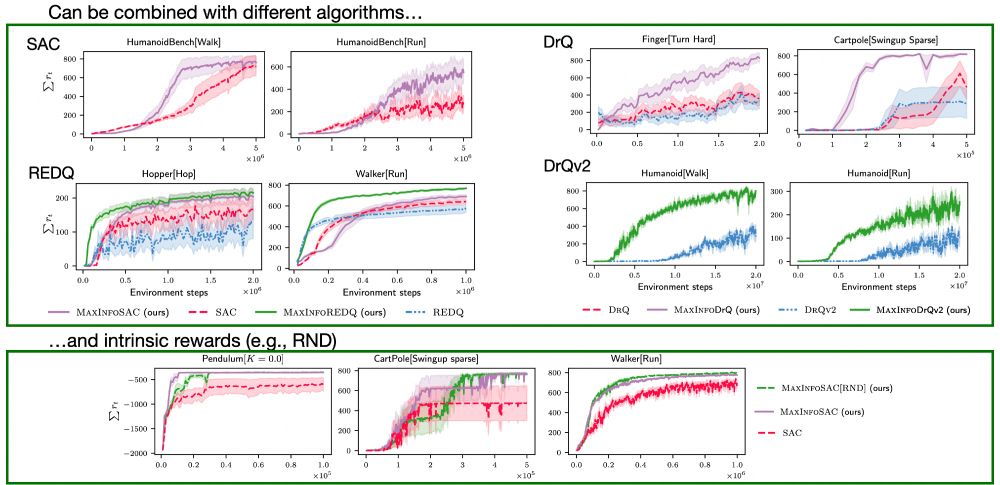

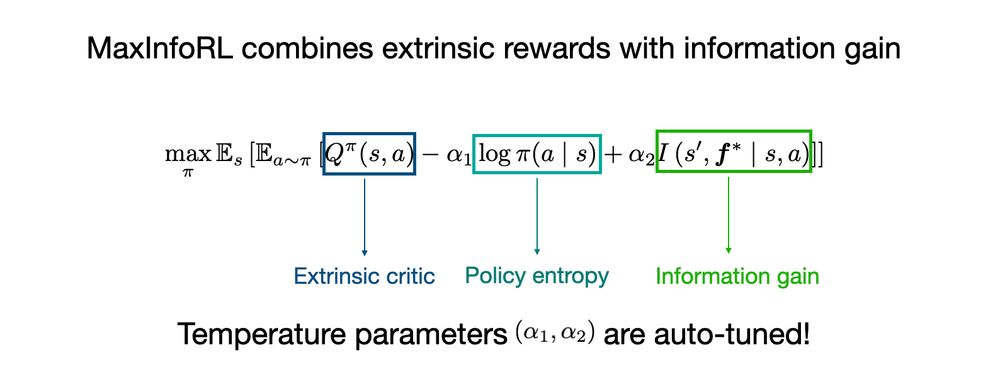

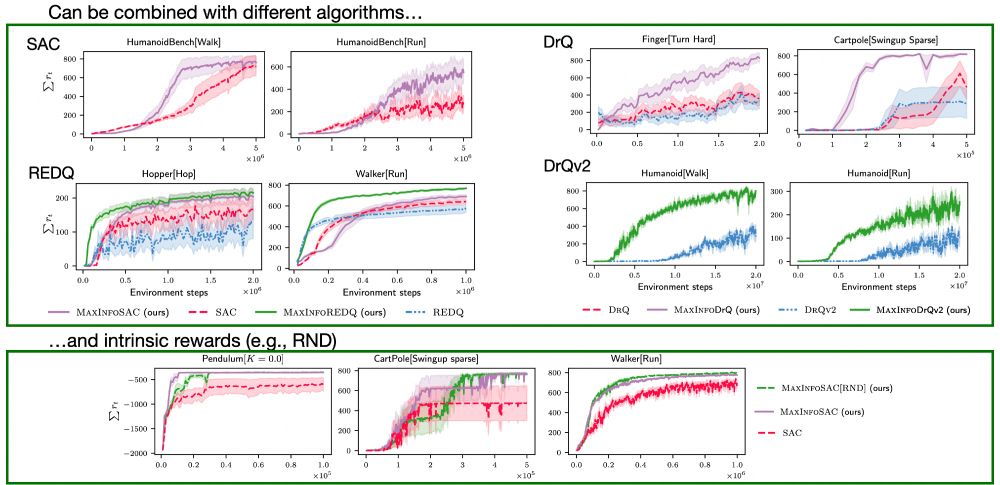

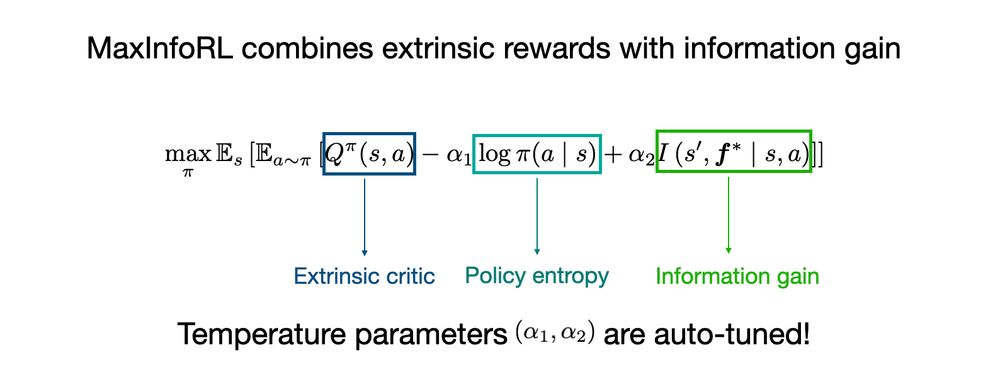

Excited to announce MaxInfoRL, a class of model-free RL algorithms that solves complex continuous control tasks (including vision-based!) by steering exploration towards informative transitions.

Details in the thread 👇
