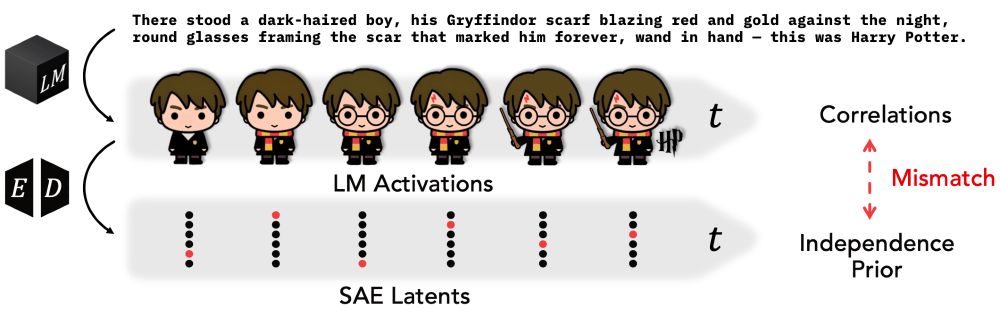

Paper arxiv.org/abs/2511.01836

Demo Code + Pretrained TFAs colab.research.google.com/github/eslub...

Demo Interface on Neuronpedia www.neuronpedia.org/gemma-2-2b/1...

www.anthropic.com/research/aud...

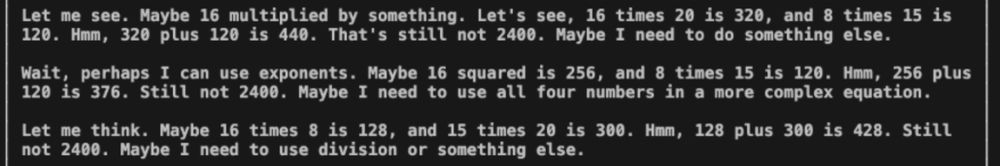

Yes, but it’s fragile! The bf16 version of the model gives objective answers on CCP-sensitive topics, but in the fp8-quantized version the censorship returns.

Browse existing projects: github.com/ArborProject...

github.com/ARBORproject...
