huggingface.co/collections/...

huggingface.co/collections/...

We are also releasing our code as part of `tokenkit`, a new library implementing advanced tokenization transfer methods. More to follow on that👀

Paper: arxiv.org/abs/2503.20083

Code: github.com/bminixhofer/...

w/ Ivan Vulić and @edoardo-ponti.bsky.social

We are also releasing our code as part of `tokenkit`, a new library implementing advanced tokenization transfer methods. More to follow on that👀

Paper: arxiv.org/abs/2503.20083

Code: github.com/bminixhofer/...

w/ Ivan Vulić and @edoardo-ponti.bsky.social

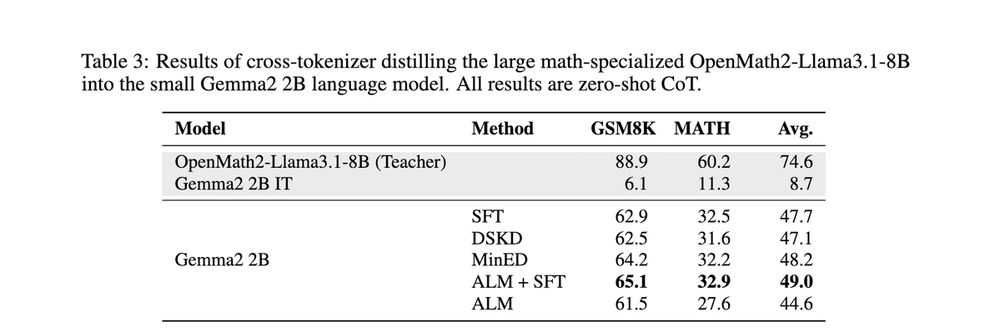

We test this by distilling a large maths-specialized Llama into a small Gemma model.🔢

We test this by distilling a large maths-specialized Llama into a small Gemma model.🔢

This would not be possible if they had different tokenizers.

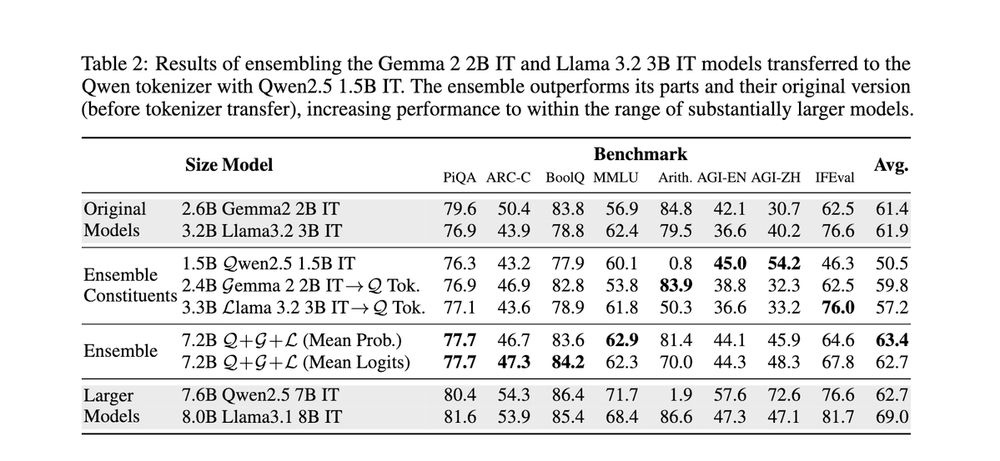

We try ensembling Gemma, Llama and Qwen. They perform better together than separately!🤝

This would not be possible if they had different tokenizers.

We try ensembling Gemma, Llama and Qwen. They perform better together than separately!🤝

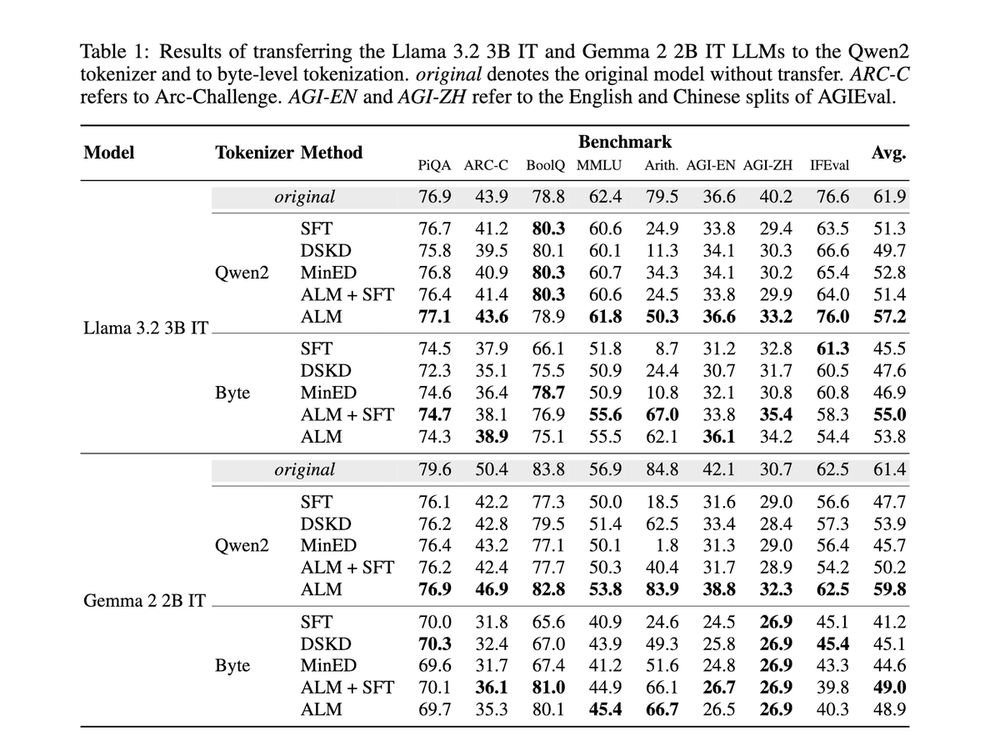

1️⃣Tokenizer transfer: the teacher is the model with its original tokenizer; the student is the same model with a new tokenizer.

Here, ALM even lets us distill subword models to a byte-level tokenizer😮

1️⃣Tokenizer transfer: the teacher is the model with its original tokenizer; the student is the same model with a new tokenizer.

Here, ALM even lets us distill subword models to a byte-level tokenizer😮

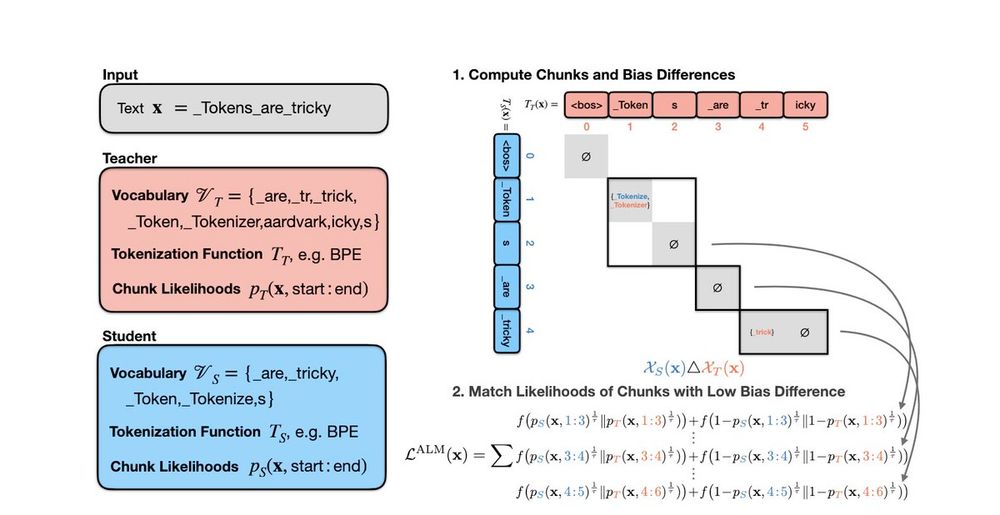

We thus develop a method to find chunks with low tokenization bias differences (making them *approximately comparable*), then learn to match the likelihoods of those✅

We thus develop a method to find chunks with low tokenization bias differences (making them *approximately comparable*), then learn to match the likelihoods of those✅

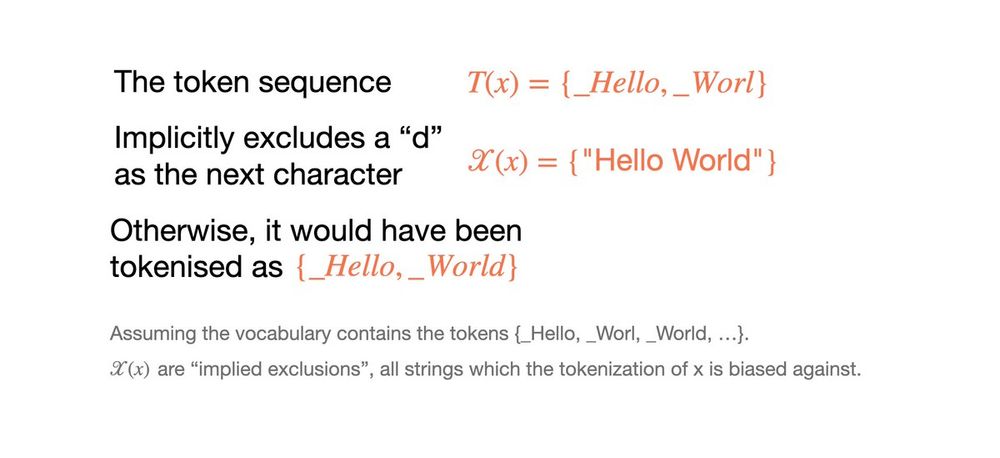

Due to tokenization bias, a sequence of subword tokens can leak information about the future contents of the text they encode.

Due to tokenization bias, a sequence of subword tokens can leak information about the future contents of the text they encode.

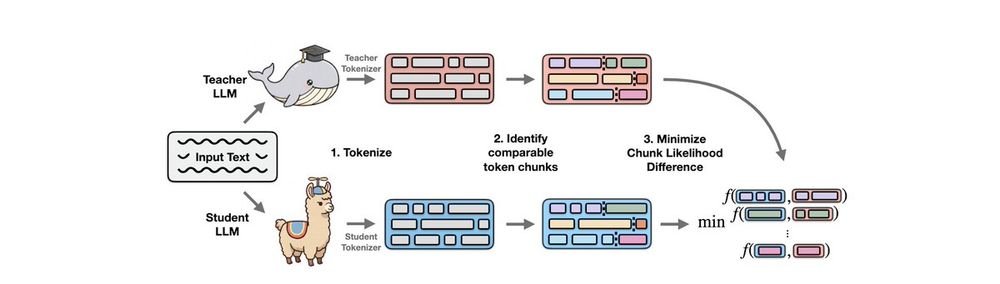

We lift this restriction by first identifying comparable chunks of tokens in a sequence (surprisingly, this is not so easy!), then minimizing the difference between their likelihoods.

We lift this restriction by first identifying comparable chunks of tokens in a sequence (surprisingly, this is not so easy!), then minimizing the difference between their likelihoods.