BlackboxNLP will be held at EMNLP 2025 in Suzhou, China

blackboxnlp.github.io

"Evaluating Interpretability Methods: Challenges and Future Directions" just started! 🎉 Come to learn more about the MIB benchmark and hear the takes of @michaelwhanna.bsky.social, Michal Golovanevsky, Nicolò Brunello and Mingyang Wang!

#BlackboxNLP 2025 invites the submission of archival and non-archival papers on interpreting and explaining NLP models.

📅 Deadlines: Aug 15 (direct submissions), Sept 5 (ARR commitment)

🔗 More details: blackboxnlp.github.io/2025/call/

Have last-minute questions or need help finalizing your submission?

Join the Discord server: discord.gg/n5uwjQcxPR

We welcome submissions of existing methods, experimental POCs, or any approach addressing circuit discovery or causal variable localization 💡

Following requests from participants, we’re extending the MIB Shared Task submission deadline by one week.

🗓️ New deadline: August 8, 2025

Submit your method via the MIB leaderboard!

If you're submitting to the MIB Shared Task at #BlackboxNLP, feel free to check the task page for help preparing your report: blackboxnlp.github.io/2025/task/

If you're working on:

🧠 Circuit discovery

🔍 Feature attribution

🧪 Causal variable localization

now’s the time to polish and submit!

Join us on Discord: discord.gg/n5uwjQcxPR

Whether you're working on circuit discovery or causal variable localization, this is your chance to benchmark your method in a rigorous setup!

Consider submitting your work to the MIB Shared Task, part of this year’s #BlackboxNLP

We welcome submissions of both existing methods and new or experimental POCs!

The MIB shared task is a great opportunity to experiment:

✅ Clean setup

✅ Open baseline code

✅ Standard evaluation

Join the Discord server for ideas and discussions: discord.gg/n5uwjQcxPR

Join us to hear from two leading voices in interpretability:

🎙️ Quanshi Zhang (Shanghai Jiao Tong University)

🎙️ Verna Dankers (McGill University)

@vernadankers.bsky.social

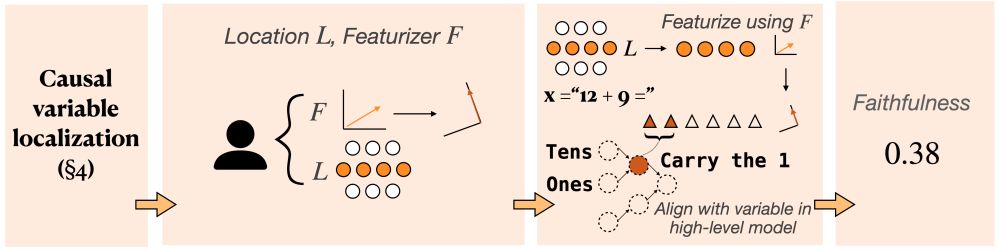

• Build contrastive input pairs differing only in the target variable.

• (If supervised) train the featurizer on these pairs.

• To evaluate: map the activations into feature space, intervene there, map them back, and check whether the model's behavior shifts as expected.
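As a rough illustration of the evaluation step, here is a minimal Python sketch of an interchange intervention in feature space. It assumes a hypothetical toy model (a 2-D hidden state that mixes two input variables a and b through a fixed rotation, with output y = 2a + b) and a hard-coded orthogonal featurizer standing in for one a supervised method would ideally learn. None of the names come from MIB or its code.

```python
import math

# Hypothetical toy "model": a 2-D hidden state that mixes input variables (a, b).
THETA = math.radians(30)
M = [[math.cos(THETA), -math.sin(THETA)],
     [math.sin(THETA), math.cos(THETA)]]  # orthogonal mixing matrix


def matvec(A, v):
    return [sum(A[i][j] * v[j] for j in range(len(v))) for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def hidden(a, b):
    """Activation at the (hypothetical) layer we intervene on."""
    return matvec(M, [a, b])


# Output head w . h, with w chosen so that the toy model computes y = 2a + b.
W = matvec(M, [2.0, 1.0])


def output(h):
    return sum(wi * hi for wi, hi in zip(W, h))


def interchange(featurizer, h_base, h_source, dims):
    """Map both activations into feature space, copy `dims` from the source
    run into the base run, then map back with the inverse featurizer."""
    f_base = matvec(featurizer, h_base)
    f_src = matvec(featurizer, h_source)
    for d in dims:
        f_base[d] = f_src[d]
    # Assumes an orthogonal featurizer, so its inverse is its transpose.
    return matvec(transpose(featurizer), f_base)


# Contrastive pair that differs only in the target variable a.
base, source = (1.0, 3.0), (4.0, 3.0)
h_base, h_src = hidden(*base), hidden(*source)
expected = 2 * source[0] + base[1]  # counterfactual behavior: 2*4 + 3

aligned = transpose(M)  # hard-coded stand-in for a learned featurizer
identity = [[1.0, 0.0], [0.0, 1.0]]  # raw neuron basis, for comparison

y_aligned = output(interchange(aligned, h_base, h_src, dims=[0]))
y_neuron = output(interchange(identity, h_base, h_src, dims=[0]))
print(f"expected {expected:.2f}, aligned {y_aligned:.2f}, neuron {y_neuron:.2f}")
```

In this toy, swapping the first feature moves the output all the way to the counterfactual value, while swapping the first raw neuron only moves it partway from the base output of 5. That gap is what a better featurizer is meant to close. Orthogonality keeps the inverse map a simple transpose; a non-orthogonal featurizer would need an explicit inverse.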

Working on featurization methods (ways to transform LM activations to better isolate causal variables)?

Submit your work to the Causal Variable Localization Track of the MIB Shared Task!

Join the Discord server: discord.gg/n5uwjQcxPR

🔍 Check out submission ideas

🔍 Brainstorm possible directions

🔍 Ask questions and get help with setup issues

Full task description: blackboxnlp.github.io/2025/task/

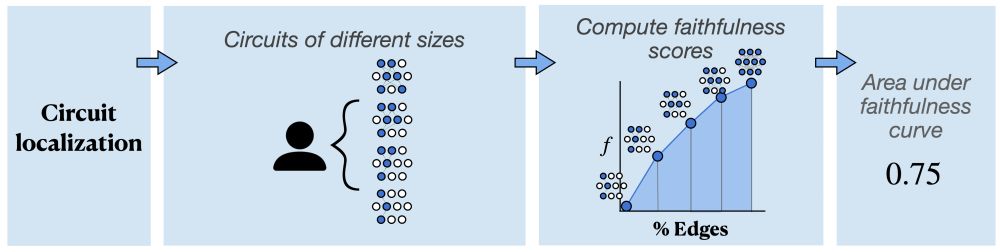

Circuit discovery methods typically:

• Score model components or edges

• Ablate all but the top-ranked ones

• Evaluate the performance of the resulting subgraph
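The three steps above can be sketched in Python on a hypothetical toy: six named edges, each with a value on a clean and a corrupted input, and an output that is simply the sum of edge values. The edge names, values, and the normalized faithfulness formula are illustrative assumptions, not MIB's graphs or metrics. Edges are scored by exact activation patching, which is affordable only because the toy is tiny.

```python
# Hypothetical linear toy: the output is the sum of per-edge values.
# Edges outside the circuit are ablated by taking their corrupted value.
CLEAN = {"emb->a0": 0.2, "a0->m0": 1.5, "emb->m0": 0.1,
         "m0->a1": 2.0, "a0->a1": -0.3, "a1->logits": 3.0}
CORRUPT = {"emb->a0": 0.2, "a0->m0": 0.1, "emb->m0": 0.1,
           "m0->a1": 0.2, "a0->a1": -0.2, "a1->logits": 0.5}


def run(kept):
    """Forward pass where every edge not in `kept` uses its corrupted value."""
    return sum(CLEAN[e] if e in kept else CORRUPT[e] for e in CLEAN)


def score_edges():
    """Step 1: score each edge by patching it alone (full minus patched output)."""
    full = set(CLEAN)
    return {e: run(full) - run(full - {e}) for e in CLEAN}


def circuit(k):
    """Step 2: keep the top-k edges by |score|; all others get ablated."""
    scores = score_edges()
    ranked = sorted(scores, key=lambda e: abs(scores[e]), reverse=True)
    return set(ranked[:k])


def faithfulness(kept):
    """Step 3: fraction of the full model's effect the subgraph recovers."""
    empty, full = run(set()), run(set(CLEAN))
    return (run(kept) - empty) / (full - empty)


for k in range(len(CLEAN) + 1):
    print(k, sorted(circuit(k)), round(faithfulness(circuit(k)), 3))
```

The 3-edge circuit scores slightly above 1 here: leaving out the negatively contributing edge a0->a1 recovers more than the full model's effect, which is one reason it can help to look at several circuit sizes rather than a single k. On real LMs, patching every edge individually is expensive, so methods often approximate the scores (for example with gradient-based attribution patching).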

Consider submitting your work to the MIB Shared Task, part of #BlackboxNLP at @emnlpmeeting.bsky.social 2025!

The goal: benchmark existing MI methods and identify promising directions for precisely and concisely recovering causal pathways in LMs.

* Circuit Localization – identify subgraphs that carry out specific computations

* Causal Variable Localization – align internal representations with known causal factors

Mechanistic Interpretability (MI) is quickly advancing, but comparing methods remains a challenge. This year at #BlackboxNLP, we're introducing a shared task to rigorously evaluate MI methods in language models 🧵

This edition will feature a new shared task on circuit localization and causal variable localization in LMs. Details here: blackboxnlp.github.io/2025/task
