Ex @MetaAI, @SonyAI, @Microsoft

Egyptian 🇪🇬

I’ll be presenting our work (Oral) on Nov 5, Special Theme session, Room A106-107 at 14:30.

Let’s talk brains 🧠, machines 🤖, and everything in between :D

Looking forward to all the amazing discussions!

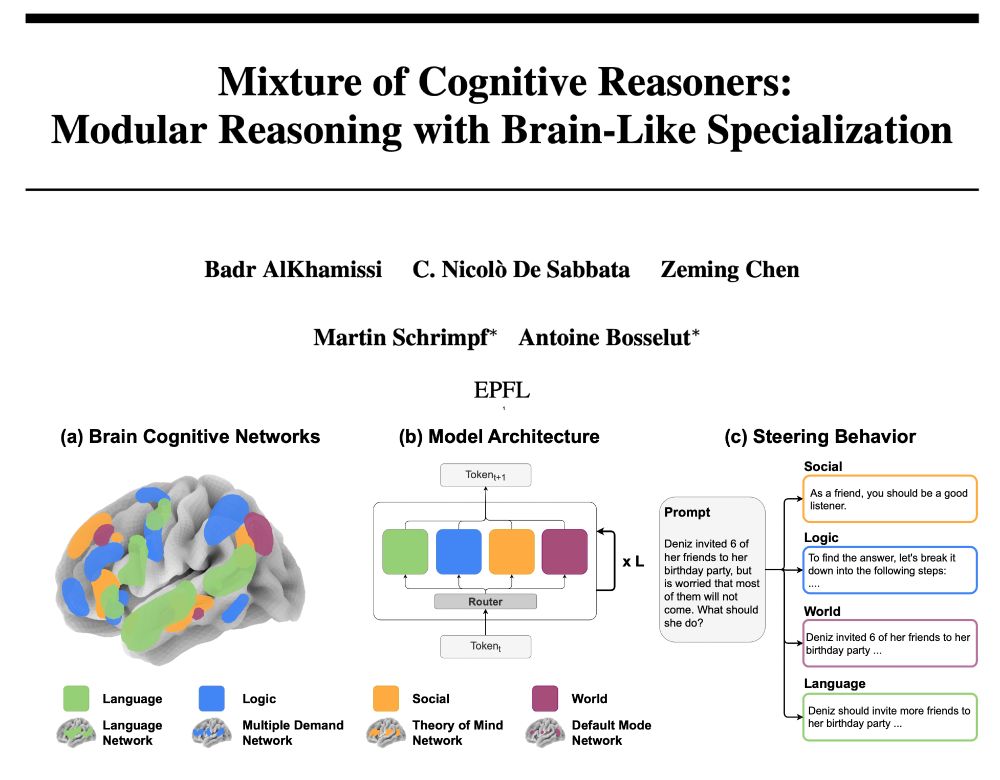

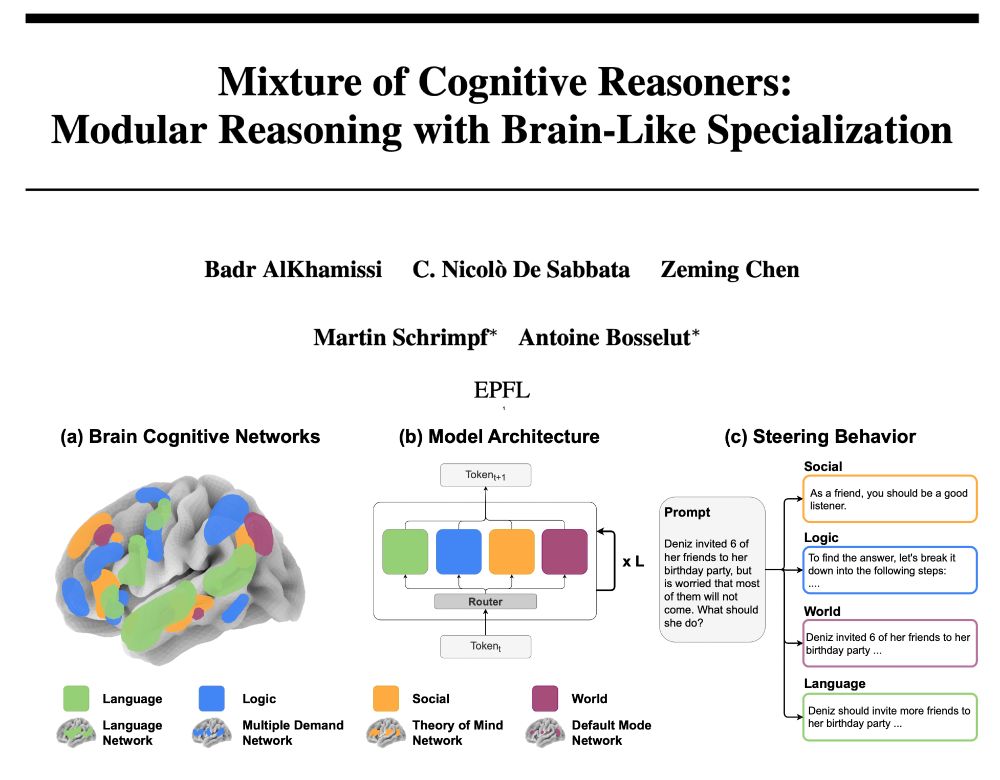

We ask: What benefits can we unlock by designing language models whose inner structure mirrors the brain’s functional specialization?

More below 🧠👇

cognitive-reasoners.epfl.ch

We show that by selectively targeting VLM units that mirror the brain’s visual word form area, models develop dyslexic-like reading impairments, while leaving other abilities intact!! 🧠🤖

Details in the 🧵👇

Looking forward to seeing many of you in Suzhou 🇨🇳

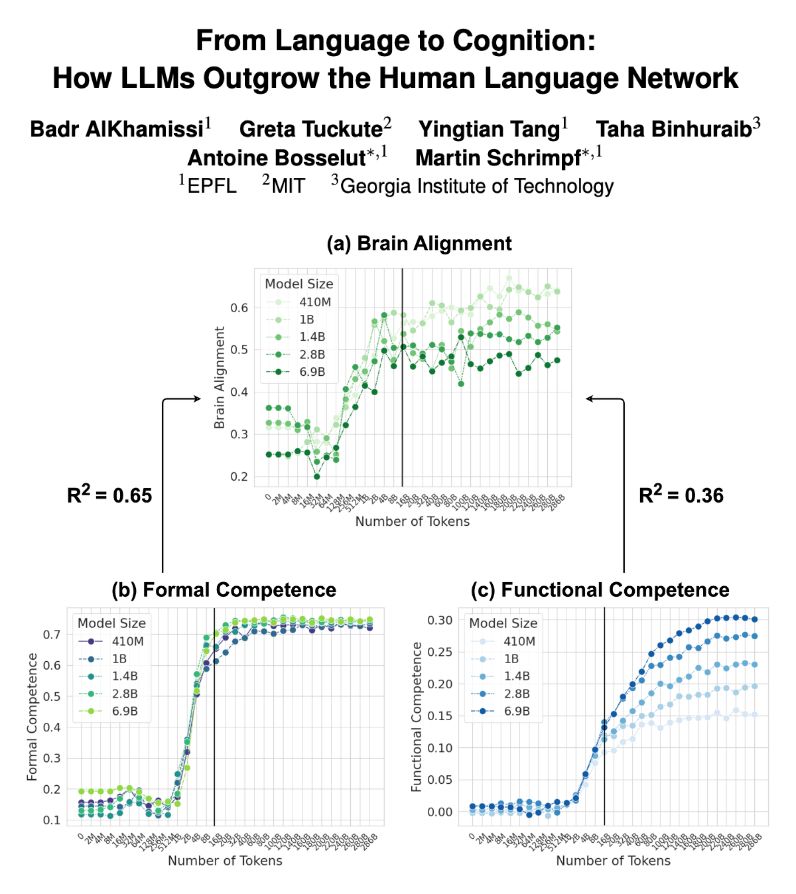

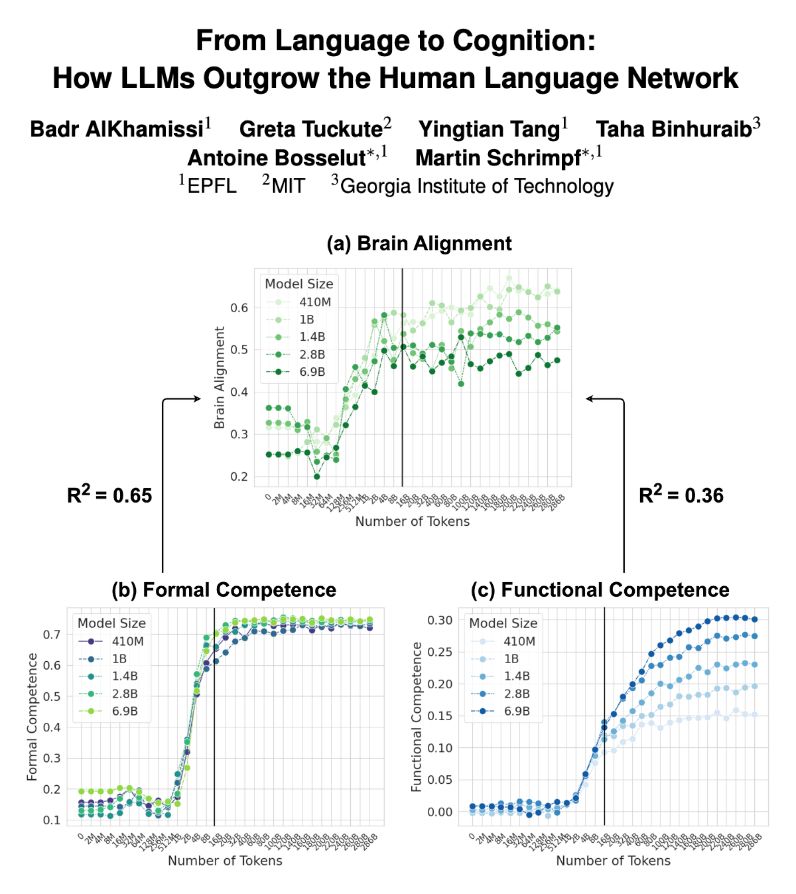

LLMs trained on next-word prediction (NWP) show high alignment with brain recordings. But what drives this alignment—linguistic structure or world knowledge? And how does this alignment evolve during training? Our new paper explores these questions. 👇🧵

How do #LLMs’ inner features change as they train? Using #crosscoders + a new causal metric, we map when features appear, strengthen, or fade across checkpoints—opening a new lens on training dynamics beyond loss curves & benchmarks.

#interpretability

Thrilled to share with you our latest work: “Mixture of Cognitive Reasoners”, a modular transformer architecture inspired by the brain’s functional networks: language, logic, social reasoning, and world knowledge.

1/ 🧵👇

"Hire Your Anthropologist!" 🎓

Led by the amazing Mai Alkhamissi & @lrz-persona.bsky.social, under the supervision of @monadiab77.bsky.social. Don’t miss it! 😄

arXiv link coming soon!

Looking forward to all the discussions! 🎤 🧠

First, very happy to present our work on TopoLM as an oral, here with @neilrathi.bsky.social

initial thread: bsky.app/profile/hann...

paper: doi.org/10.48550/arX...

code: github.com/epflneuroailab

@neil_rathi

I will present our #ICLR2025 Oral paper on TopoLM, a topographic language model!

Oral: Friday, 25 Apr 4:18 p.m. (session 4C)

Poster: Friday, 25 Apr 10 a.m. --> Hall 3 + Hall 2B

Paper: arxiv.org/abs/2410.11516

Code and weights: github.com/epflneuroailab

Interested to hear your thoughts on this matter!

medium.com/@bkhmsi/the-...

Must-watch video:

youtu.be/YdOXS_9_P4U?...

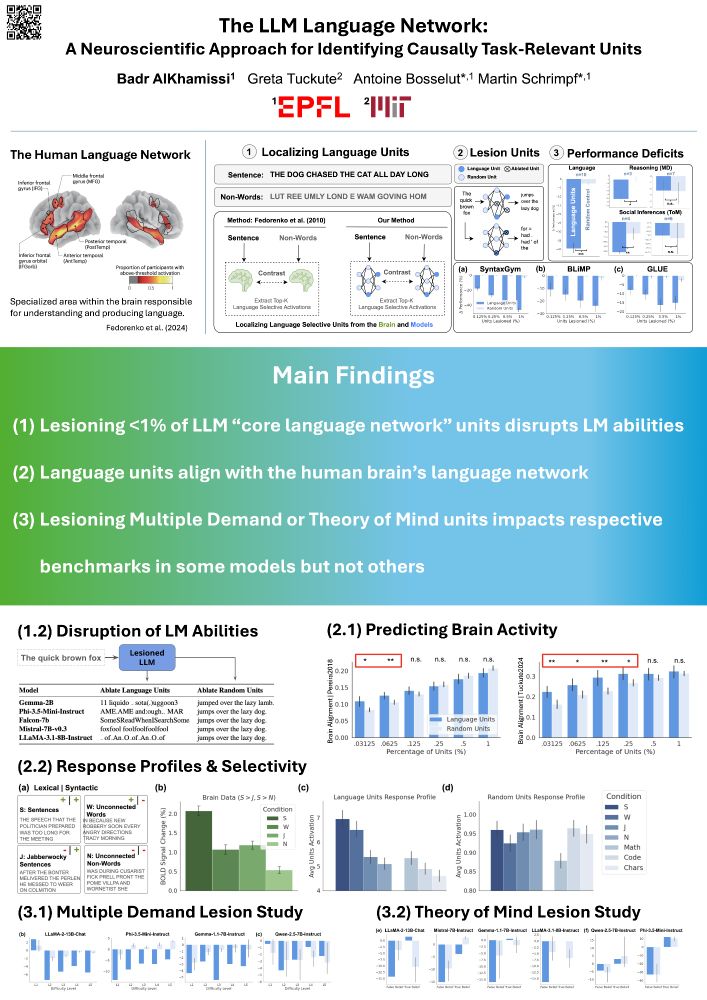

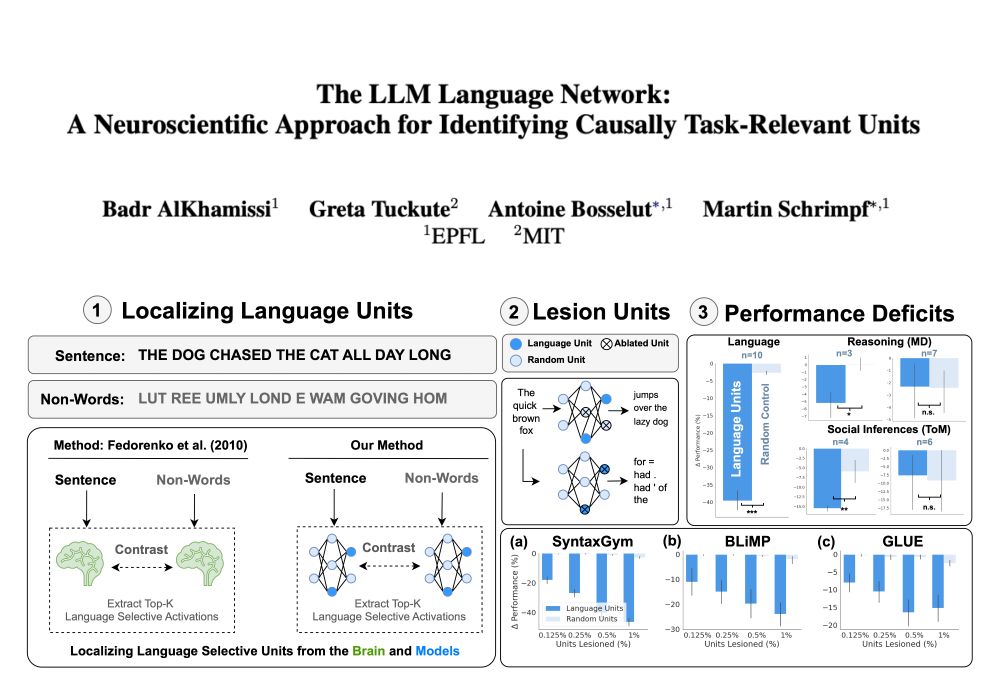

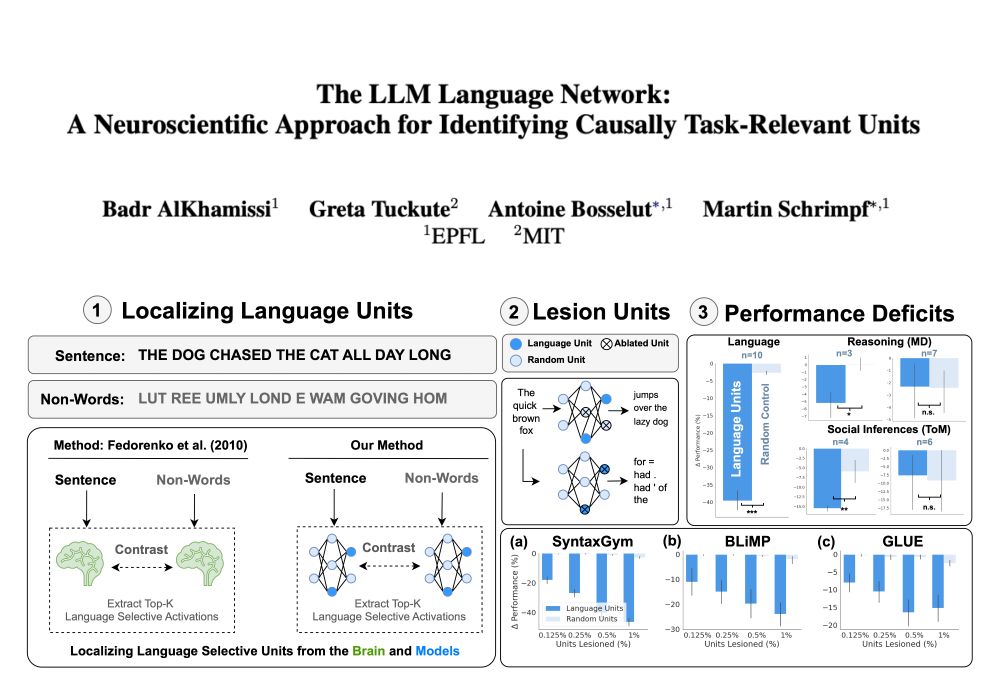

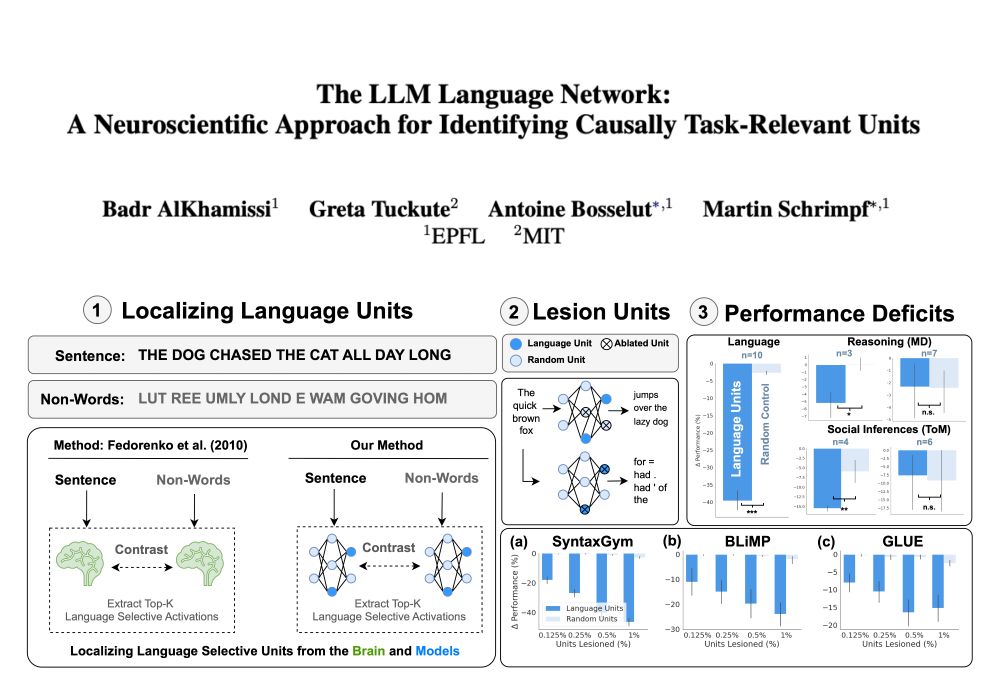

Can neuroscience localizers uncover brain-like functional specializations in LLMs? 🧠🤖

Yes! We analyzed 18 LLMs and found units mirroring the brain's language, theory of mind, and multiple demand networks!

w/ @gretatuckute.bsky.social, @abosselut.bsky.social, @mschrimpf.bsky.social

🧵👇

Know someone not listed? Submit their info and let's grow this inspiring community! 🤝

Explore here: bkhmsi.github.io/egyptians-in...

Moreover, this table shows the effect of ablations on next-word prediction for a few sample models:

We identify "language network" units in LLMs using neuroscience approaches and show that ablating these units (but not random ones) drastically impairs LLM language performance. Moreover, these units align better with human brain data.